Multiprocessing with NumPy Arrays – GeeksforGeeks

Multiprocessing lets a Python program run work in several processes at the same time, which can shorten the run time of CPU-bound tasks on multi-core machines. In this article, we will see how to use multiprocessing with NumPy arrays.

NumPy is a library for the Python programming language that provides support for arrays and matrices. Working with large arrays can be computationally intensive, and this is where multiprocessing can help. By dividing the work into smaller pieces that run simultaneously in separate processes, we can reduce the overall processing time, provided the work per piece outweighs the cost of starting processes and passing data between them.

Syntax of the multiprocessing.Pool() method:

multiprocessing.pool.Pool([processes[, initializer[, initargs[, maxtasksperchild[, context]]]]])

A process pool object controls a pool of worker processes to which jobs can be submitted. It supports asynchronous results with timeouts and callbacks and has a parallel map implementation.

Parameters:

- processes: the number of worker processes to use. If processes is None, the number returned by os.cpu_count() is used.

- initializer, initargs: if initializer is not None, each worker process calls initializer(*initargs) when it starts.

- maxtasksperchild: the number of tasks a worker process can complete before it exits and is replaced with a fresh worker process, so that unused resources can be freed. The default is None, which means worker processes live as long as the pool.

- context: the context used for starting the worker processes. Usually, a pool is created using the function multiprocessing.Pool() or the Pool() method of a context object. In both cases, context is set appropriately.
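As a minimal sketch of how these parameters fit together, the following creates a pool whose workers each run an initializer once at start-up, then uses both the parallel map and an asynchronous call with a timeout. The names `init_worker`, `scale`, and `FACTOR` are hypothetical and chosen only for illustration.

```python
import multiprocessing as mp

import numpy as np

# Hypothetical per-worker global, set once by the initializer.
FACTOR = None


def init_worker(factor):
    """Runs once in each worker process when it starts."""
    global FACTOR
    FACTOR = factor


def scale(chunk):
    """Multiply a NumPy array by the factor stored by init_worker."""
    return chunk * FACTOR


if __name__ == "__main__":
    chunks = np.array_split(np.arange(8), 4)
    with mp.Pool(
        processes=2,              # two worker processes
        initializer=init_worker,  # called as init_worker(*initargs)
        initargs=(3,),
        maxtasksperchild=10,      # replace a worker after 10 tasks
    ) as pool:
        # Parallel map: blocks until every chunk has been processed.
        print(pool.map(scale, chunks))
        # Asynchronous submission, with a timeout on fetching the result.
        async_result = pool.apply_async(scale, (np.array([1, 2]),))
        print(async_result.get(timeout=10))
```

The `if __name__ == "__main__":` guard matters on platforms that start workers by re-importing the main module, since it keeps the pool from being created again inside each worker.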

Example 1:

Here’s an example that demonstrates how to use multiprocessing with NumPy arrays. In this example, we will create an array of random numbers, then use multiprocessing to multiply each element in the array by 2.

The `multiprocessing.Pool` class is used to create a pool of processes that can be used to execute the `multiply_elements` function. The `map` method is used to apply the function to each subarray in the array. The result is a list of arrays, each of which contains the result of multiplying the corresponding subarray by 2.
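The description above can be sketched roughly as follows. This is an illustrative reconstruction, not the article's original listing: the value range, the choice of 4 chunks, and the final `np.concatenate` step are assumptions.

```python
import multiprocessing as mp

import numpy as np


def multiply_elements(subarray):
    """Return a new array with every element doubled."""
    return subarray * 2


if __name__ == "__main__":
    # Assumption: 100 random integers in [0, 100).
    arr = np.random.randint(0, 100, size=100)
    # Assumption: split into 4 chunks; the original chunking is not shown.
    subarrays = np.array_split(arr, 4)

    with mp.Pool(processes=4) as pool:
        # map returns one doubled array per input chunk, in order.
        result = pool.map(multiply_elements, subarrays)

    print(arr)
    print("------------------------------------------")
    # Join the per-chunk results back into a single array.
    print(np.concatenate(result))
```

The output listed for this example shows one single-element array per input value, which suggests the original split the array into 100 one-element pieces. Larger chunks, as in this sketch, mean fewer tasks and less per-task overhead.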

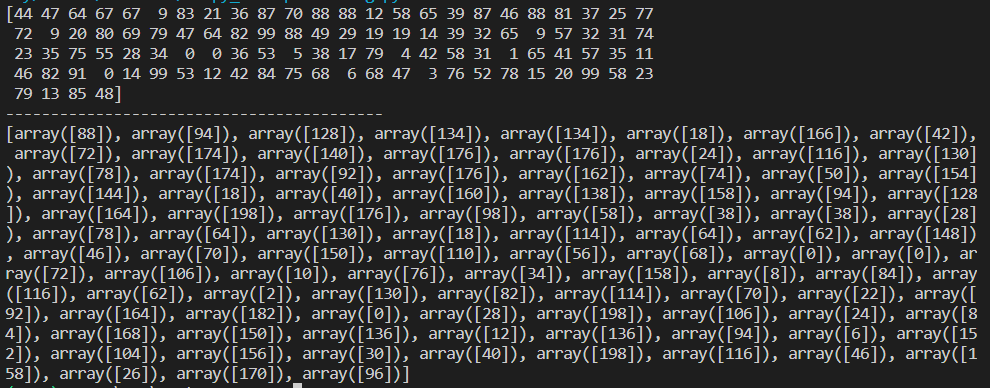

Output:

[44 47 64 67 67 9 83 21 36 87 70 88 88 12 58 65 39 87 46 88 81 37 25 77 72 9 20 80 69 79 47 64 82 99 88 49 29 19 19 14 39 32 65 9 57 32 31 74 23 35 75 55 28 34 0 0 36 53 5 38 17 79 4 42 58 31 1 65 41 57 35 11 46 82 91 0 14 99 53 12 42 84 75 68 6 68 47 3 76 52 78 15 20 99 58 23 79 13 85 48]

------------------------------------------

[array([88]), array([94]), array([128]), array([134]), array([134]), array([18]), array([166]), array([42]), array([72]), array([174]), array([140]), array([176]), array([176]), array([24]), array([116]), array([130]), array([78]), array([174]), array([92]), array([176]), array([162]), array([74]), array([50]), array([154]), array([144]), array([18]), array([40]), array([160]), array([138]), array([158]), array([94]), array([128]), array([164]), array([198]), array([176]), array([98]), array([58]), array([38]), array([38]), array([28]), array([78]), array([64]), array([130]), array([18]), array([114]), array([64]), array([62]), array([148]), array([46]), array([70]), array([150]), array([110]), array([56]), array([68]), array([0]), array([0]), array([72]), array([106]), array([10]), array([76]), array([34]), array([158]), array([8]), array([84]), array([116]), array([62]), array([2]), array([130]), array([82]), array([114]), array([70]), array([22]), array([92]), array([164]), array([182]), array([0]), array([28]), array([198]), array([106]), array([24]), array([84]), array([168]), array([150]), array([136]), array([12]), array([136]), array([94]), array([6]), array([152]), array([104]), array([156]), array([30]), array([40]), array([198]), array([116]), array([46]), array([158]), array([26]), array([170]), array([96])]

Example 2:

Let’s consider a simple example where we want to compute the sum of all elements in an array. In a single-process implementation, we would write the following code:
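A minimal sketch of such a single-process version is given here. It assumes the array holds the integers 1 to 10, which is consistent with the sum of 55 reported for this example; the function name `single_process_sum` is hypothetical.

```python
import time

import numpy as np


def single_process_sum(arr):
    """Sum all elements of arr in the current process."""
    return np.sum(arr)


if __name__ == "__main__":
    # Assumption: the integers 1..10, whose sum is 55.
    arr = np.arange(1, 11)

    start = time.perf_counter()
    total = single_process_sum(arr)
    elapsed = time.perf_counter() - start

    # Prints the sum followed by the elapsed time in seconds.
    print(total, elapsed)
```

The elapsed time will vary from machine to machine and from run to run.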

The time.perf_counter() function of the time module returns the value of a high-resolution performance counter as a float, in seconds. The difference between two readings gives the elapsed time.

Output:

55 4.579999999998474e-05

Now, let’s see how we can perform the same computation using multiple processes. The following code demonstrates how to use the multiprocessing module to split the array into smaller chunks and perform the computation in parallel:

Output:

55 0.3379081

In the above code, we have split the original array into 4 chunks and assigned each chunk to a separate process. The worker function takes an array as input and returns the sum of its elements. The multi_process_sum function creates a pool of 4 processes and maps the worker function to each chunk of the array. The results from each process are collected and summed up to get the final result, which is 55, just like the single-process implementation. Note the timings, however: the multi-process run took about 0.34 seconds, against roughly 0.00005 seconds for the single-process run, most likely because starting worker processes and passing data between them costs far more than summing so small an array.
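The steps described above can be sketched as follows. The names `worker` and `multi_process_sum` come from the description; the use of `np.array_split`, the `n_procs` parameter, and the input array of 1 to 10 are assumptions made for illustration.

```python
import multiprocessing as mp
import time

import numpy as np


def worker(chunk):
    """Return the sum of one chunk of the array."""
    return np.sum(chunk)


def multi_process_sum(arr, n_procs=4):
    """Split arr into n_procs chunks and sum them in a process pool."""
    chunks = np.array_split(arr, n_procs)
    with mp.Pool(processes=n_procs) as pool:
        partial_sums = pool.map(worker, chunks)
    # Combine the per-chunk sums into the final total.
    return sum(partial_sums)


if __name__ == "__main__":
    arr = np.arange(1, 11)  # assumption: 1..10, which sums to 55

    start = time.perf_counter()
    total = multi_process_sum(arr)
    elapsed = time.perf_counter() - start

    print(total, elapsed)
```

`np.array_split` is used here because it accepts a length that is not divisible by the number of chunks (10 elements become chunks of 3, 3, 2, and 2), whereas `np.split` raises an error in that case.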

In conclusion, multiprocessing with NumPy arrays can speed up computationally intensive operations when each chunk of work is large enough to outweigh the overhead of starting processes and transferring data. Used that way, it helps make the most of a computer’s cores.
