2024: The year of the value-driven data person

Growth at all costs has been replaced with a need to operate efficiently and be ROI-driven–data teams are no exception

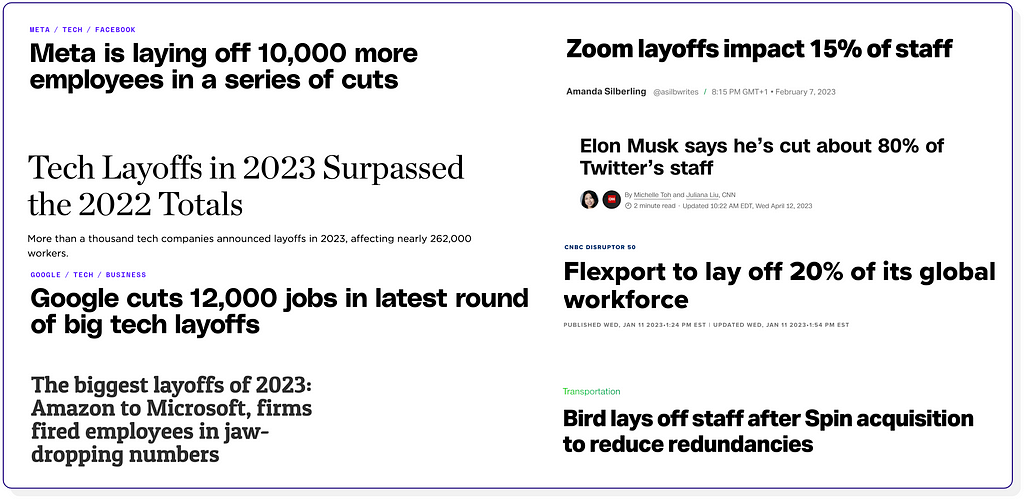

It’s been a whirlwind if you’ve worked in tech over the past few years.

- VC funding declined by 72% from 2022 to 2023

- New IPOs fell by 82% from 2021 to 2022

- More than 150,000 tech workers were laid off in the US in 2023

During the heyday that lasted until 2021, funding was easy to come by, and teams couldn’t grow fast enough. In 2022, growth at all costs was replaced with profitability goals. Budgets were no longer allocated based on finger-in-the-air goals but were heavily scrutinized by the CFO.

Data teams were not isolated from this. A 2023 survey by dbt found that 28% of data teams planned on reducing headcount.

Looking at the number of data roles in selected scale-ups, compared to the start of last year, more have reduced headcount than have expanded.

The new reality for data teams

Data teams now find themselves at a chasm.

On one hand, the ROI of data teams has historically been difficult to measure. On the other hand, AI is having its moment (according to a survey by MIT Technology Review, 81% of executives believe that AI will be a significant competitive advantage for their business). AI & ML projects often have clearer ROI cases, and data teams are at the center of this, with an increasing number of machine learning systems being powered by the data warehouse.

So, what are data people to do in 2024?

Below, I’ve looked into five steps you can take to make sure you’re well-positioned and aligned to business value if you work in a data role.

Ask your stakeholders for feedback

People like it when they get to share their opinions about you. It makes them feel listened to and gives you a chance to learn about your weak spots. You should lean into this and proactively ask your important stakeholders for feedback.

While you may not want to survey everyone in the company, you can create a group of people most reliant on data, such as everyone in a senior role. Ask them to give candid, anonymous feedback on questions such as happiness about self-serve, the quality of their dashboards, and if there are enough data people in their area (this also gives you some ammunition before asking for headcount).

End with the question, “If you had a magic wand, what would you change?” to allow them to come up with open-ended suggestions.

Survey results–data about data teams’ data work. It doesn’t get better…

Be transparent with the survey results and play them back to the stakeholders with a clear action plan for addressing areas that need improvement. If you run the survey every six months and put your money where your mouth is, you can come back and show meaningful improvements. Make sure to collect data about which business area the respondents work in. This will give you invaluable insights into where you’ve got blind spots and whether there are specific pain points in business areas you didn’t know about.
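To make the point about business areas concrete, here is a minimal Python sketch of how survey scores could be broken down by area to surface blind spots. The responses, question names, the 1 to 5 scale, and the threshold of 3.0 are all made up for illustration; your survey will look different.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical anonymous responses: (business area, question, score from 1 to 5).
responses = [
    ("Marketing", "self_serve", 2),
    ("Marketing", "dashboard_quality", 4),
    ("Finance", "self_serve", 4),
    ("Finance", "dashboard_quality", 5),
    ("Operations", "self_serve", 1),
    ("Operations", "self_serve", 2),
    ("Operations", "dashboard_quality", 3),
]


def average_by_area(rows):
    """Average score per (business area, question) pair."""
    scores = defaultdict(list)
    for area, question, score in rows:
        scores[(area, question)].append(score)
    return {key: mean(values) for key, values in scores.items()}


def weakest_spots(averages, threshold=3.0):
    """Pairs scoring below the (assumed) threshold, lowest average first."""
    low = [key for key, value in averages.items() if value < threshold]
    return sorted(low, key=lambda key: averages[key])


averages = average_by_area(responses)
for area, question in weakest_spots(averages):
    print(f"{area}: {question} averages {averages[(area, question)]:.1f}")
```

Running the same breakdown on each six-monthly survey gives you a like-for-like comparison to play back to stakeholders.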

Build a business case as if you were seeking VC funding

You can sit back and wait for stakeholder requests to come to you. But if you’re like most data people, you want to have a say in what projects you work on and may even have some ideas yourself.

Back in my days as a Data Analyst at Google, one of the business unit directors shared a wise piece of advice: “If you want me to buy into your project, present it to me as if you were a founder raising capital for your startup”. This may sound like Silicon Valley talk, but he had some valid points when I dug into it.

- Show what the total $ opportunity is and what % you expect to capture

- Show that you’ve made an MVP to prove that it can be done

- Show me the alternatives and why I should pick your idea

Example–ML model business case proposal summary

Business case proposals like the one above are presented to a few of the senior stakeholders in your area to get buy-in that you should spend your time here instead of one of the hundreds of other things you could be doing. It gives them a transparent forum for becoming part of the project and being brought in from the get-go–and also a way to shoot down projects early where the opportunity is too small or the risk too big.

Projects such as a new ML model or a new project to create operational efficiencies are particularly well suited for this. But even if you’re asked to revamp a set of data models or build a new company-wide KPI dashboard, applying some of the same principles can make sense.
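The director’s first and third points (size the opportunity, then compare alternatives) boil down to simple arithmetic. The sketch below is one possible way to lay it out in Python; the project names and every dollar figure are hypothetical, and the ROI definition used here (net expected value divided by cost) is just one common convention.

```python
from dataclasses import dataclass


@dataclass
class BusinessCase:
    name: str
    total_opportunity: float  # total $ opportunity per year (hypothetical)
    capture_rate: float       # share you expect to capture, between 0 and 1
    cost: float               # estimated yearly cost to build and run

    @property
    def expected_value(self) -> float:
        return self.total_opportunity * self.capture_rate

    @property
    def roi(self) -> float:
        # Net expected value relative to cost.
        return (self.expected_value - self.cost) / self.cost


# Two made-up alternatives targeting the same opportunity.
cases = [
    BusinessCase("Manual retention campaign", 2_000_000, 0.03, 40_000),
    BusinessCase("Churn prediction model", 2_000_000, 0.10, 100_000),
]

ranked = sorted(cases, key=lambda case: case.roi, reverse=True)
for case in ranked:
    print(f"{case.name}: expected ${case.expected_value:,.0f}, ROI {case.roi:.0%}")
```

The numbers will always be rough estimates, but writing them down makes it much easier for stakeholders to challenge the assumptions rather than the idea.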

Take a holistic approach to cost reduction

When you think about cost, it’s easy to end up where you can’t see the forest for the trees. For example, it may sound impressive that a data analyst can shave off $5,000/month by optimizing some of the longest-running queries in dbt. But while these achievements shouldn’t be ignored, a more holistic approach to cost savings is helpful.

Start by asking yourself what all the costs of the data team consist of and what the implications of this are.

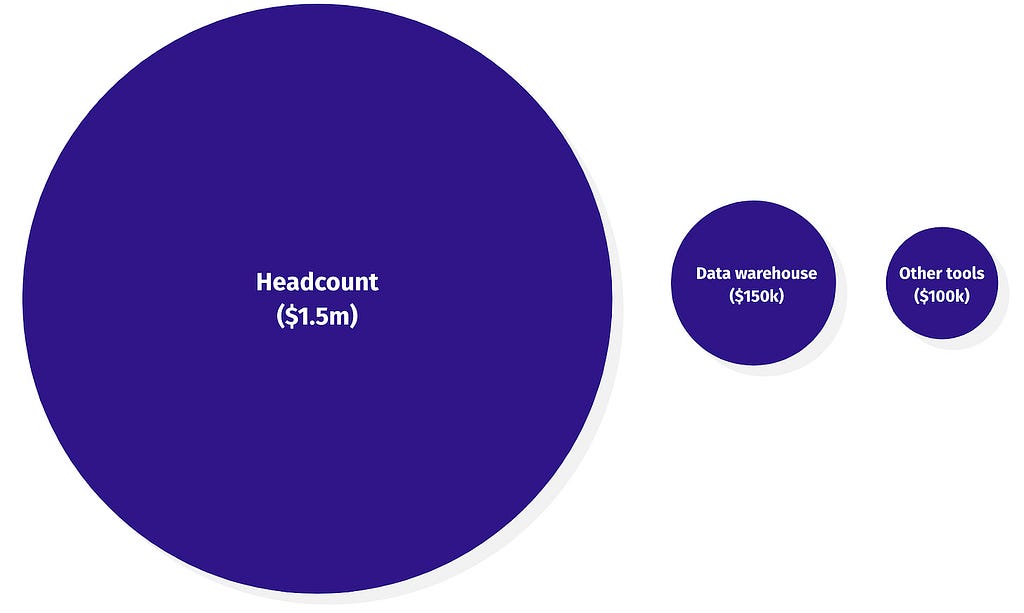

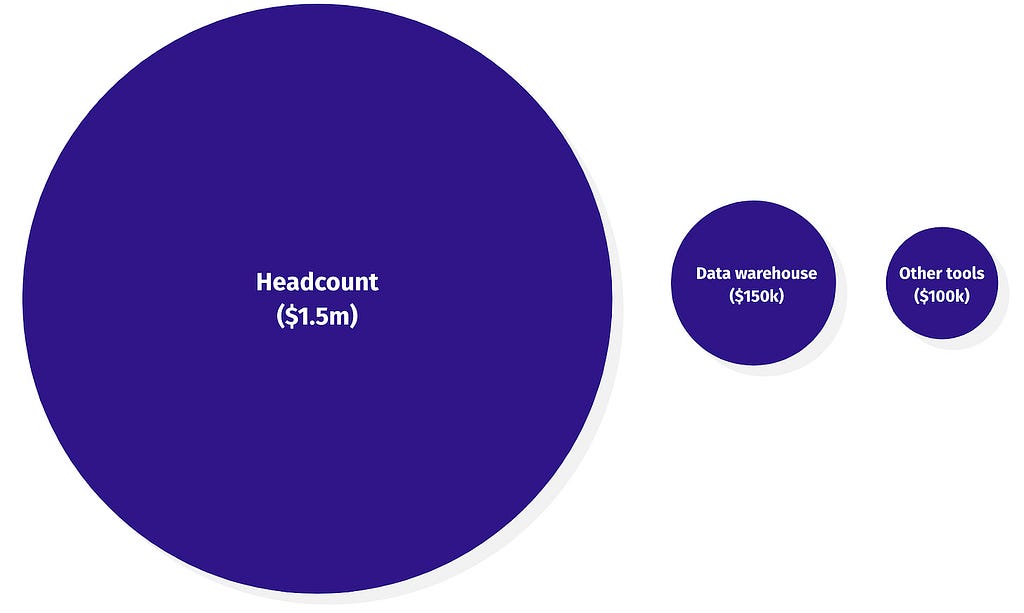

If you take a typical mid-sized data team in a scaleup, it’s not uncommon to see the three largest cost drivers be disproportionately allocated as:

- Headcount (15 FTEs x $100,000): $1,500,000

- Data warehouse costs (e.g., Snowflake, BigQuery): $150,000

- Other data tooling (e.g., Looker, Fivetran): $100,000

This is not to say that you should immediately be focusing on headcount reduction, but if your cost distribution looks anything like the above, ask yourself questions like:

- Should we have 2x FTEs build this in-house tool, or could we buy it instead?

- Are there low-value initiatives where expensive headcount is tied up?

- Are two weeks of work for a $5,000 cost-saving a good return on investment?

- Are there optimizations in the development workflow, such as the speed of CI/CD checks, that could be improved to free up time?
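As a rough illustration, the cost split and the two-week question can be worked through in a few lines of Python. The cost figures come from the example team above; the 52-week year and the reading of the $5,000 as a one-off saving are my assumptions, so adjust them to your own situation.

```python
# Yearly costs of the example team above.
annual_costs = {
    "Headcount": 1_500_000,
    "Data warehouse": 150_000,
    "Other data tooling": 100_000,
}

total_cost = sum(annual_costs.values())
shares = {name: cost / total_cost for name, cost in annual_costs.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%} of total cost")

COST_PER_FTE = 100_000  # per year, from the example above
WEEKS_PER_YEAR = 52     # assumption: ignores holidays and overhead


def effort_cost(weeks: float) -> float:
    """Approximate salary cost of one person working for the given number of weeks."""
    return COST_PER_FTE * weeks / WEEKS_PER_YEAR


saving = 5_000  # assumption: treated as a one-off saving
net_gain = saving - effort_cost(2)
print(f"Two weeks of work costs about ${effort_cost(2):,.0f}; net gain ${net_gain:,.0f}")
```

Under these assumptions, headcount makes up well over 80% of the total, and two weeks of one person’s time eats most of a one-off $5,000 saving. If the saving recurs every month, the picture changes, which is exactly why it’s worth writing the numbers down.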

Balance speed and quality

I’ve seen teams get bogged down by having tens of thousands of dbt tests across thousands of data models. It’s hard to know which ones are important, and developing new data models takes twice as long because everything is scrutinized through the same lens.

On the other hand, teams who barely test their data pipelines and build data models that don’t follow solid data modeling principles too often find themselves slowed down and have to spend twice as much time cleaning up and fixing issues retrospectively.

The value-driven data person carefully balances speed and quality through:

- A shared understanding of what the most important data assets are

- Definitions of the level of testing that’s expected for data assets based on importance

- Expectations and SLAs for issues based on severity
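One way to make these three points explicit is to write them down as a small, shared definition. The Python sketch below is only an illustration: the tier names, asset names, test labels, and SLA hours are all hypothetical and not tied to any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    required_tests: tuple      # illustrative test labels expected at this tier
    resolve_within_hours: int  # assumed SLA for fixing issues at this tier


# Hypothetical importance tiers with their testing expectations and SLAs.
TIERS = {
    "critical": Tier(("not_null", "unique", "freshness", "reconciliation"), 4),
    "important": Tier(("not_null", "unique"), 24),
    "exploratory": Tier((), 120),
}

# Shared understanding of which assets matter most (made-up asset names).
ASSET_TIERS = {
    "revenue_daily": "critical",
    "marketing_attribution": "important",
    "adhoc_experiment_scratch": "exploratory",
}


def missing_tests(asset: str, existing_tests: set) -> list:
    """Tests the asset's tier expects that the asset does not have yet."""
    tier = TIERS[ASSET_TIERS[asset]]
    return [test for test in tier.required_tests if test not in existing_tests]


print(missing_tests("revenue_daily", {"not_null"}))
print(missing_tests("adhoc_experiment_scratch", set()))
```

The point of a definition like this is not the code itself but that a scratch table and a revenue table are no longer held to the same bar.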

They also know that to be successful, their company needs to operate more like a speed boat and less like a tanker–taking quick turns as you learn through experiments what works and what doesn’t, reviewing progress every other week, and giving autonomy to each team to set their direction.

Data teams often operate under uncertainty (e.g., will this ML model work). The faster you ship, the quicker you learn what works and what doesn’t. The best data people are careful always to keep this in mind and know where on the curve they fall.

For example, if you’re an ML engineer working on a model to decide which customers can sign up for a multi-billion dollar neobank, you can no longer get away with quick and dirty work. But if you’re working in a seed-stage startup where the entire backend may be redone in a few months, you know to sometimes prioritize speed over quality.

Proactively share the impact of your work

People in data roles are often not the ones to shout the loudest about their achievements. While nobody wants to be a shameless self-promoter, there’s a balance to strive towards.

If you’ve done work that had an impact, don’t be afraid to let your colleagues know. It’s even better if you have some numbers to back it up (who better to put numbers to the impact of data work than you?). When doing this, it’s easy to get bogged down in implementation details: how hard it was to build, the fancy algorithm you used, or how many lines of code you wrote. But stakeholders care little about this. Instead, consider this framing.

- Focus on impact

- Instead of saying “delivered X”

- Use “delivered X, and it had Y impact”

Don’t be afraid to call out early when things are not progressing as expected. For example, call it out if you’re working on a project going nowhere or getting increasingly complex. You may fear that you put yourself at risk by doing so, but your stakeholders will perceive it as showing a high level of ownership and not falling for the sunk cost fallacy.

If you’ve any more ideas or questions about the article, reach out to me on LinkedIn.

2024: The year of the value-driven data person was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
