A Small Taste of Classical AI: Solving Search Problems | by Dr. Robert Kübler | Jun, 2022

Learn how to solve search problems such as in a systematic and general way

In the broad field of artificial intelligence, there is the exciting branch of state-space search problems that is filled with all of your favorite puzzles:

Maybe you had to write algorithms to solve some of these or other search problems of that style. Chances are also that you had a uniquely tailored algorithm for each of these problems, which is quite tedious.

In this article, I want to show you

- how these (and other) problems are connected and

- how to write a meta-algorithm that solves all of them.

SPOILER: Find the code on my Github.

The problems — which I will also call games from now — above are not as complex as chess, Go, or any advanced computer game. Still, they are interesting to study, and solving some of them can be NP-hard already, i.e. probably there exist no polynomial-time algorithms to solve them in general.

Let me sketch which kind of games we will solve now.

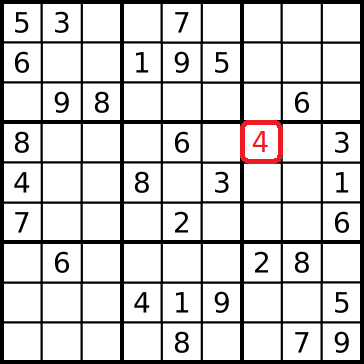

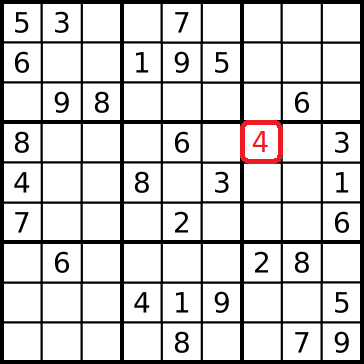

Games have several game states, one of them being the initial state that we will find when we start the problem. A game state is just all the essential information about the status of the game. In a Sudoku, this might be the field including all of the numbers. it can be conveniently represented as a 9×9 matrix filled with digits, with a 0 for empty fields, for example.

You can take an action to change one game state to another game state. In a Sudoku, filling in numbers are the only actions you can take. You have to specify an empty cell and a number, and then write the number into the specified cell, changing the game state.

There is also a goal state that we try to reach, that lets us win the game. In a Sudoku, each row, column and block have to show all digits 1–9 exactly once.

Okay, if you have taken a look into some of the above problems and puzzles, you have probably noticed that they all seem quite different. How can we combine them into a single framework? Well, the following is the only secret:

Solving search problems boils down to graph traversal.

So, what does that mean? I hope that you have heard about algorithms like depth-first search (DFS) and breadth-first search (BFS). These algorithms allow you to walk through a graph systematically, just like we want to systematically solve these puzzles. If you are new to this, just read on because you will learn about them now. Let us use the pancake sorting problem as an example because it is less complex than Sudoku and you can see a different setting for game states and actions.

Pancake Sorting 🥞

The problem can be described like this:

You have a disordered stack of n pancakes of different sizes. You want to sort this pile (smallest pancake on top, largest one at the bottom) using a spatula by flipping parts of the stack.

See the following picture as one example of one possible action:

Let us number the pancakes according to their size, 1 being the smallest and 6 the biggest. In the picture above, we are in the game state (2, 1, 4, 6, 3, 5), top to bottom. Using the action “Flip at position 3”, we can turn the old game state into the new one (4, 1, 2, 6, 3, 5), i.e. the order of the top 3 pancakes is reversed.

Systematic Solution For 4 Pancakes

Let us assume that we start in the initial game state (4, 2, 1, 3). Again, 4 is the largest pancake, and 1 is the smallest. I record the game state from top to bottom.

We now take this state and enumerate all possible next states. We call this step expanding the current state.

Note that we can drop the “Flip at 1” action because it is just idling around, i.e. it results in the same state again.

You probably know the drill now. We keep expanding the four new nodes until we find our goal state:

In the end, it could look like this, omitting a lot of states:

Great, right? And the best thing is that this method does not need anything special about the pancake problem. It needs states and actions, that’s it. We can also put 9×9 sudoku fields in the nodes, chess boards (for the n queens problem), or anything we want. The important things are the following:

- We need a way to recognize a goal state.

- Given a state, we need a way to enumerate the next states.

We will formalize this algorithm now.

First, let us define an SearchProblem base class to agree on an interface that search problems should have. This class will still have missing functionality, it is merely a template. We will derive concrete classes from it and implement the missing functionality afterward. Here we go:

class SearchProblem:

def __init__(self, state):

self.state = statedef is_solution(self):

# Implement me!

pass

def get_next_states(self):

# Implement me!

pass

def __hash__(self):

# Implement me!

pass

def __repr__(self):

return str(self.state)

def __eq__(self, other):

return self.state == other.state

__hash__ , __eq__ and __repr__ (but also __init__) are examples of the so-called magic functions or dunder methods. Implementing __hash__ allows us to use the Python hash function on objects of this class. Type hash((1, 2, 3)) as an example, that gives you the hash value of the tuple (1, 2, 3).

Hash Functions: Intuitively, hash functions should map objects to integers. While this is a deterministic process (i.e. same input leads to same output), when we feed a hash function a lot of distinct objects, the resulting distribution of the hash values should look uniformly distributed.

Why do we need this? We need both of these methods because our problem solver algorithm has to put search problem objects as keys into a dictionary to work efficiently, and keys have to be hashable, i.e. hash(our_object) should not throw an error.

Implementing __eq__ lets us compare two different objects, i.e. are they equal or not. Usually, we just check if the state is equal, hence I gave it a concrete implementation already.

Implementing __repr__ just gives a nice representation of an object of the class when printing it. Usually, just printing the state should be fine.

Great, so a general search problem can be initialized and assigned a (starting) state. We can also check if two problems are the same by checking if their state is equal, and we can print it nicely. But the most important details still have to be fleshed out and heavily depend on the problem.

Back to The Pancakes

Let us turn to the pancake sorting problem again to give concrete implementations of is_solution , get_next_states and __hash__ .

Our convention: The state will be a list consisting of integers 1 to n. Integers represents pancake sizes. The first element in the list is the top pancake. One example can be [4, 2, 1, 3].

class PancakeSortingProblem(SearchProblem):

def is_solution(self):

for i in range(len(self.state)-1):

if self.state[i] > self.state[i+1]:

return False

return Truedef get_next_states(self):

for i in range(2, len(self.state)+1):

upper = self.state[:i]

lower = self.state[i:]

next_state = upper[::-1] + lower

yield PancakeSortingProblem(next_state)

def __hash__(self):

n = max(self.state) + 1

return sum([x*n**i for i, x in enumerate(self.state)])

I think the implementations for is_solution and get_next_states should not be too complicated to follow. is_solution really just checks if an array is sorted. get_next_states takes a state (list) and returns all following states by flipping the upper part of the pancake stack for every feasible position. Let’s try this out:

p = PancakeSortingProblem([4, 2, 1, 3]) # start state = [4, 2, 1, 3]

for next_state in p.get_next_states():

print(next_state)# Output (thanks to the __repr__ method!):

# [2, 4, 1, 3]

# [1, 2, 4, 3]

# [3, 1, 2, 4]

Note: I skipped one of the four next states because the “Flip at position 1” action is basically a “Do nothing” action. It just adds complexity, hence we drop it.

Let’s address the elephant in the room: dat hash. What was I doing there? The goal was to take a state and turn it into an integer, the hash value.

How do I get there? By computing

That’s all I implemented. Basically, I treat the state [4, 2, 1, 3] as the 5-ary (like binary, just with base 5 instead of 2) number 3124 (4213 reversed) and convert it back to the decimal system. This has the effect that two different states have two different hash values (technical detail: as long as the result are less than 2⁶³), which is nice for performance reasons. Let’s leave it at that.

You can also make up another hash function yourself, just try to create something that assigns different states to different hash values most of the time, without using randomness. Doing something like this is technically possible:

def __hash__(self):

return 1

but this results in slower algorithms further down the path, so avoid doing this. Great, we have a working problem class, so we can deal with the solution now!

Defining The Search Problem Solver Class

Let us describe the algorithm in words.

- We take the starting state (in form of a problem object) and put it into a list called

frontier. - We pop a state (i.e. remove it from the list) and check if it is the goal. If not, we expand it.

- We append all of the next states that we haven’t seen so far into

frontier. This prevents us from running in circles. - Then repeat steps 2 and 3 until a goal is found, i.e. pop, check, expand, pop, check, expand, …

Basically, we fill a list (initially filled with the starting state) with all of the states we encounter. This is the list of states to be explored. Whenever this list is empty, there is nothing left to explore anymore. If we did not find the goal until then, there is no solution.

Since we insert every possible state into the list at most once, the algorithm will terminate.

In code, it can look like this:

class SearchProblemSolverDFS:

def solve(self, start_problem):

frontier = [start_problem]

self.backlinks = {start_problem: None}

self.solution = None while frontier and self.solution is None:

current_state = frontier.pop() if current_state.is_solution():

self.solution = current_state for next_state in current_state.get_next_states():

if next_state not in self.backlinks:

self.backlinks[next_state] = current_state

frontier.append(next_state) def print_solution(self):

current_state = self.solution

result = []

while current_state is not None:

result.append(current_state)

current_state = self.backlinks[current_state] return result[::-1]

Some comments about the code:

In the solve method, I also introduced the backlinks dictionary. This has two different purposes:

- Keep track of visited states, as you can see in the line

if next_state not in self.backlinks. - Keep track of how to get to each state, i.e. storing predecessors. The start state does not have a predecessor, hence I initialized it as

{start_problem: None}.

I also created a print_solution method that outputs how to get to the goal state from the start state.

Cool, let’s try it out!

Running The Code

We have done so much work, but just look how easy it is to apply everything now:

four_pancakes = PancakeSortingProblem([4, 2, 1, 3])

solver = SearchProblemSolverDFS()solver.solve(four_pancakes)

solver.print_solution()# Output:

# [[4, 2, 1, 3], [3, 1, 2, 4], [2, 1, 3, 4], [1, 2, 3, 4]]

This corresponds to the solution that we have seen in the picture above. Nice! Try it out with more pancakes, such as with

PancakeSortingProblem([4, 2, 1, 3, 5, 7, 6, 8])

The solution is loooooooooooong, and I will tell you why in a second.

The way we have implemented the SearchProblemSolver is via depth-first search (DFS). It has this name because it favors depth, and does not try to find the shortest solution. It behaves that way because we used a stack data structure to implement frontier . We always insert new states at the end of the list and pop elements from there as well (last-in-first-out or LIFO principle). That’s why new states immediately get explored. Imagine frontier to be a to-do list where newer things have a higher priority — the last thing you have written on the list gets tackled first.

It will take a long time until the states Next (a) and Next (b) get explored, although they are reachable within a single action from the starting state.

We can implement frontier differently, for example as a queue. Using this data structure, we can insert new states at the end of the list (as before) but pop elements from the start of the list (first-in-first-out or FIFO principle).

An inefficient and cheap way to do this is by replacing frontier.pop() with frontier.pop(0) . However, this is a slow operation (O(n) if there are n elements in the list), and using designated data structures to implement queues is better (O(1) then). For references, see here.

One way to do this is by using a deque:

from collections import dequeclass SearchProblemSolverBFS:

def solve(self, start_problem):

frontier = deque([start_problem])

...while frontier and self.solution is None:

current_state = frontier.popleft()...

...

This simple change creates a breadth-first search (BFS), which focuses on the shortest solutions. Let’s try it out:

eight_pancakes = PancakeSortingProblem([4, 2, 1, 3, 5, 7, 6, 8])

solver = SearchProblemSolverBFS()

solver.solve(eight_pancakes)

solver.print_solution()# Output:

#[[4, 2, 1, 3, 5, 7, 6, 8],

# [3, 1, 2, 4, 5, 7, 6, 8],

# [2, 1, 3, 4, 5, 7, 6, 8],

# [1, 2, 3, 4, 5, 7, 6, 8],

# [6, 7, 5, 4, 3, 2, 1, 8],

# [7, 6, 5, 4, 3, 2, 1, 8],

# [1, 2, 3, 4, 5, 6, 7, 8]]

Nice and short! However, the disadvantage of BFS is that it uses more memory, which can be quite a party crasher. There is also a hybrid between BFS and DFS called iterative deepening depth-first search. This finds the shortest solution without wasting too much memory, but it uses more run time.

In this article, we have seen how to solve search problems such as sudokus, sliding puzzles, or the pancake sorting problem systematically using graph search.

The solver that we have written is generic, we only have to create a problem class in which we define how the problem works, i.e.

- what does the goal look like

- and which states we can reach from any given state.

One interesting thing that we did not talk about though is the fact that some actions might be costlier than others. We cannot optimally solve these problems yet because our BFS solver only minimizes the absolute number of steps, meaning that we used an implicit constant cost of 1, for example.

In the case of pancakes, heterogenous costs could mean that maybe flipping the pancake pile at position k has a cost of k, the intuition being that flipping gets more difficult the more pancakes we flip at once. We might want a solution that minimizes the sum of these costs then. There are ways to get an optimal solution in this case as well, and we might take a look into this direction in another article. Keywords for now: Uniform-cost search (aka Dijkstra) and A*.

You can now create more problem classes and solve them without changing our problem solver classes. A simple next problem could be the burnt pancake problem (because why not 🔥🥞). There, the pancakes have a burnt and a non-burnt side. They should not only be in order but also the burnt sides of all pancakes should be at the bottom. You could express a state as something like [[4, 0], [2, 0], [1, 1], [3, 1]], meaning that we have the pancakes [4, 2, 1, 3] again, but the burnt side of pancakes 1 and 3 are on top. You only have to slightly alter the pancake class code for this.

Have fun solving puzzles!

I hope that you learned something new, interesting, and useful today. Thanks for reading!

As the last point, if you

- want to support me in writing more about machine learning and

- plan to get a Medium subscription anyway,

why not do it via this link? This would help me a lot! 😊

To be transparent, the price for you does not change, but about half of the subscription fees go directly to me.

Thanks a lot, if you consider supporting me!

If you have any questions, write me on LinkedIn!

Learn how to solve search problems such as in a systematic and general way

In the broad field of artificial intelligence, there is the exciting branch of state-space search problems that is filled with all of your favorite puzzles:

Maybe you had to write algorithms to solve some of these or other search problems of that style. Chances are also that you had a uniquely tailored algorithm for each of these problems, which is quite tedious.

In this article, I want to show you

- how these (and other) problems are connected and

- how to write a meta-algorithm that solves all of them.

SPOILER: Find the code on my Github.

The problems — which I will also call games from now — above are not as complex as chess, Go, or any advanced computer game. Still, they are interesting to study, and solving some of them can be NP-hard already, i.e. probably there exist no polynomial-time algorithms to solve them in general.

Let me sketch which kind of games we will solve now.

Games have several game states, one of them being the initial state that we will find when we start the problem. A game state is just all the essential information about the status of the game. In a Sudoku, this might be the field including all of the numbers. it can be conveniently represented as a 9×9 matrix filled with digits, with a 0 for empty fields, for example.

You can take an action to change one game state to another game state. In a Sudoku, filling in numbers are the only actions you can take. You have to specify an empty cell and a number, and then write the number into the specified cell, changing the game state.

There is also a goal state that we try to reach, that lets us win the game. In a Sudoku, each row, column and block have to show all digits 1–9 exactly once.

Okay, if you have taken a look into some of the above problems and puzzles, you have probably noticed that they all seem quite different. How can we combine them into a single framework? Well, the following is the only secret:

Solving search problems boils down to graph traversal.

So, what does that mean? I hope that you have heard about algorithms like depth-first search (DFS) and breadth-first search (BFS). These algorithms allow you to walk through a graph systematically, just like we want to systematically solve these puzzles. If you are new to this, just read on because you will learn about them now. Let us use the pancake sorting problem as an example because it is less complex than Sudoku and you can see a different setting for game states and actions.

Pancake Sorting 🥞

The problem can be described like this:

You have a disordered stack of n pancakes of different sizes. You want to sort this pile (smallest pancake on top, largest one at the bottom) using a spatula by flipping parts of the stack.

See the following picture as one example of one possible action:

Let us number the pancakes according to their size, 1 being the smallest and 6 the biggest. In the picture above, we are in the game state (2, 1, 4, 6, 3, 5), top to bottom. Using the action “Flip at position 3”, we can turn the old game state into the new one (4, 1, 2, 6, 3, 5), i.e. the order of the top 3 pancakes is reversed.

Systematic Solution For 4 Pancakes

Let us assume that we start in the initial game state (4, 2, 1, 3). Again, 4 is the largest pancake, and 1 is the smallest. I record the game state from top to bottom.

We now take this state and enumerate all possible next states. We call this step expanding the current state.

Note that we can drop the “Flip at 1” action because it is just idling around, i.e. it results in the same state again.

You probably know the drill now. We keep expanding the four new nodes until we find our goal state:

In the end, it could look like this, omitting a lot of states:

Great, right? And the best thing is that this method does not need anything special about the pancake problem. It needs states and actions, that’s it. We can also put 9×9 sudoku fields in the nodes, chess boards (for the n queens problem), or anything we want. The important things are the following:

- We need a way to recognize a goal state.

- Given a state, we need a way to enumerate the next states.

We will formalize this algorithm now.

First, let us define an SearchProblem base class to agree on an interface that search problems should have. This class will still have missing functionality, it is merely a template. We will derive concrete classes from it and implement the missing functionality afterward. Here we go:

class SearchProblem:

def __init__(self, state):

self.state = statedef is_solution(self):

# Implement me!

pass

def get_next_states(self):

# Implement me!

pass

def __hash__(self):

# Implement me!

pass

def __repr__(self):

return str(self.state)

def __eq__(self, other):

return self.state == other.state

__hash__ , __eq__ and __repr__ (but also __init__) are examples of the so-called magic functions or dunder methods. Implementing __hash__ allows us to use the Python hash function on objects of this class. Type hash((1, 2, 3)) as an example, that gives you the hash value of the tuple (1, 2, 3).

Hash Functions: Intuitively, hash functions should map objects to integers. While this is a deterministic process (i.e. same input leads to same output), when we feed a hash function a lot of distinct objects, the resulting distribution of the hash values should look uniformly distributed.

Why do we need this? We need both of these methods because our problem solver algorithm has to put search problem objects as keys into a dictionary to work efficiently, and keys have to be hashable, i.e. hash(our_object) should not throw an error.

Implementing __eq__ lets us compare two different objects, i.e. are they equal or not. Usually, we just check if the state is equal, hence I gave it a concrete implementation already.

Implementing __repr__ just gives a nice representation of an object of the class when printing it. Usually, just printing the state should be fine.

Great, so a general search problem can be initialized and assigned a (starting) state. We can also check if two problems are the same by checking if their state is equal, and we can print it nicely. But the most important details still have to be fleshed out and heavily depend on the problem.

Back to The Pancakes

Let us turn to the pancake sorting problem again to give concrete implementations of is_solution , get_next_states and __hash__ .

Our convention: The state will be a list consisting of integers 1 to n. Integers represents pancake sizes. The first element in the list is the top pancake. One example can be [4, 2, 1, 3].

class PancakeSortingProblem(SearchProblem):

def is_solution(self):

for i in range(len(self.state)-1):

if self.state[i] > self.state[i+1]:

return False

return Truedef get_next_states(self):

for i in range(2, len(self.state)+1):

upper = self.state[:i]

lower = self.state[i:]

next_state = upper[::-1] + lower

yield PancakeSortingProblem(next_state)

def __hash__(self):

n = max(self.state) + 1

return sum([x*n**i for i, x in enumerate(self.state)])

I think the implementations for is_solution and get_next_states should not be too complicated to follow. is_solution really just checks if an array is sorted. get_next_states takes a state (list) and returns all following states by flipping the upper part of the pancake stack for every feasible position. Let’s try this out:

p = PancakeSortingProblem([4, 2, 1, 3]) # start state = [4, 2, 1, 3]

for next_state in p.get_next_states():

print(next_state)# Output (thanks to the __repr__ method!):

# [2, 4, 1, 3]

# [1, 2, 4, 3]

# [3, 1, 2, 4]

Note: I skipped one of the four next states because the “Flip at position 1” action is basically a “Do nothing” action. It just adds complexity, hence we drop it.

Let’s address the elephant in the room: dat hash. What was I doing there? The goal was to take a state and turn it into an integer, the hash value.

How do I get there? By computing

That’s all I implemented. Basically, I treat the state [4, 2, 1, 3] as the 5-ary (like binary, just with base 5 instead of 2) number 3124 (4213 reversed) and convert it back to the decimal system. This has the effect that two different states have two different hash values (technical detail: as long as the result are less than 2⁶³), which is nice for performance reasons. Let’s leave it at that.

You can also make up another hash function yourself, just try to create something that assigns different states to different hash values most of the time, without using randomness. Doing something like this is technically possible:

def __hash__(self):

return 1

but this results in slower algorithms further down the path, so avoid doing this. Great, we have a working problem class, so we can deal with the solution now!

Defining The Search Problem Solver Class

Let us describe the algorithm in words.

- We take the starting state (in form of a problem object) and put it into a list called

frontier. - We pop a state (i.e. remove it from the list) and check if it is the goal. If not, we expand it.

- We append all of the next states that we haven’t seen so far into

frontier. This prevents us from running in circles. - Then repeat steps 2 and 3 until a goal is found, i.e. pop, check, expand, pop, check, expand, …

Basically, we fill a list (initially filled with the starting state) with all of the states we encounter. This is the list of states to be explored. Whenever this list is empty, there is nothing left to explore anymore. If we did not find the goal until then, there is no solution.

Since we insert every possible state into the list at most once, the algorithm will terminate.

In code, it can look like this:

class SearchProblemSolverDFS:

def solve(self, start_problem):

frontier = [start_problem]

self.backlinks = {start_problem: None}

self.solution = None while frontier and self.solution is None:

current_state = frontier.pop() if current_state.is_solution():

self.solution = current_state for next_state in current_state.get_next_states():

if next_state not in self.backlinks:

self.backlinks[next_state] = current_state

frontier.append(next_state) def print_solution(self):

current_state = self.solution

result = []

while current_state is not None:

result.append(current_state)

current_state = self.backlinks[current_state] return result[::-1]

Some comments about the code:

In the solve method, I also introduced the backlinks dictionary. This has two different purposes:

- Keep track of visited states, as you can see in the line

if next_state not in self.backlinks. - Keep track of how to get to each state, i.e. storing predecessors. The start state does not have a predecessor, hence I initialized it as

{start_problem: None}.

I also created a print_solution method that outputs how to get to the goal state from the start state.

Cool, let’s try it out!

Running The Code

We have done so much work, but just look how easy it is to apply everything now:

four_pancakes = PancakeSortingProblem([4, 2, 1, 3])

solver = SearchProblemSolverDFS()solver.solve(four_pancakes)

solver.print_solution()# Output:

# [[4, 2, 1, 3], [3, 1, 2, 4], [2, 1, 3, 4], [1, 2, 3, 4]]

This corresponds to the solution that we have seen in the picture above. Nice! Try it out with more pancakes, such as with

PancakeSortingProblem([4, 2, 1, 3, 5, 7, 6, 8])

The solution is loooooooooooong, and I will tell you why in a second.

The way we have implemented the SearchProblemSolver is via depth-first search (DFS). It has this name because it favors depth, and does not try to find the shortest solution. It behaves that way because we used a stack data structure to implement frontier . We always insert new states at the end of the list and pop elements from there as well (last-in-first-out or LIFO principle). That’s why new states immediately get explored. Imagine frontier to be a to-do list where newer things have a higher priority — the last thing you have written on the list gets tackled first.

It will take a long time until the states Next (a) and Next (b) get explored, although they are reachable within a single action from the starting state.

We can implement frontier differently, for example as a queue. Using this data structure, we can insert new states at the end of the list (as before) but pop elements from the start of the list (first-in-first-out or FIFO principle).

An inefficient and cheap way to do this is by replacing frontier.pop() with frontier.pop(0) . However, this is a slow operation (O(n) if there are n elements in the list), and using designated data structures to implement queues is better (O(1) then). For references, see here.

One way to do this is by using a deque:

from collections import dequeclass SearchProblemSolverBFS:

def solve(self, start_problem):

frontier = deque([start_problem])

...while frontier and self.solution is None:

current_state = frontier.popleft()...

...

This simple change creates a breadth-first search (BFS), which focuses on the shortest solutions. Let’s try it out:

eight_pancakes = PancakeSortingProblem([4, 2, 1, 3, 5, 7, 6, 8])

solver = SearchProblemSolverBFS()

solver.solve(eight_pancakes)

solver.print_solution()# Output:

#[[4, 2, 1, 3, 5, 7, 6, 8],

# [3, 1, 2, 4, 5, 7, 6, 8],

# [2, 1, 3, 4, 5, 7, 6, 8],

# [1, 2, 3, 4, 5, 7, 6, 8],

# [6, 7, 5, 4, 3, 2, 1, 8],

# [7, 6, 5, 4, 3, 2, 1, 8],

# [1, 2, 3, 4, 5, 6, 7, 8]]

Nice and short! However, the disadvantage of BFS is that it uses more memory, which can be quite a party crasher. There is also a hybrid between BFS and DFS called iterative deepening depth-first search. This finds the shortest solution without wasting too much memory, but it uses more run time.

In this article, we have seen how to solve search problems such as sudokus, sliding puzzles, or the pancake sorting problem systematically using graph search.

The solver that we have written is generic, we only have to create a problem class in which we define how the problem works, i.e.

- what does the goal look like

- and which states we can reach from any given state.

One interesting thing that we did not talk about though is the fact that some actions might be costlier than others. We cannot optimally solve these problems yet because our BFS solver only minimizes the absolute number of steps, meaning that we used an implicit constant cost of 1, for example.

In the case of pancakes, heterogenous costs could mean that maybe flipping the pancake pile at position k has a cost of k, the intuition being that flipping gets more difficult the more pancakes we flip at once. We might want a solution that minimizes the sum of these costs then. There are ways to get an optimal solution in this case as well, and we might take a look into this direction in another article. Keywords for now: Uniform-cost search (aka Dijkstra) and A*.

You can now create more problem classes and solve them without changing our problem solver classes. A simple next problem could be the burnt pancake problem (because why not 🔥🥞). There, the pancakes have a burnt and a non-burnt side. They should not only be in order but also the burnt sides of all pancakes should be at the bottom. You could express a state as something like [[4, 0], [2, 0], [1, 1], [3, 1]], meaning that we have the pancakes [4, 2, 1, 3] again, but the burnt side of pancakes 1 and 3 are on top. You only have to slightly alter the pancake class code for this.

Have fun solving puzzles!

I hope that you learned something new, interesting, and useful today. Thanks for reading!

As the last point, if you

- want to support me in writing more about machine learning and

- plan to get a Medium subscription anyway,

why not do it via this link? This would help me a lot! 😊

To be transparent, the price for you does not change, but about half of the subscription fees go directly to me.

Thanks a lot, if you consider supporting me!

If you have any questions, write me on LinkedIn!