Australian Organisations Need to Build Trust With Consumers Over Data & AI

Global data highlights a concerning trend: Australian organisations are falling into a “trust gap” between what customers expect them to do with data and privacy and what is actually happening.

New data shows that 90% of people want organisations to transform how they manage data and risk. Because regulation has fallen behind, Australian organisations that want to deliver this will need to move faster than the regulatory environment.

Australian enterprises need to take seriously

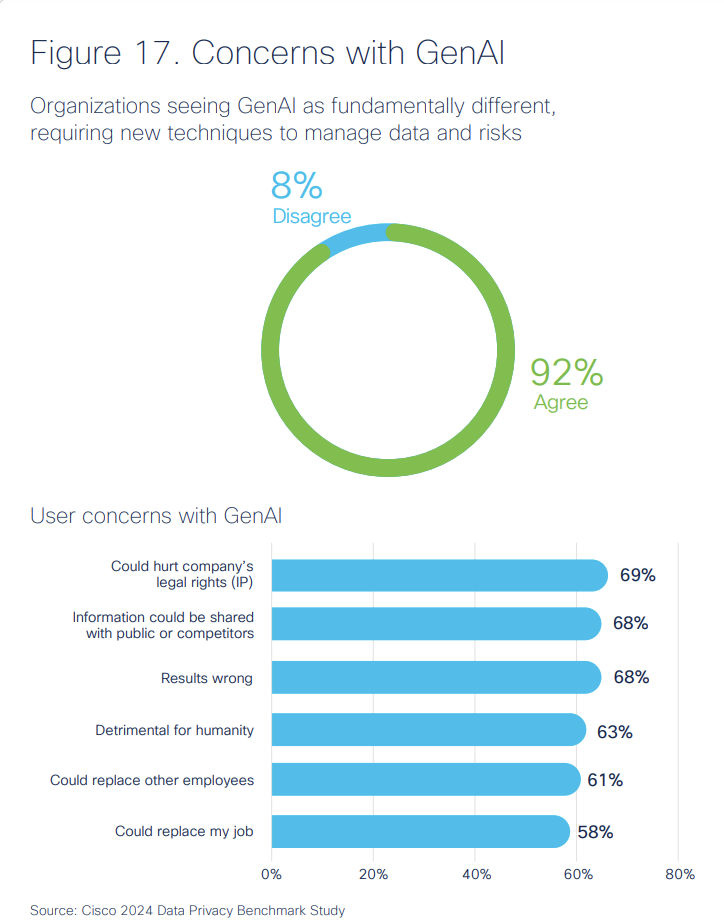

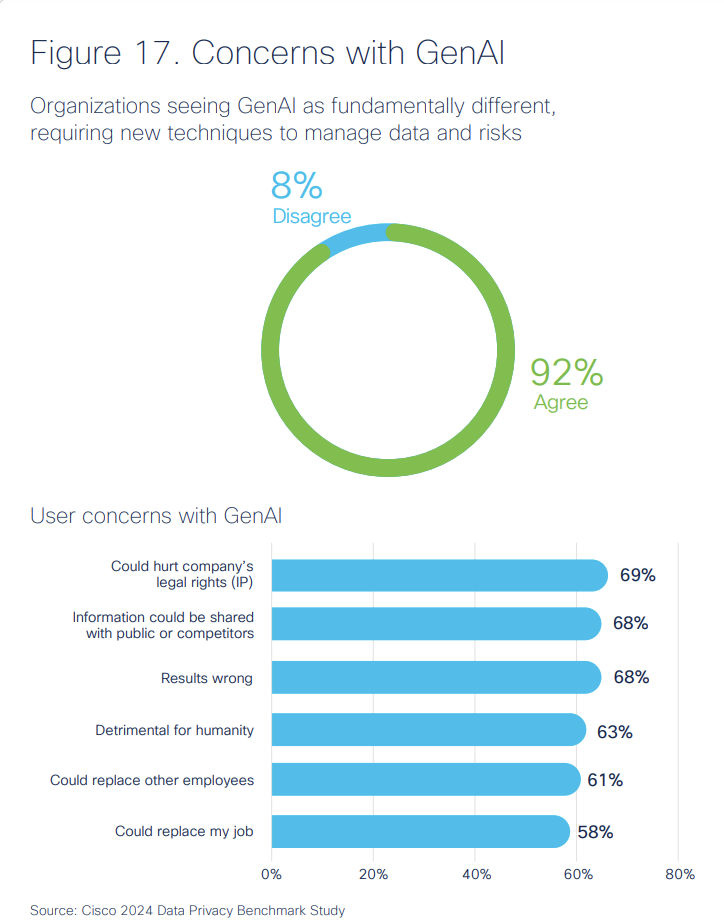

According to a recent major global Cisco study, more than 90% of people believe generative AI requires new techniques to manage data and risk (Figure A). Meanwhile, 69% are concerned with the potential for legal and IP rights to be compromised, and 68% are concerned with the risk of disclosure to the public or competitors.

Essentially, while customers appreciate the value AI can bring them in personalisation and service levels, they’re also uncomfortable with the implications for their privacy if their data is used in AI models.

Around 8% of participants in the Cisco survey were from Australia, though the study doesn’t break down the above concerns by territory.

Australians more likely to violate data security policies despite data privacy concerns

Other research shows that Australians are particularly sensitive to the way organisations use their data. According to research by Quantum Market Research and Porter Novelli, 74% of Australians are concerned about cybercrime. In addition, 45% are concerned about their financial information being taken, and 28% are concerned about their ID documents, such as passports and driver’s licences (Figure B).

However, Australians are also twice as likely as the global average to violate data security policies at work.

As Gartner VP Analyst Nader Hanein said, organisations should be deeply concerned about this breach of customer trust, because customers will happily take their wallets and walk.

“The fact is that consumers today are more than happy to cross the road over to the competition and, in some instances, pay a premium for the same service, if that is where they believe their data and their family’s data is best cared for,” Hanein said.

Voluntary regulation in Australian organisations isn’t great for data privacy

Part of the problem is that, in Australia, doing the right thing around data privacy and AI is largely voluntary.

“From a regulatory perspective, most Australian companies are focused on breach disclosure and reporting, given all the high-profile incidents over the past two years. But when it comes to core privacy aspects, there is little requirement on companies in Australia. Main privacy pillars such as transparency, consumer privacy rights and explicit consent are simply missing,” Hanein said.

Only those Australian organisations that have done business abroad and run into outside regulation have needed to improve; Hanein pointed to the GDPR and New Zealand’s privacy laws as examples. Other organisations will need to make building trust with their customers an internal priority.

Building trust in data use

While data use in AI might be largely unregulated and voluntary in Australia, there are five things the IT team can – and should – champion across the organisation:

- Transparency about data collection and use: Transparency around data collection can be achieved through clear and easy-to-understand privacy policies, consent forms and opt-out options.

- Accountability with data governance: Everyone in the organisation should recognise the importance of data quality and integrity in data collection, processing and analysis, and there should be policies in place to reinforce behaviour.

- High data quality and accuracy: Data that is collected and used should be accurate, as inaccurate data can make AI models unreliable, which in turn undermines trust in data security and management.

- Proactive incident detection and response: An inadequate incident response plan can damage both the organisation’s reputation and its data.

- Customer control over their own data: All services and features that involve data collection should allow the customer to access, manage and delete their data on their own terms and when they want to.

Self-regulation now to prepare for the future

Currently, data privacy, including the data collected and used in AI models, is governed by old regulations created before AI models were even in use. As a result, the only regulation that Australian enterprises apply to this kind of data use is self-determined.

However, as Gartner’s Hanein said, there is a lot of consensus about the right way forward for the management of data when used in these new and transformative ways.

“Back in February 2023, the Privacy Act Review Report was published with a lot of good recommendations intended to modernise data protection in Australia,” Hanein said. “Seven months later in September 2023, the Federal Government responded. Of the 116 proposals in the original report the government responded favourably to 106.”

For now, some executives and boards may baulk at the idea of self-imposed regulation, but an organisation that can demonstrate it is taking these steps will earn a stronger reputation among customers and be seen as taking their concerns around data use seriously.

Meanwhile, some within the organisation might worry that self-regulation will impede innovation. Hanein’s response: “Would you have delayed the introduction of seat belts, crumple zones and air bags for fear of having these aspects slow down developments in the automotive industry?”

It is now time for IT professionals to take charge and start bridging that trust gap.
