Building a Math Application with LangChain Agents

A tutorial on why LLMs struggle with math, and how to resolve these limitations using LangChain Agents, OpenAI and Chainlit

In this tutorial, I will demonstrate how to use LangChain agents to create a custom Math application utilising OpenAI’s GPT-3.5 model. For the application frontend, I will be using Chainlit, an easy-to-use open-source Python framework. This generative math application, let’s call it “Math Wiz”, is designed to help users with their math or reasoning/logic questions.

Why do LLMs struggle with Math?

Large Language Models (LLMs) are known to perform poorly on math and reasoning tasks, and this weakness is shared by many language models. There are a few different reasons for this:

- Lack of training data: One reason is the limitations of their training data. Language models, trained on vast text datasets, may lack sufficient mathematical problems and solutions. This can lead to misinterpretations of numbers, forgetting important calculation steps, and a lack of quantitative reasoning skills.

- Lack of numeric representations: Another reason is that LLMs are designed to understand and generate text, operating on tokens instead of numeric values. Most text-based tasks can have multiple reasonable answers. However, math problems typically have only one correct solution.

- Generative nature: Due to the generative nature of these language models, generating consistently accurate and precise answers to math questions can be challenging for LLMs.
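To make the point about tokens concrete, here is a toy sketch. The `toy_tokenize` function is hypothetical and is not the tokenizer used by any OpenAI model; it only splits digit runs into chunks of up to three characters, to show how a number can reach a model as unrelated pieces rather than as a single value.

```python
import re

def toy_tokenize(text: str, max_digits: int = 3) -> list[str]:
    """Split text into word, symbol and digit-chunk tokens (toy scheme, not real BPE)."""
    tokens: list[str] = []
    for piece in re.findall(r"\d+|[A-Za-z]+|[^\sA-Za-z\d]", text):
        if piece.isdigit():
            # Break long digit runs into fixed-size chunks, left to right.
            tokens.extend(piece[i:i + max_digits] for i in range(0, len(piece), max_digits))
        else:
            tokens.append(piece)
    return tokens

print(toy_tokenize("1234567 + 89"))  # ['123', '456', '7', '+', '89']
```

In this toy scheme the number 1234567 arrives as three separate pieces, so nothing in the input itself encodes its place value.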

This makes the “Math problem” the perfect candidate for utilising LangChain agents. Agents are systems that use a language model to interact with other tools to break down a complex problem (more on this later). The code for this tutorial is available on my GitHub.

Environment Setup

We can start off by creating a new conda environment with python=3.11.

conda create -n math_assistant python=3.11

Activate the environment:

conda activate math_assistant

Next, let’s install all necessary libraries:

pip install -r requirements.txt

Sign up at OpenAI and obtain your own API key to start making calls to the GPT model. Once you have the key, create a .env file in your repository and store the OpenAI key:

OPENAI_API_KEY="your_openai_api_key"
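A small check at startup can catch a missing or placeholder key early. This is a minimal sketch using only the standard library; `require_api_key` is a hypothetical helper and not part of LangChain or python-dotenv, and it assumes the .env file has already been loaded into the environment.

```python
import os
from collections.abc import Mapping

def require_api_key(env: Mapping[str, str] = os.environ,
                    name: str = "OPENAI_API_KEY") -> str:
    """Return the key from the environment or raise a clear error."""
    key = env.get(name, "").strip()
    # Treat the placeholder from the example .env file as "not set".
    if not key or key == "your_openai_api_key":
        raise RuntimeError(f"{name} is not set; add it to your .env file")
    return key

print(require_api_key({"OPENAI_API_KEY": "sk-example"}))  # sk-example
```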

Application Flow

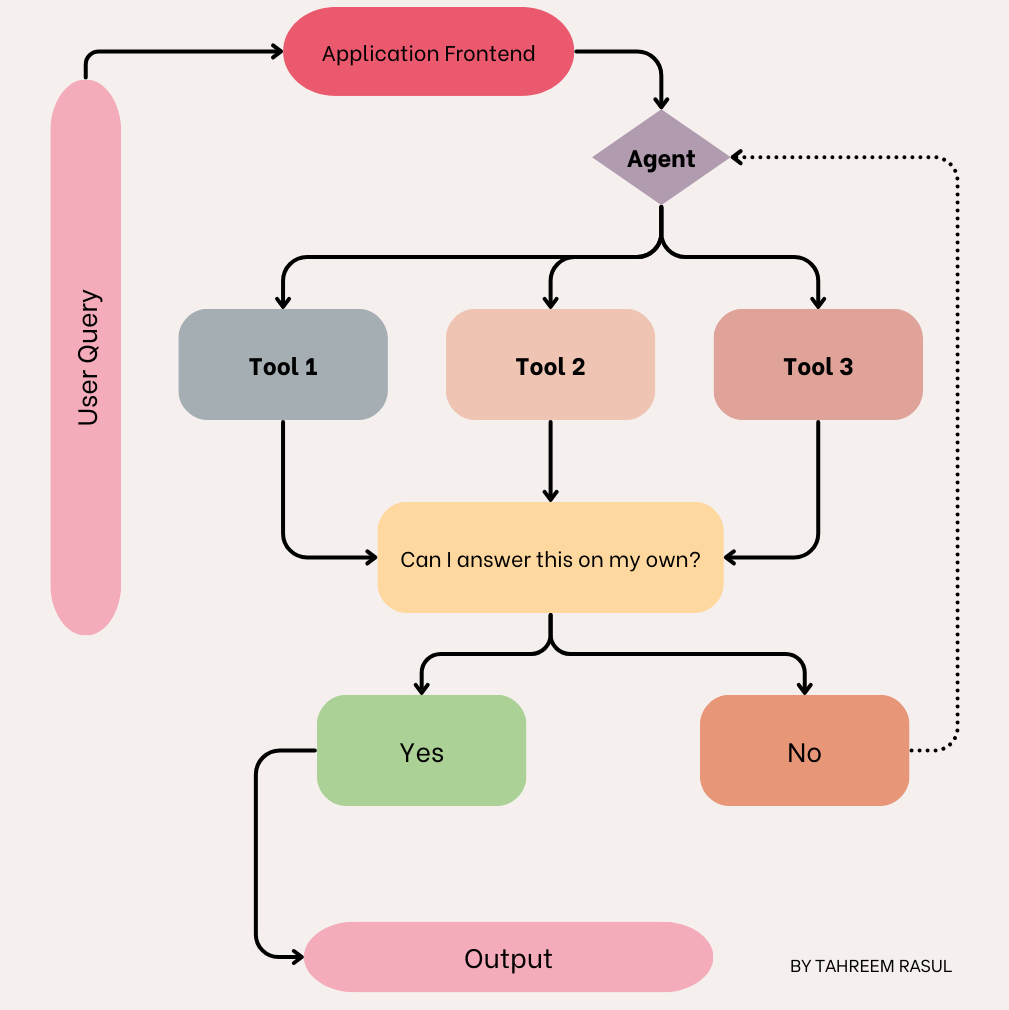

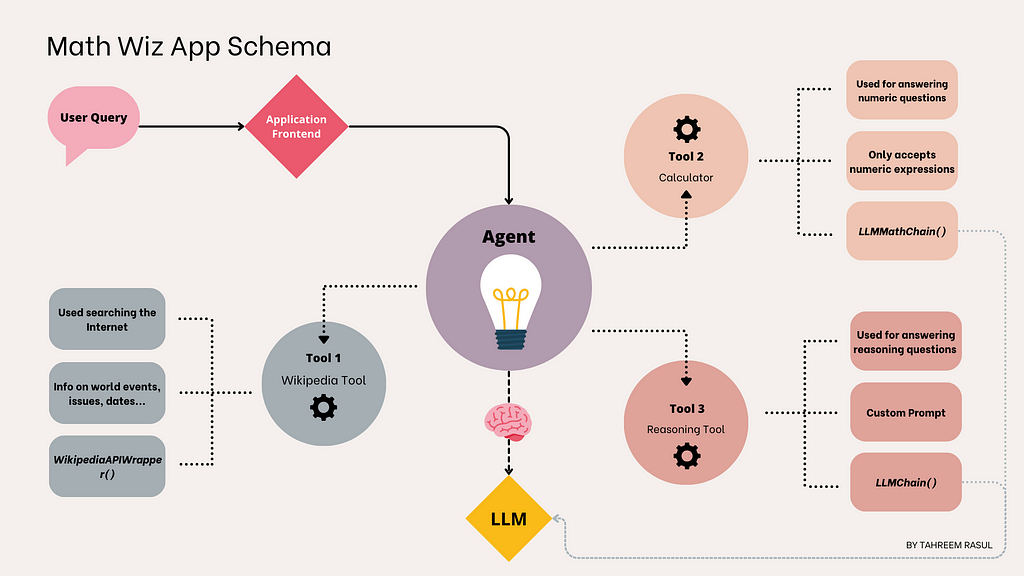

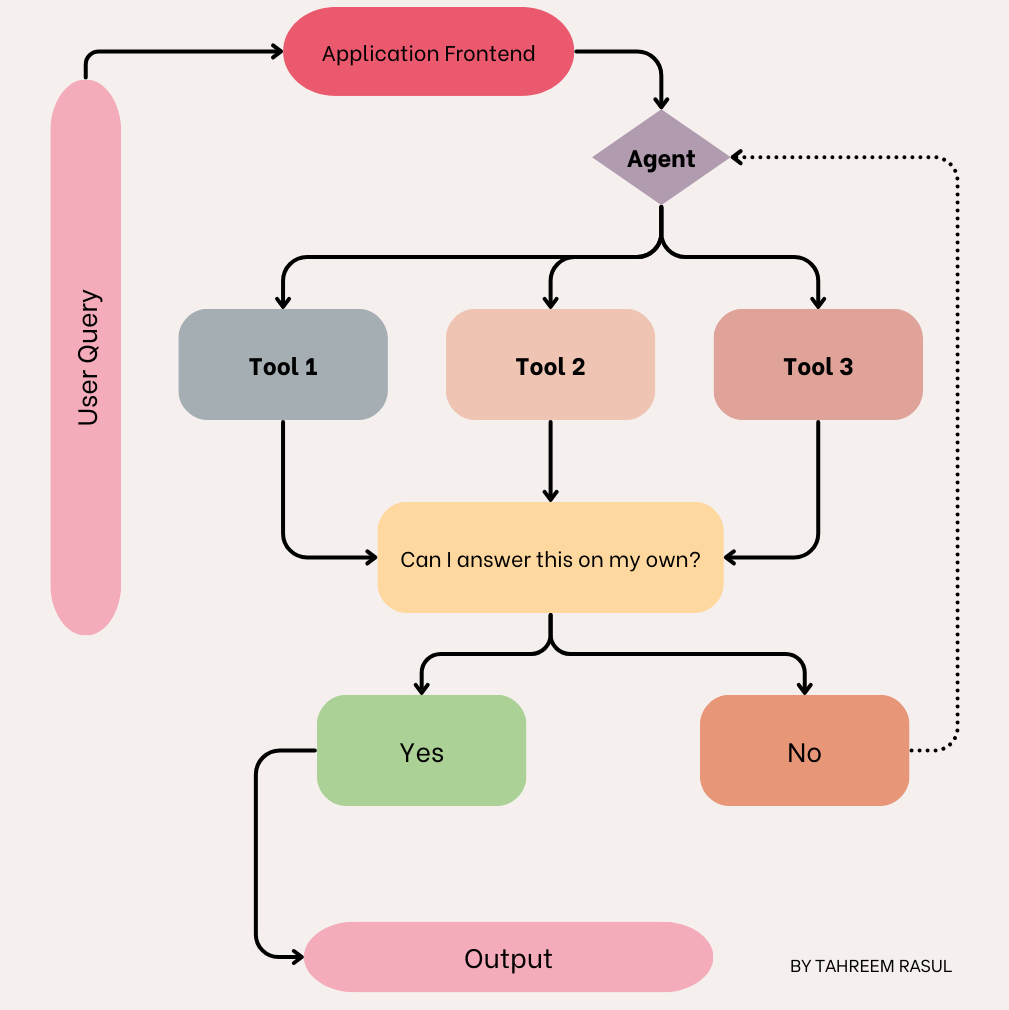

The application flow for Math Wiz is outlined in the accompanying flowchart. The agent in our pipeline will have a set of tools at its disposal that it can use to answer a user query. The Large Language Model (LLM) serves as the “brain” of the agent, guiding its decisions. When a user submits a question, the agent uses the LLM to select the most appropriate tool or a combination of tools to provide an answer. If the agent determines it needs multiple tools, it will also specify the order in which the tools are used.

The agent for our Math Wiz app will be using the following tools:

- Wikipedia Tool: this tool will be responsible for fetching the latest information from Wikipedia using the Wikipedia API. While there are paid tools and APIs available that can be integrated inside LangChain, I will be using Wikipedia as the app’s online source of information.

- Calculator Tool: this tool will be responsible for solving a user’s math queries. This includes anything involving numerical calculations. For example, if a user asks what the square root of 4 is, this tool would be appropriate.

- Reasoning Tool: the final tool in our application setup will be a reasoning tool, responsible for tackling logical/reasoning-based user queries. Any mathematical word problems should also be handled with this tool.

Now that we have a rough application design, we can begin thinking about building this application.

Understanding LangChain Agents

LangChain agents are designed to enhance interaction with language models by providing an interface for more complex and interactive tasks. We can think of an agent as an intermediary between users and a large language model. Agents seek to break down a seemingly complex user query, that our LLM might not be able to tackle on its own, into easier, actionable steps.

In our application flow, we defined a few different tools that we would like to use for our math application. Based on the user input, the agent should decide which of these tools to use. If a tool is not required, it should not be used. LangChain agents can simplify this for us. These agents use a language model to choose a sequence of actions to take. Essentially, the LLM acts as the “brain” of the agent, guiding it on which tool to use for a particular query, and in which order. This is different from LangChain chains, where the sequence of actions is hardcoded. LangChain offers a wide set of tools that can be integrated with an agent. These include, but are not limited to, online search tools, API-based tools, and chain-based tools. For more information on LangChain agents and their types, see the LangChain documentation.
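The difference between a chain and an agent can be sketched without any LLM at all. In the example below, `run_chain`, `run_agent`, the two toy tools and `scripted_choice` are all hypothetical names; `scripted_choice` stands in for the LLM's decision. A real ReAct-style agent decides one step at a time after seeing each tool's output, which this sketch collapses into a single up-front plan.

```python
from typing import Callable

Step = Callable[[str], str]

def run_chain(steps: list[Step], query: str) -> str:
    """Chain: every step runs, always in the same hardcoded order."""
    for step in steps:
        query = step(query)
    return query

def run_agent(tools: dict[str, Step],
              choose: Callable[[str], list[str]],
              query: str) -> str:
    """Agent: a decision function picks which tools run, and in which order."""
    for name in choose(query):
        query = tools[name](query)
    return query

# Toy tools; the real ones would call Wikipedia or an LLM.
tools: dict[str, Step] = {
    "lookup": lambda q: q.replace("a dozen", "12"),
    "double": lambda q: str(int(q) * 2),
}

def scripted_choice(query: str) -> list[str]:
    """Stand-in for the LLM: skip the lookup when it is not needed."""
    return ["lookup", "double"] if "dozen" in query else ["double"]

print(run_chain([tools["lookup"], tools["double"]], "a dozen"))  # 24
print(run_agent(tools, scripted_choice, "5"))                    # 10
```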

Step-by-Step Implementation

Step 1

Create a chatbot.py script and import the necessary dependencies:

from langchain_openai import OpenAI
from langchain.chains import LLMMathChain, LLMChain
from langchain.prompts import PromptTemplate
from langchain_community.utilities import WikipediaAPIWrapper
from langchain.agents.agent_types import AgentType
from langchain.agents import Tool, initialize_agent
from dotenv import load_dotenv

load_dotenv()

Step 2

Next, we will define our OpenAI-based Language Model. LangChain offers the langchain-openai package, which can be used to define an instance of the OpenAI model. We will be using the gpt-3.5-turbo-instruct model from OpenAI. The load_dotenv() call above has already loaded the API key into the environment, so you do not need to pass it explicitly here:

llm = OpenAI(model='gpt-3.5-turbo-instruct', temperature=0)

We will use this LLM both within our math and reasoning chains and as the decision maker for our agent.

Step 3

When constructing your own agent, you will need to provide it with a list of tools that it can use. Besides the actual function that is called, the Tool consists of a few other parameters:

- name (str), is required and must be unique within a set of tools provided to an agent

- description (str), is optional but recommended, as it is used by an agent to determine tool use
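The role of these two parameters can be illustrated with a framework-free sketch. `ToolSpec` and `describe_tools` are hypothetical stand-ins, not LangChain classes; the assumption is that an agent prompt ends up listing each tool as a name followed by its description, which is why names must be unique and descriptions should be informative.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical stand-in for a tool: a name, a callable, and a description."""
    name: str
    func: Callable[[str], str]
    description: str = ""

def describe_tools(tools: list[ToolSpec]) -> str:
    """Render 'name: description' lines, rejecting duplicate names."""
    names = [t.name for t in tools]
    duplicates = {n for n in names if names.count(n) > 1}
    if duplicates:
        raise ValueError(f"duplicate tool names: {sorted(duplicates)}")
    return "\n".join(f"{t.name}: {t.description}" for t in tools)

example_tools = [
    ToolSpec("Calculator", lambda q: q, "Useful for math expressions."),
    ToolSpec("Wikipedia", lambda q: q, "Useful for general facts."),
]
print(describe_tools(example_tools))
```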

We will now create our three tools. The first one will be the online tool using the Wikipedia API wrapper:

wikipedia = WikipediaAPIWrapper()

wikipedia_tool = Tool(
    name="Wikipedia",
    func=wikipedia.run,
    description=(
        "A useful tool for searching the Internet to find information on "
        "world events, issues, dates, years, etc. Worth using for general "
        "topics. Use precise questions."
    ),
)

In the code above, we have defined an instance of the Wikipedia API wrapper. Afterwards, we have wrapped it inside a LangChain Tool, with the name, function and description.

Next, let’s define the tool that we will be using for calculating any numerical expressions. LangChain offers the LLMMathChain which uses the numexpr Python library to calculate mathematical expressions. It is also important that we clearly define what this tool would be used for. The description can be helpful for the agent in deciding which tool to use from a set of tools for a particular user query. For our chain-based tools, we will be using the Tool.from_function() method.

problem_chain = LLMMathChain.from_llm(llm=llm)

math_tool = Tool.from_function(
    name="Calculator",
    func=problem_chain.run,
    description=(
        "Useful for when you need to answer questions about math. This tool "
        "is only for math questions and nothing else. Only input math "
        "expressions."
    ),
)
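The idea behind the calculator tool is that the LLM only translates the question into an expression, and ordinary code does the arithmetic. The sketch below illustrates that second half with the standard-library `ast` module as a stand-in for numexpr; `evaluate` is a hypothetical helper and is not how LLMMathChain is implemented.

```python
import ast
import operator

_BINARY = {
    ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
    ast.Div: operator.truediv, ast.Pow: operator.pow,
}
_UNARY = {ast.USub: operator.neg, ast.UAdd: operator.pos}

def evaluate(expression: str) -> float:
    """Evaluate a purely numeric expression; reject anything else."""
    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    try:
        tree = ast.parse(expression, mode="eval")
    except SyntaxError as exc:
        raise ValueError(f"not a math expression: {expression!r}") from exc
    return walk(tree.body)

print(evaluate("4 ** 0.5"))  # 2.0
```

Because the evaluator only accepts numbers and operators, free text raises a `ValueError`, which is why the tool description asks the agent to pass math expressions only.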

Finally, we will define the tool for logic/reasoning-based queries. We will first create a prompt that instructs the model to perform the specific task. Then we will create a simple LLMChain for this tool, passing it the LLM and the prompt.

word_problem_template = """You are a reasoning agent tasked with solving
the user's logic-based questions. Logically arrive at the solution, and be
factual. In your answers, clearly detail the steps involved and give the
final answer. Provide the response in bullet points.
Question {question} Answer"""

math_assistant_prompt = PromptTemplate(
    input_variables=["question"],
    template=word_problem_template
)

word_problem_chain = LLMChain(llm=llm, prompt=math_assistant_prompt)

word_problem_tool = Tool.from_function(
    name="Reasoning Tool",
    func=word_problem_chain.run,
    description="Useful for when you need to answer logic-based/reasoning questions.",
)
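To see what the reasoning chain actually sends to the model, the substitution step can be sketched with plain `str.format`, used here as a stand-in for the prompt template. `TEMPLATE` is a shortened copy of the prompt and `render_prompt` is a hypothetical helper.

```python
TEMPLATE = (
    "You are a reasoning agent tasked with solving the user's logic-based "
    "questions. Provide the response in bullet points.\n"
    "Question {question} Answer"
)

def render_prompt(question: str) -> str:
    """Substitute the user's question into the single {question} slot."""
    return TEMPLATE.format(question=question)

print(render_prompt("If all cats are animals, is Tom the cat an animal?"))
```

The model receives the fully rendered text and continues it after the word "Answer".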

Step 4

We will now initialize our agent with the tools we have created above. We will also specify the LLM to help it choose which tools to use and in what order:

agent = initialize_agent(
    tools=[wikipedia_tool, math_tool, word_problem_tool],
    llm=llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=False,
    handle_parsing_errors=True
)

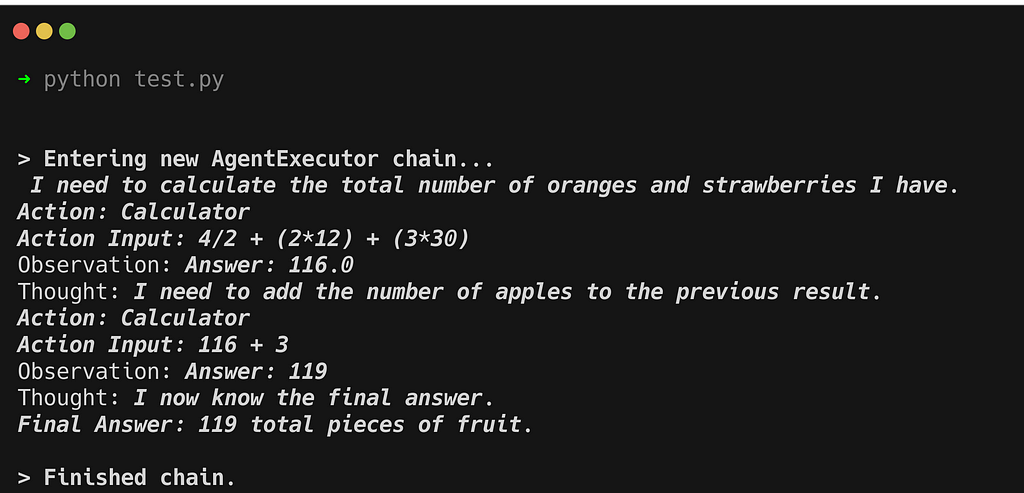

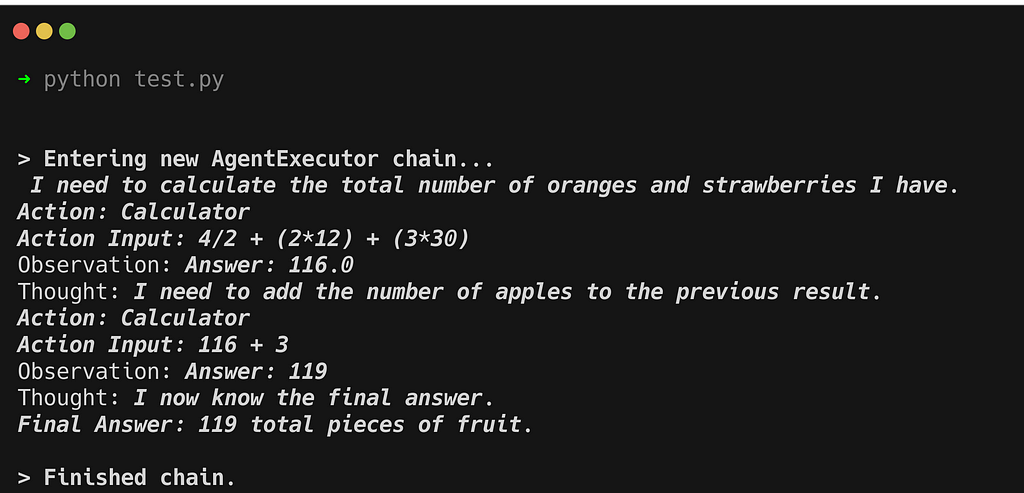

print(agent.invoke(
    {"input": "I have 3 apples and 4 oranges. I give half of my oranges "
              "away and buy two dozen new ones, along with three packs of "
              "strawberries. Each pack of strawberry has 30 strawberries. "
              "How many total pieces of fruit do I have at the end?"}))
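Under the hood, a ReAct-style agent has to turn the model's free text into either a tool call or a final answer, and `handle_parsing_errors=True` matters because that text is sometimes malformed. The sketch below assumes an "Action: / Action Input: / Final Answer:" text format; `parse_step` is a hypothetical helper and not LangChain's own parser.

```python
import re

ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)", re.DOTALL)

def parse_step(llm_output: str) -> tuple[str, str]:
    """Return ('final', answer) or (tool_name, tool_input) from model text."""
    if "Final Answer:" in llm_output:
        return "final", llm_output.split("Final Answer:", 1)[1].strip()
    match = ACTION_RE.search(llm_output)
    if match is None:
        # A real agent could feed this error back to the model and retry.
        raise ValueError("could not parse a tool call from the model output")
    return match.group(1).strip(), match.group(2).strip()

step = parse_step(
    "Thought: I need arithmetic.\nAction: Calculator\nAction Input: 3 + 2 + 24 + 90"
)
print(step)  # ('Calculator', '3 + 2 + 24 + 90')
```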

Creating Chainlit Application

We will be using Chainlit, an open-source Python framework, to build our application. With Chainlit, you can build conversational AI applications with a few simple lines of code. To get a deeper understanding of Chainlit functionalities and how the app is set up, you can take a look at my article here:

Building a Chatbot Application with Chainlit and LangChain

We will be using two decorator functions for our application. These will be the @cl.on_chat_start and the @cl.on_message decorator functions. @cl.on_chat_start would be responsible for wrapping all code that should be executed when a user session is initiated. @cl.on_message will have the code bits that we would like to execute when a user sends in a query.

Let’s restructure our chatbot.py script with these two decorator functions. Let’s begin by importing the chainlit package to our chatbot.py script:

import chainlit as cl

Next, let’s write the function decorated with @cl.on_chat_start. We will be adding our LLM, tools and agent initialization code to this function. We will store our agent in a variable inside the user session, to be retrieved when a user sends in a message.

@cl.on_chat_start
def math_chatbot():
    llm = OpenAI(model='gpt-3.5-turbo-instruct', temperature=0)

    # prompt for reasoning based tool
    word_problem_template = """You are a reasoning agent tasked with solving the user's logic-based questions. Logically arrive at the solution, and be factual. In your answers, clearly detail the steps involved and give the final answer. Provide the response in bullet points. Question {question} Answer"""

    math_assistant_prompt = PromptTemplate(
        input_variables=["question"],
        template=word_problem_template
    )

    # chain for reasoning based tool
    word_problem_chain = LLMChain(llm=llm, prompt=math_assistant_prompt)

    # reasoning based tool
    word_problem_tool = Tool.from_function(
        name="Reasoning Tool",
        func=word_problem_chain.run,
        description="Useful for when you need to answer logic-based/reasoning questions."
    )

    # calculator tool for arithmetics
    problem_chain = LLMMathChain.from_llm(llm=llm)
    math_tool = Tool.from_function(
        name="Calculator",
        func=problem_chain.run,
        description="Useful for when you need to answer numeric questions. This tool is only for math questions and nothing else. Only input math expressions, without text",
    )

    # Wikipedia Tool
    wikipedia = WikipediaAPIWrapper()
    wikipedia_tool = Tool(
        name="Wikipedia",
        func=wikipedia.run,
        description="A useful tool for searching the Internet to find information on world events, issues, dates, "
                    "years, etc. Worth using for general topics. Use precise questions.",
    )

    # agent
    agent = initialize_agent(
        tools=[wikipedia_tool, math_tool, word_problem_tool],
        llm=llm,
        agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
        verbose=False,
        handle_parsing_errors=True
    )

    # store the agent in the user session so the message handler can reuse it
    cl.user_session.set("agent", agent)

Next, let’s define the wrapper function for the @cl.on_message decorator. This will contain the code for when a user sends a query to our app. We will first be retrieving the agent we set at the start of the session, and then call it with our user query asynchronously.

@cl.on_message
async def process_user_query(message: cl.Message):
    agent = cl.user_session.get("agent")

    response = await agent.acall(message.content,
                                 callbacks=[cl.AsyncLangchainCallbackHandler()])

    await cl.Message(response["output"]).send()
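The pattern used by the two handlers, building the agent once per session and reusing it for every message, can be tried out without Chainlit or an API key. In this sketch `SessionStore` and `EchoAgent` are hypothetical stand-ins for the Chainlit user session and the LangChain agent.

```python
import asyncio
from typing import Any

class SessionStore:
    """Hypothetical per-user store, standing in for the framework's session."""
    def __init__(self) -> None:
        self._data: dict[str, Any] = {}

    def set(self, key: str, value: Any) -> None:
        self._data[key] = value

    def get(self, key: str) -> Any:
        return self._data.get(key)

class EchoAgent:
    """Hypothetical agent with an async call that simply echoes the query."""
    async def acall(self, query: str) -> dict[str, str]:
        return {"input": query, "output": f"echo: {query}"}

session = SessionStore()

def on_chat_start() -> None:
    session.set("agent", EchoAgent())      # built once per session

async def on_message(content: str) -> str:
    agent = session.get("agent")           # reused for every message
    response = await agent.acall(content)
    return response["output"]

on_chat_start()
print(asyncio.run(on_message("2 + 2")))  # echo: 2 + 2
```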

We can run our application using:

chainlit run chatbot.py

The application should be available at http://localhost:8000.

Let’s also edit the chainlit.md file in our repo. This is created automatically when you run the above command.

# Welcome to Math Wiz! 🤖

Hi there! 👋 I am a reasoning tool to help you with your math or logic-based reasoning questions. How can I help today?

Refresh the browser tab for the changes to take effect.

Demo

A demo for the app can be viewed here:

Testing and Validation

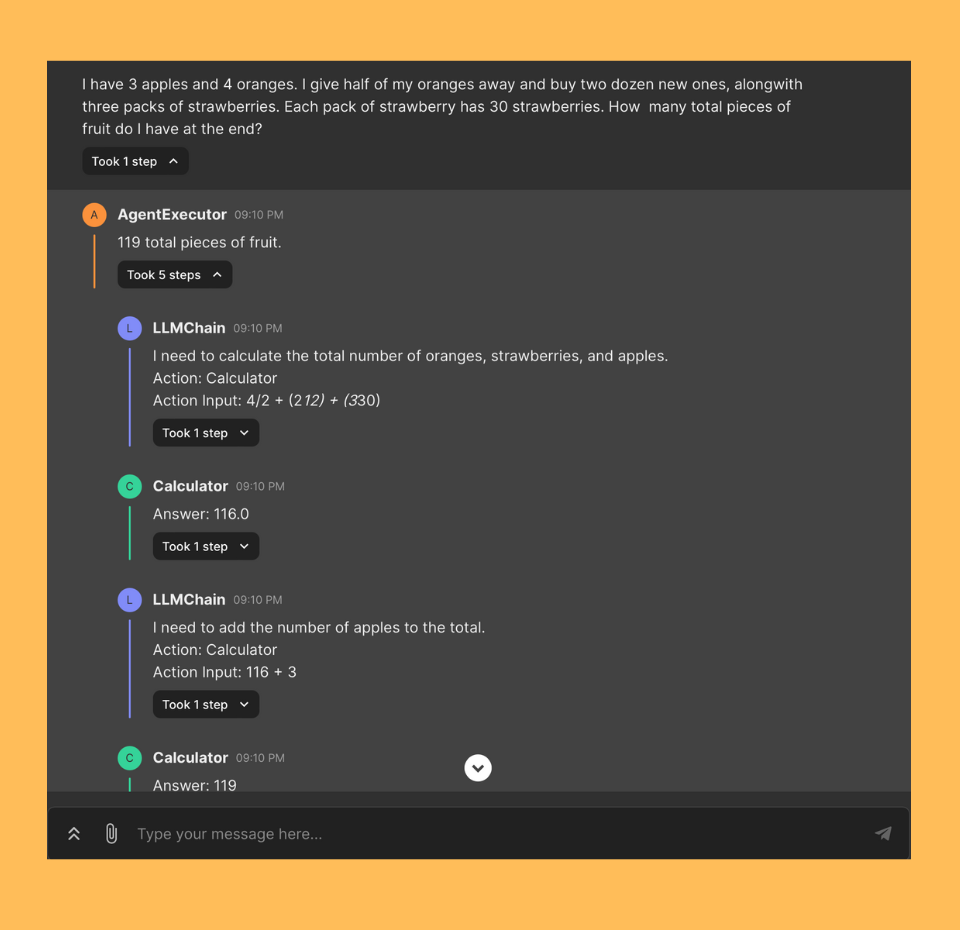

Let’s now validate the performance of our bot. We have not integrated any memory into our bot, so each query is handled as its own independent call. Let’s ask our app a few math questions. For comparison, I am attaching screenshots of each response for the same query from both ChatGPT 3.5 and our Math Wiz app.

Arithmetic Questions

Question 1

What is the cube root of 625?

# correct answer ≈ 8.5499

Our Math Wiz app was able to answer correctly. However, ChatGPT’s response is incorrect.

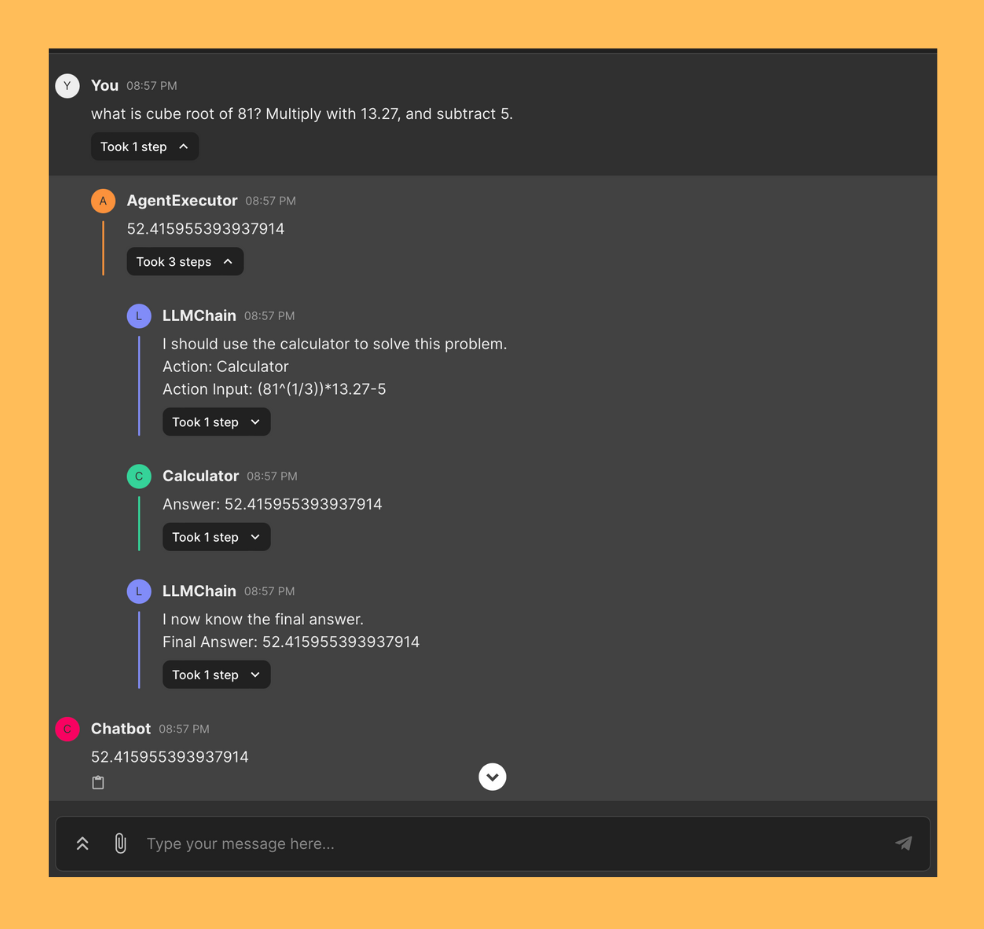

Question 2

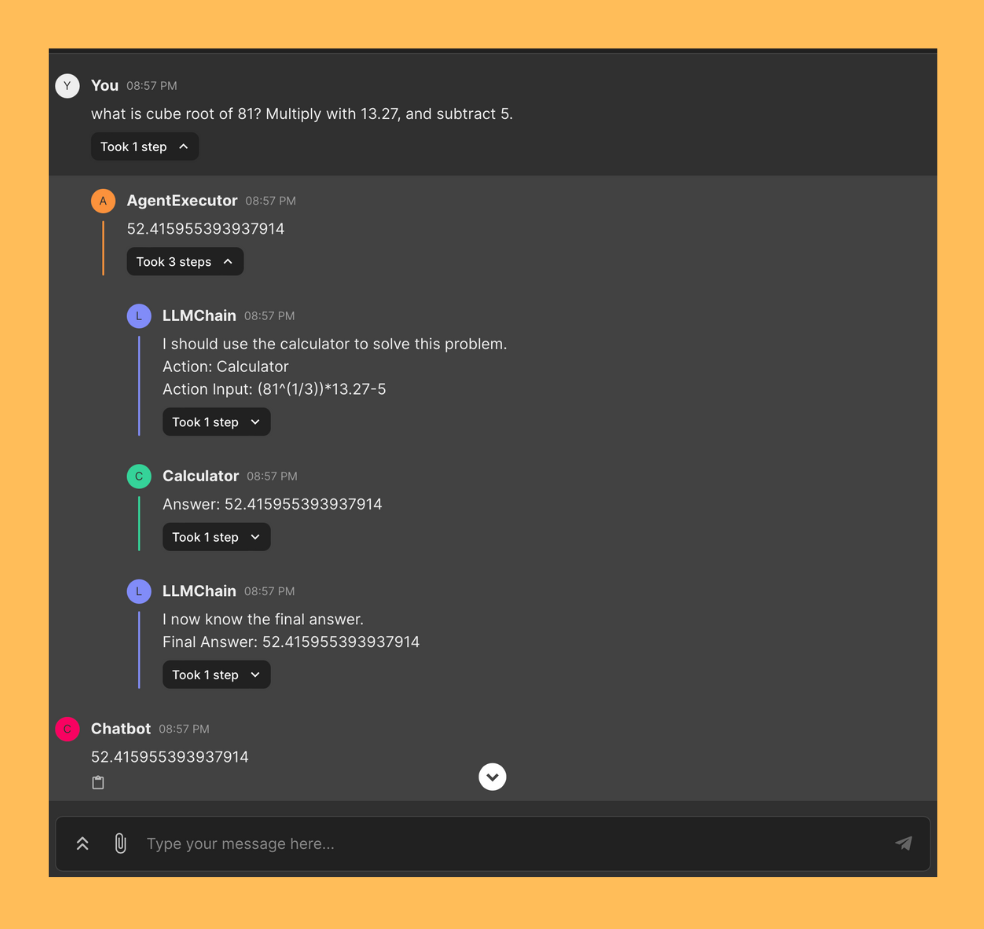

what is cube root of 81? Multiply with 13.27, and subtract 5.

# correct answer ≈ 52.4160

Our Math Wiz app was able to correctly answer this question too. However, once again, ChatGPT’s response isn’t correct. Occasionally, ChatGPT can answer math questions correctly, but this is subject to prompt engineering and multiple inputs.
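Both arithmetic questions can be checked independently with plain Python floating-point arithmetic. This is only a verification sketch; `cube_root` is a hypothetical helper name.

```python
def cube_root(x: float) -> float:
    """Real cube root of a non-negative number."""
    return x ** (1 / 3)

q1 = cube_root(625)                 # Question 1
q2 = cube_root(81) * 13.27 - 5      # Question 2

print(f"{q1:.4f}")  # 8.5499
print(f"{q2:.4f}")  # 52.4160
```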

Reasoning Questions

Question 1

Let’s ask our app a few reasoning/logic questions. Some of these questions have an arithmetic component to them. I’d expect the agent to decide which tool to use in each case.

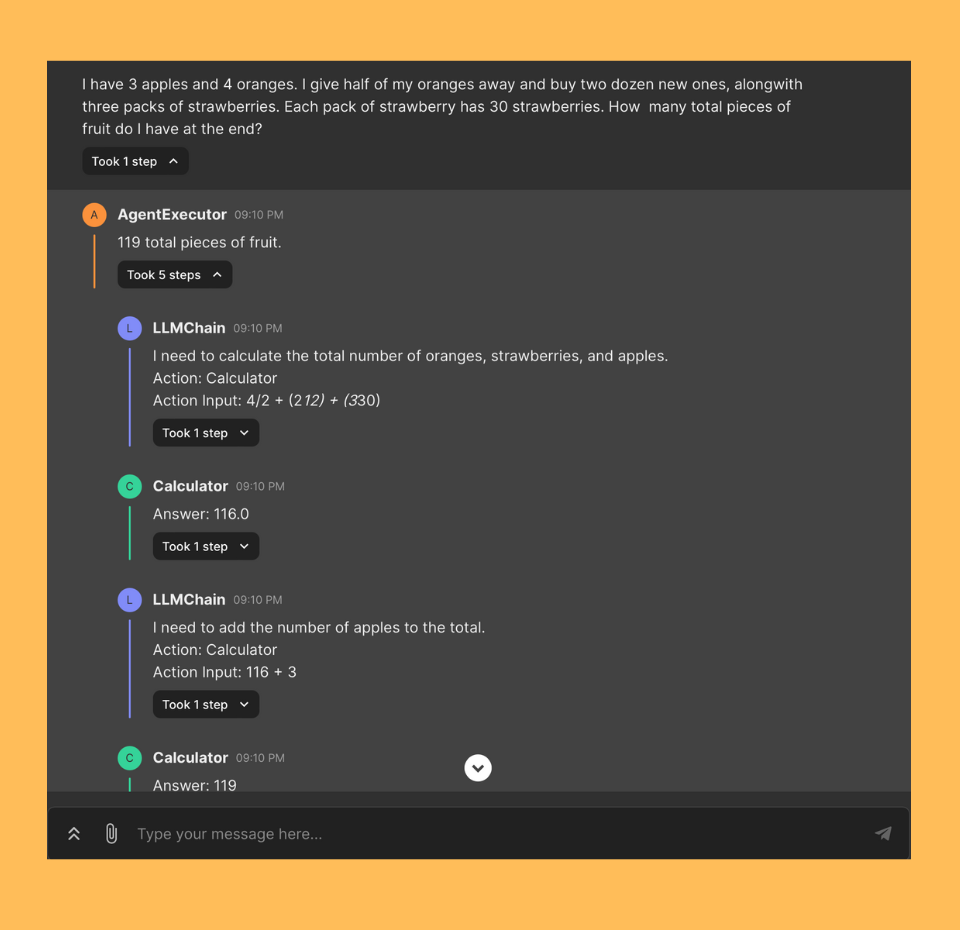

I have 3 apples and 4 oranges. I give half of my oranges away and buy two
dozen new ones, along with three packs of strawberries. Each pack of
strawberry has 30 strawberries. How many total pieces of fruit do I have at
the end?

# correct answer = 3 + 2 + 24 + 90 = 119

Our Math Wiz app was able to correctly answer this question. However, ChatGPT’s response is incorrect. It not only complicated the reasoning steps unnecessarily but also failed to reach the correct answer. However, on a separate occasion, ChatGPT was able to answer this question correctly. This is of course unreliable.
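The expected answer can be traced step by step in a few lines of Python. The variable names are illustrative only.

```python
apples = 3
oranges = 4
oranges -= oranges // 2      # give half of the oranges away, leaving 2
oranges += 2 * 12            # buy two dozen new ones
strawberries = 3 * 30        # three packs of 30 strawberries each

total = apples + oranges + strawberries
print(total)  # 119
```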

Question 2

Steve's sister is 10 years older than him. Steve was born when the Cold War
ended. When was Steve's sister born?

# correct answer = 1991 - 10 = 1981

Our Math Wiz app was able to correctly answer this question. ChatGPT’s response is once again incorrect. Even though it was able to correctly figure out the year the Cold War ended, it messed up the calculation in the mathematical portion. Since the sister is 10 years older, her birth year should be found by subtracting 10 from the year that Steve was born. ChatGPT performed an addition instead, signifying a lack of reasoning ability.

Question 3

give me the year when Tom Cruise's Top Gun released raised to the power 2

# correct answer = 1986**2 = 3944196

Our Math Wiz app was able to correctly answer this question. ChatGPT’s response is once again incorrect. Even though it was able to correctly figure out the release date of the film, the final calculation was incorrect.
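The last two questions combine a fact lookup with a calculation, so the arithmetic half is easy to verify once the facts are fixed. The sketch below takes 1991 as the end of the Cold War, as in the answer above, and 1986 as the release year of Top Gun.

```python
cold_war_end = 1991                  # year Steve was born
sister_birth = cold_war_end - 10     # an older sibling has an earlier birth year

top_gun_release = 1986
squared = top_gun_release ** 2

print(sister_birth)  # 1981
print(squared)       # 3944196
```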

Conclusion & Next Steps

In this tutorial, we used LangChain agents and tools to create a math solver that can also tackle a user’s reasoning/logic questions. Our Math Wiz app answered all of the test questions correctly, whereas most of the answers given by ChatGPT were incorrect. This is a great first step in building the tool. However, the LLMMathChain can fail when the input it receives contains string-based text rather than a pure math expression. This can be tackled in a few different ways, such as creating error-handling utilities for your code, adding post-processing logic for the LLMMathChain, or using custom prompts. You could also increase the efficacy of the tool by including a search tool for more sophisticated and accurate results, since Wikipedia might not always have up-to-date information. You can find the code from this tutorial on my GitHub.
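One way to approach the error-handling idea is to wrap a tool so that a failure comes back as text the agent can react to, instead of an exception that ends the run. This is a framework-agnostic sketch: `safe_tool` and `add_numbers` are hypothetical, and `add_numbers` is only a toy stand-in for the calculator chain.

```python
from typing import Callable

def safe_tool(func: Callable[[str], str], hint: str) -> Callable[[str], str]:
    """Wrap a tool so failures are returned as a message rather than raised."""
    def wrapper(tool_input: str) -> str:
        try:
            return func(tool_input)
        except (ValueError, ZeroDivisionError) as exc:
            return f"Tool error: {exc}. {hint}"
    return wrapper

def add_numbers(expression: str) -> str:
    """Toy calculator: sums terms separated by '+', rejecting non-numeric text."""
    return str(sum(float(term) for term in expression.split("+")))

calculator = safe_tool(add_numbers, hint="Only pass numeric expressions.")

print(calculator("3 + 2 + 24 + 90"))  # 119.0
print(calculator("3 apples + 2"))     # starts with "Tool error:"
```

The returned hint gives the agent a chance to reformulate its input on the next step.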


Building a Math Application with LangChain Agents was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
