Computer Vision 101 – DZone

First Steps and Evolution

Imagine a world where machines can not only see but also understand, where their “eyes” are powered by artificial intelligence and can recognize objects and patterns as adeptly as the human eye. Thanks to the evolution of artificial intelligence, particularly deep learning and neural networks, we find ourselves at the threshold of this breathtaking reality.

Computer Vision, a field that traces its origins to 1959 and the advent of the first digital image scanner, has undergone a remarkable evolution. Early computer vision relied on hand-designed techniques such as convolution kernels, homographies, and graph models, which enabled computers to interpret and process visual data. Eventually, however, these methods reached their limits: the computational demands of image recognition and semantic segmentation were simply too great for the computing technology of that era.

The turning point in the evolution of computer vision came with the surge in computing power during the 2000s and 2010s. This made it practical to train neural networks requiring millions or even billions of calculations, most notably Convolutional Neural Networks (CNNs). Although CNNs had been proposed earlier, large-scale training turned them into the dominant approach, enabling more efficient and accurate image recognition, object detection, and scene understanding. Progress in computer vision remains closely tied to advances in computing capabilities.

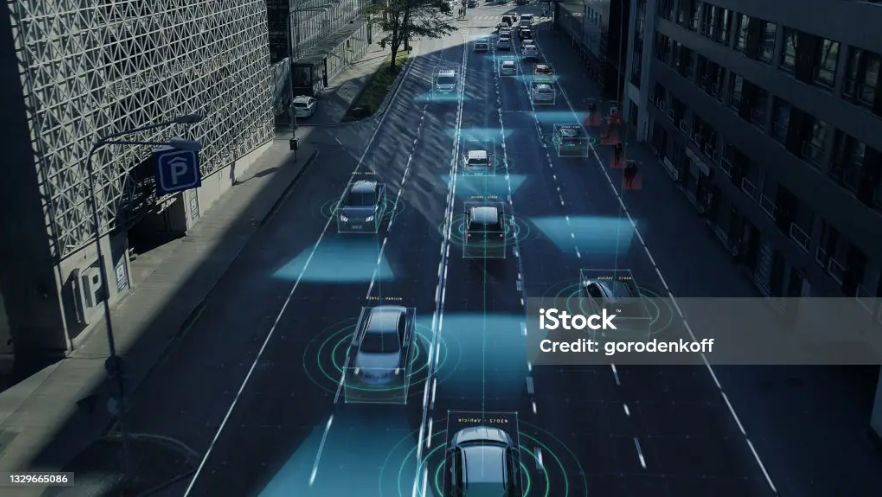

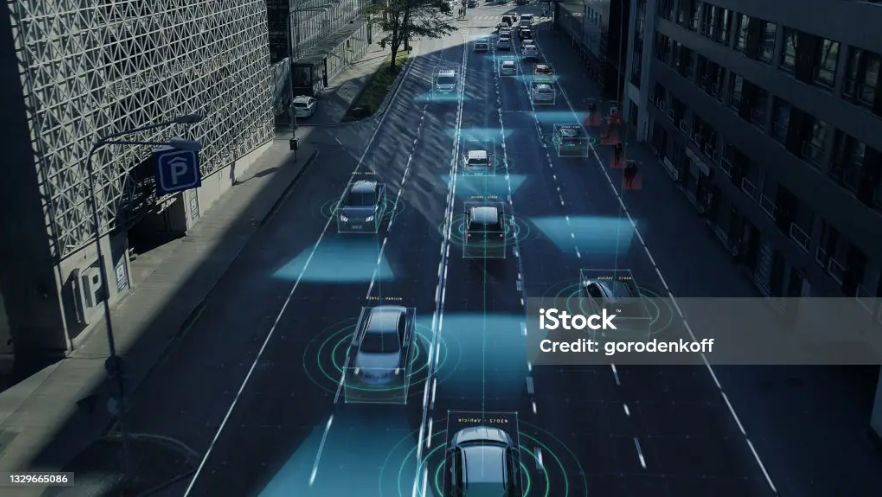

Along this timeline are several notable milestones, such as Facebook’s 2010 rollout of facial recognition and Google’s release of TensorFlow in 2015. These events signaled the transformative potential of Computer Vision. Today, Computer Vision underpins a wide array of applications: self-driving cars, faster medical diagnoses, frictionless checkout experiences, and more.

Core Concepts and Technologies

Computer Vision involves recognizing patterns and understanding images, a capability computers acquire by training on large labeled image datasets. By analyzing features such as colors, shapes, and spatial relationships, computers learn to recognize objects in images.

Pattern recognition in Computer Vision builds on a few fundamental concepts, each of which plays a crucial role in understanding and processing visual data:

1. Pixels and Color Channels

Images are composed of pixels, the smallest units of an image. In a standard color (RGB) image, each pixel has three channels, one each for red, green, and blue. Each channel stores a number indicating that color’s intensity, commonly an integer from 0 to 255 in 8-bit images. Combining the three channels produces the full range of colors in the image. Grayscale images, by contrast, use a single channel.

When processed by computers, images are represented as numeric arrays of pixel values. A grayscale image is a single matrix, and a color image is a stack of three matrices, one per channel.
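As a minimal illustration, the sketch below uses NumPy to build a tiny, made-up 2×3 RGB image as a height × width × channels array. NumPy is our choice here; the article does not prescribe a library. The grayscale conversion uses the widely used ITU-R BT.601 luma weights. It is only an example of collapsing three channels into one matrix.

```python
import numpy as np

# A hypothetical 2x3 RGB image: height 2, width 3, 3 color channels.
# Values are 8-bit intensities (0 = none, 255 = full) in R, G, B order.
image = np.array(
    [
        [[255, 0, 0], [0, 255, 0], [0, 0, 255]],        # red, green, blue
        [[255, 255, 255], [0, 0, 0], [128, 128, 128]],  # white, black, gray
    ],
    dtype=np.uint8,
)

print(image.shape)           # (2, 3, 3): rows, columns, channels
print(image[0, 2])           # the blue pixel: R=0, G=0, B=255
red_channel = image[:, :, 0] # a 2x3 matrix holding only the red intensities
print(red_channel)

# Converting to grayscale collapses the 3 channels into a single matrix
# by taking a weighted sum of R, G, and B for every pixel.
gray = (image @ np.array([0.299, 0.587, 0.114])).round().astype(np.uint8)
print(gray.shape)            # (2, 3)
```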

2. Matrices and Linear Algebra

To manipulate and process images, Computer Vision relies heavily on linear algebra. An image is treated as a matrix whose rows and columns correspond to rows and columns of pixels. Linear algebra operations, such as matrix multiplication, are applied to these matrices for tasks like filtering, geometric transformation (rotation, scaling, warping), and feature extraction. This mathematical foundation is at the core of many Computer Vision algorithms.
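Here is a minimal sketch of a geometric transformation as matrix multiplication, assuming NumPy and made-up point coordinates. Each pixel coordinate is extended with a 1 (homogeneous coordinates), so a single 3×3 matrix can both rotate and translate it. A homography, mentioned earlier, uses the same 3×3 form with a general bottom row, followed by division by the third coordinate.

```python
import numpy as np

def affine_matrix(angle_deg: float, tx: float, ty: float) -> np.ndarray:
    """3x3 homogeneous matrix: rotate by angle_deg about the origin, then translate by (tx, ty)."""
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    return np.array([
        [c, -s, tx],
        [s, c, ty],
        [0.0, 0.0, 1.0],
    ])

# Corner coordinates (x, y) of a hypothetical 4x2-pixel region.
corners = np.array([[0, 0], [4, 0], [4, 2], [0, 2]], dtype=float)

# Append a 1 to each point (homogeneous coordinates) so that translation
# can also be expressed as part of a single matrix multiplication.
homogeneous = np.hstack([corners, np.ones((len(corners), 1))])

# Note: with the y axis pointing down, as in most image libraries,
# a positive angle appears as a clockwise rotation on screen.
M = affine_matrix(90, tx=10, ty=0)
transformed = (M @ homogeneous.T).T[:, :2]
print(np.round(transformed, 6))
```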

3. Convolutional Neural Networks (CNNs)

Linear algebra remains a fundamental tool in more advanced approaches such as CNNs, which apply convolution and pooling operations to image matrices. What sets CNNs apart is that they learn the values of their convolutional kernels. Traditional methods used predetermined kernels, such as Gaussian kernels or Sobel operators. Through training on large datasets, a CNN automatically finds kernel values that work well for the pattern recognition task at hand.

This approach, loosely inspired by the pattern recognition capabilities of the brain’s visual system, has revolutionized Computer Vision by achieving state-of-the-art results in tasks like image classification, object detection, and semantic segmentation.
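The sketch below, assuming NumPy and a tiny synthetic image, applies a fixed Sobel kernel followed by 2×2 max pooling. In a CNN, the kernel values would be trainable parameters updated during training rather than hard-coded. The loop-based implementation is written for readability, not speed. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped), which is what CNNs conventionally call convolution.

```python
import numpy as np

def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation: slide the kernel over the image, no padding."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise multiply the window by the kernel and sum the result.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(feature_map: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that don't fill a full window are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    trimmed = feature_map[:h, :w]
    return trimmed.reshape(h // size, size, w // size, size).max(axis=(1, 3))

# Hand-designed Sobel kernel that responds to vertical edges.
# In a CNN, these nine numbers would be learned instead.
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

# Hypothetical 6x6 grayscale image: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

edges = conv2d(image, sobel_x)  # shape (4, 4); strong response along the vertical edge
pooled = max_pool(edges, 2)     # shape (2, 2); keeps the strongest response per window
print(edges)
print(pooled)
```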

The tasks Computer Vision performs are numerous, but the key ones include:

- Image classification: Assigning images to predefined categories.

- Object detection: Identifying objects and placing bounding boxes around them.

- Image segmentation: Dividing an image into regions, typically by labeling every pixel, to separate objects from their surroundings.

- Facial recognition: Detecting and recognizing human faces.

- Edge detection: Identifying object boundaries, where image brightness changes sharply.

- Image restoration: Reconstructing old or damaged images to restore their quality.
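To make the difference between some of these tasks concrete, here is a hypothetical sketch of what their outputs might look like for a single 4×4 image. Classification yields one label, detection yields a list of boxes, and segmentation yields a per-pixel class map. The sketch also includes Intersection over Union (IoU), a standard measure of how well a predicted box overlaps a reference box. The `Box` class and all values are illustrative assumptions, not any particular library’s format.

```python
from dataclasses import dataclass

import numpy as np

@dataclass
class Box:
    """Hypothetical axis-aligned bounding box: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float
    label: str

def iou(a: Box, b: Box) -> float:
    """Intersection over Union: overlap area divided by combined area (0.0 to 1.0)."""
    inter_w = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    inter_h = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = inter_w * inter_h
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Hypothetical outputs for one 4x4 image, one per task type:
classification = "cat"                                          # one label for the whole image
detections = [Box(0, 0, 2, 2, "cat"), Box(2, 2, 4, 4, "dog")]  # one box per object
segmentation = np.zeros((4, 4), dtype=int)                      # one class id per pixel (0 = background)
segmentation[:2, :2] = 1  # pixels belonging to the cat
segmentation[2:, 2:] = 2  # pixels belonging to the dog

# Comparing a predicted box against a reference box.
ground_truth = Box(0, 0, 2, 2, "cat")
prediction = Box(1, 0, 3, 2, "cat")
print(iou(ground_truth, prediction))
```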

These tasks have evolved significantly, driven by neural networks and the deep learning revolution, enhancing the accuracy and impact of Computer Vision applications.

Latest Advancements

One of the most significant recent developments in Computer Vision is the adoption of the Transformer architecture, which had already delivered state-of-the-art results in many Natural Language Processing (NLP) tasks. The success of GPT-3, a powerful language model, highlighted the architecture’s capabilities.

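Transformers are neural network models originally designed for sequential data such as sentences. Their self-attention mechanism lets every element of a sequence attend to every other element, which makes them adept at capturing dependencies and relationships across long sequences. To apply Transformers to images, an image is typically converted into a sequence, for example by splitting it into fixed-size patches.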
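The core mechanism can be sketched as single-head scaled dot-product self-attention, softmax(Q K^T / sqrt(d)) V, as described in the original Transformer paper. This NumPy version is a simplified illustration. The projection matrices are random stand-ins for learned weights. Real models add multiple heads, positional information, and further layers.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence x of shape (n, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (n, n): similarity of every token to every other
    weights = softmax(scores, axis=-1)       # each row becomes a probability distribution
    return weights @ v, weights              # each output is a weighted mix of all values

rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 4, 8, 8
x = rng.normal(size=(n_tokens, d_model))  # e.g. 4 word embeddings or 4 image-patch embeddings
# Random projection matrices stand in for learned weights.
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape)            # (4, 8)
print(weights.sum(axis=1))  # each row of attention weights sums to 1
```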

The adoption of Transformers in the field of Computer Vision has brought about notable results in recent times, and several projects stand out:

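- DETR (End-to-End Object Detection with Transformers): Uses a Transformer to predict the set of objects in an image directly. This removes hand-designed components such as anchor generation and non-maximum suppression, and the approach also extends to segmentation.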

- Vision Transformer (An Image Is Worth 16×16 Words: Transformers for Image Recognition at Scale): Applies a standard Transformer to image classification by treating an image as a sequence of 16×16-pixel patches. It relies on self-attention rather than convolutional layers.

- Image GPT (Generative Pretraining from Pixels): Trains a Transformer to predict an image’s pixels one at a time, much as GPT models predict the next word in a text. The model can complete partially shown images, and the representations it learns transfer to image classification.

- End-to-End Lane Shape Prediction with Transformers: Uses a Transformer to predict the shapes of lane markings for autonomous driving, showing how the architecture applies to real-world problems.
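The Vision Transformer’s “16×16 words” idea can be sketched as cutting an image into non-overlapping 16×16 patches and flattening each one into a vector, which produces a sequence of tokens. This is a simplified NumPy illustration that assumes the image sides are divisible by 16. In ViT, each flattened patch is then linearly projected and combined with a position embedding before entering the Transformer.

```python
import numpy as np

def image_to_patches(image: np.ndarray, patch: int = 16) -> np.ndarray:
    """Split an (H, W, C) image into a sequence of flattened patch x patch tiles.

    Returns an array of shape (num_patches, patch * patch * C), ordered row by row.
    Assumes H and W are divisible by `patch`.
    """
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be a multiple of the patch size"
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)  # (patch rows, patch cols, patch, patch, c)
    return tiles.reshape(-1, patch * patch * c)

image = np.random.default_rng(0).random((224, 224, 3))  # hypothetical 224x224 RGB image
tokens = image_to_patches(image, 16)
print(tokens.shape)  # (196, 768): 14 x 14 patches, each holding 16*16*3 values
```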

Beyond Transformers, Computer Vision has seen other recent advances. Few-shot learning enables models to recognize new classes from only a handful of labeled examples. Self-supervised learning lets models learn useful representations from unlabeled data, reducing the need for large labeled datasets. Reinforcement learning combines visual perception with decision-making, for example in robotics. Continual learning, much like human learning, lets models accumulate knowledge from data that arrives sequentially while aiming not to forget what they learned before. Together, these advances are making Computer Vision more efficient at solving real-world problems.
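One simple way to do few-shot classification, in the spirit of prototypical networks, is to average the embeddings of the few labeled examples for each class. New images are then assigned to the class with the nearest average. The sketch below assumes the embeddings already exist. Here they are made-up 2-D vectors; in practice they would come from a pretrained image encoder. This is one illustrative technique, not the only approach to few-shot learning.

```python
import numpy as np

def fit_prototypes(support_embeddings, support_labels):
    """Average the embeddings of each class's few labeled examples into one prototype."""
    classes = sorted(set(support_labels))
    label_array = np.array(support_labels)
    protos = np.stack([support_embeddings[label_array == c].mean(axis=0) for c in classes])
    return classes, protos

def predict(query_embeddings, classes, protos):
    """Assign each query to the class whose prototype is nearest (squared Euclidean distance)."""
    d = ((query_embeddings[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return [classes[i] for i in d.argmin(axis=1)]

# Hypothetical 2-D embeddings: only two labeled examples per class.
support = np.array([[0.0, 0.1], [0.2, 0.0],    # two "cat" examples
                    [1.0, 1.1], [0.9, 1.0]])   # two "dog" examples
labels = ["cat", "cat", "dog", "dog"]
classes, protos = fit_prototypes(support, labels)
print(predict(np.array([[0.1, 0.2], [1.2, 0.9]]), classes, protos))  # ['cat', 'dog']
```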

Practical Applications

Computer Vision is transforming a wide range of industries. In autonomous vehicles, it plays a pivotal role in recognizing traffic signs, detecting pedestrians, and assessing road conditions, all of which are essential to making self-driving cars safe. In healthcare, Computer Vision powers medical image analysis that supports diagnosis, treatment planning, and patient monitoring.

In retail, Computer Vision improves inventory management, analyzes customer behavior, and enables checkout-free shopping. In agriculture, it increases efficiency through crop monitoring and precise application of fertilizers and pesticides. Manufacturers use Computer Vision for quality control, predictive maintenance, and monitoring workplace safety.

The technology also strengthens security and surveillance systems by enabling face and vehicle recognition, anomaly detection, and crowd analysis.

In social media, Computer Vision underpins image and video analysis, content moderation, and augmented reality filters. It is also a valuable tool in wildlife conservation, where it is used to track and monitor animals.

Computer Vision also has a place in sports, where it contributes to player tracking, performance analysis, and injury prevention. In visual effects (VFX) and media, it powers deepfakes, photo and video editing, and generative image tools such as DALL-E and Midjourney.

Challenges in Computer Vision

According to a report by the AI Accelerator Institute, Computer Vision holds incredible promise but also faces some notable challenges.

1. High Costs

The high costs of Computer Vision are driven primarily by the heavy computational requirements of working with images. Training and deploying sophisticated models requires significant computing resources, particularly Graphics Processing Units (GPUs). Their massively parallel design suits the matrix operations behind image processing and pattern recognition. Training advanced models also demands large-scale computing facilities equipped with expensive hardware, which adds further to the costs.

Electricity consumption is also significant, because the computations involved in image analysis are energy-intensive. Together, the costs of hardware, electricity, and infrastructure form a substantial barrier to entry into Computer Vision. Harnessing the technology’s potential therefore requires considerable investment and resources.
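A rough back-of-the-envelope calculation shows why the compute bill grows so quickly. The sketch below counts multiply-accumulate operations (MACs) for one standard convolution layer. The layer dimensions are a hypothetical but typical example. The count ignores bias terms, activation functions, and the backward pass needed during training.

```python
def conv2d_macs(out_h: int, out_w: int, in_ch: int, out_ch: int, k: int) -> int:
    """Multiply-accumulate operations for one standard 2-D convolution layer on one image."""
    # Each output value needs in_ch * k * k multiply-adds,
    # and the layer produces out_h * out_w * out_ch output values.
    return out_h * out_w * out_ch * in_ch * k * k

# Hypothetical layer: 224x224 output, 64 input and 64 output channels, 3x3 kernels.
macs = conv2d_macs(224, 224, 64, 64, 3)
print(f"{macs:,} MACs")  # 1,849,688,064 MACs for a single image through a single layer
```

That is roughly 1.85 billion MACs for one layer and one image. A training run repeats this across many layers, potentially millions of images, and multiple passes over the dataset.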

2. Lack of Experienced Professionals

The skills gap in Computer Vision is substantial. Although there are many AI experts worldwide, demand has outpaced supply. As AI and deep learning become mainstream, companies large and small are entering the field and competing for the same specialists.

3. Size of Required Data Sets

Another significant challenge in Computer Vision is the size of the datasets needed to train models. Collecting large numbers of images is easy, but collecting large numbers of accurately labeled ones is hard. Labeling is time-consuming and requires careful quality control. Services such as Amazon Mechanical Turk and Yandex Toloka can help with labeling, but they add to the cost. As a result, assembling a large, well-labeled dataset for training Computer Vision models can be expensive.
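One common, simple quality-control step for crowdsourced labels works in three stages. Several annotations are collected per image, the majority label is kept, and images with low agreement are sent for review. The sketch below is a hypothetical illustration. The function, data format, and 0.6 agreement threshold are assumptions, not features of Mechanical Turk or Toloka.

```python
from collections import Counter

def aggregate_labels(votes_per_image, min_agreement=0.6):
    """Majority-vote crowd labels and flag images where annotators disagree too much.

    votes_per_image: dict mapping image id -> non-empty list of labels from different annotators.
    Returns (accepted, needs_review): accepted maps image id -> winning label.
    """
    accepted, needs_review = {}, []
    for image_id, votes in votes_per_image.items():
        label, count = Counter(votes).most_common(1)[0]
        if count / len(votes) >= min_agreement:
            accepted[image_id] = label
        else:
            needs_review.append(image_id)
    return accepted, needs_review

# Hypothetical annotations from three annotators per image.
votes = {
    "img_001": ["cat", "cat", "cat"],
    "img_002": ["dog", "cat", "dog"],
    "img_003": ["cat", "dog", "fox"],
}
accepted, needs_review = aggregate_labels(votes)
print(accepted)      # {'img_001': 'cat', 'img_002': 'dog'}
print(needs_review)  # ['img_003']
```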

4. Ethical Issues

Ethical concerns in Computer Vision are becoming more pressing, particularly around biases introduced by the data used to train models. One example is gender bias: a generative model might consistently depict doctors as male, reinforcing gender stereotypes.

Similarly, racial bias can emerge when models unintentionally associate certain racial groups with negative stereotypes. Bias can also affect underrepresented communities more broadly, making it difficult for these groups to receive fair and accurate representation.

Final Thoughts

As Computer Vision continues to advance, it holds immense potential for the future. Its impact spans industries from healthcare and autonomous vehicles to agriculture and security. Because it can improve diagnosis, streamline operations, and automate tasks, Computer Vision is set to drive further technological advancements.

To explore Computer Vision and put its capabilities to use, start by studying the specific technologies and applications relevant to your field. The tools and resources to begin are readily available. Options include OpenCV for real-time Computer Vision, TensorFlow for machine learning applications, and MATLAB’s versatile platform for engineers and scientists.
