Extracting intersectional stereotypes from English text

Mining huge datasets of English text reveals stereotypes about gender, race, and class that are prevalent in English-speaking societies. Tessa Charlesworth and colleagues developed a stepwise procedure, Flexible Intersectional Stereotype Extraction (FISE), which they applied to word embeddings trained on billions of words of English Internet text.

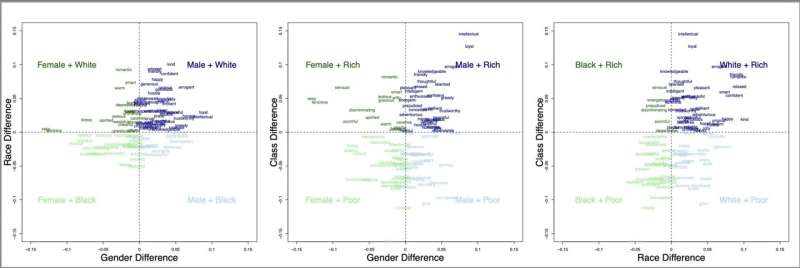

The procedure let them explore the traits associated with intersectional identities. It did so by quantifying how often occupation labels or trait adjectives appeared near phrases that combine multiple identities, such as “Black Women,” “Rich Men,” “Poor Women,” or “White Men.”
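FISE itself works on word embeddings, in which words used in similar contexts end up with similar vectors. The code below is a simplified, hypothetical illustration of stepwise assignment with embeddings. It is not the authors' published procedure. It makes three assumptions:

- Cosine similarity is the association measure.
- A word goes to whichever side of each identity dimension it is closer to on average.
- Made-up two-dimensional vectors stand in for real embeddings.

The names `cosine`, `mean_similarity`, `assign_group`, and `DIMENSIONS` are illustrative only.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def mean_similarity(word_vec, group_vecs):
    """Average cosine similarity between a word and a list of group-term vectors."""
    return sum(cosine(word_vec, g) for g in group_vecs) / len(group_vecs)

def assign_group(word_vec, dimensions):
    """Step through identity dimensions in order, picking one side of each.

    `dimensions` is a list of ((label_a, vecs_a), (label_b, vecs_b)) pairs.
    On each dimension the word goes to the side it is more similar to on
    average (ties go to side a). The chosen labels are joined into an
    intersectional label such as "White Men".
    """
    labels = []
    for (label_a, vecs_a), (label_b, vecs_b) in dimensions:
        diff = mean_similarity(word_vec, vecs_a) - mean_similarity(word_vec, vecs_b)
        labels.append(label_a if diff >= 0 else label_b)
    return " ".join(labels)

# Hypothetical 2-D toy vectors: axis 1 stands in for race, axis 0 for gender.
# Real embeddings (e.g. GloVe) have hundreds of dimensions and many terms per group.
DIMENSIONS = [
    (("White", [[0.0, 1.0]]), ("Black", [[0.0, -1.0]])),
    (("Men", [[1.0, 0.0]]), ("Women", [[-1.0, 0.0]])),
]

print(assign_group([0.8, 0.6], DIMENSIONS))
print(assign_group([-0.6, -0.8], DIMENSIONS))
```

In this sketch every word is forced onto one side of every dimension. The shares across groups therefore need not be equal: a word that sits even slightly closer to the "Men" and "White" terms lands in "White Men."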

In their analysis, published in PNAS Nexus, the authors first show that the method is a valid way of extracting stereotypes. Occupations that are dominated in reality by particular identities (e.g., architect, engineer, and manager are dominated by white men) are also strongly associated with that same intersectional group in language. The match occurs at a rate significantly above chance, about 70%.
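One common way to check whether agreement is "above chance" is an exact one-sided binomial test. Here the agreement is between the group an occupation is tied to in language and the group that dominates it in reality. The code below is a minimal sketch built on assumptions, not the paper's method:

- There are four equally likely groups, so chance is 25%.
- The occupations are placeholders with invented labels.

The paper's actual groups, chance level, and statistical test may differ. The function names are hypothetical.

```python
import math

def agreement_rate(predicted, actual):
    """Fraction of occupations whose language-based group matches the real-world group."""
    matches = sum(1 for occ, group in actual.items() if predicted.get(occ) == group)
    return matches / len(actual)

def binomial_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p): chance of k or more matches by luck alone."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

# Hypothetical labels for four placeholder occupations (not data from the paper).
actual = {"occ_a": "White Men", "occ_b": "White Men",
          "occ_c": "White Women", "occ_d": "Black Women"}
predicted = {"occ_a": "White Men", "occ_b": "White Men",
             "occ_c": "White Women", "occ_d": "White Women"}

chance = 1 / 4  # assumes four possible groups, equally likely under random guessing
rate = agreement_rate(predicted, actual)
matches = round(rate * len(actual))
print(rate, binomial_tail(matches, len(actual), chance))
```

With only four toy occupations, a 75% match rate gives p ≈ 0.051, which is not quite significant at the conventional 0.05 level. A rate of about 70% becomes convincing only when it holds across many occupations.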

Next, the authors looked at personality traits. The FISE procedure found that 59% of the traits studied were associated with “White Men,” but just 5% were associated with “Black Women.”

According to the authors, these imbalances in trait frequency indicate a pervasive androcentric (male-centric) and ethnocentric (white-centric) bias in English. The valence (positivity or negativity) of the associated traits was also imbalanced. Some 78% of traits associated with “White Rich” were positive, while only 21% of traits associated with “Black Poor” were positive.
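Once each trait has been assigned to a group and given a valence score, percentages like those reported above are simple proportions. The code below is a minimal sketch with three assumptions:

- A trait counts as positive when its valence score is above zero.
- The traits, group assignments, and scores are invented placeholders, not the study's data.
- The helper names are hypothetical.

```python
from collections import Counter

def group_shares(assignments):
    """Percentage of all traits assigned to each intersectional group."""
    counts = Counter(assignments.values())
    total = len(assignments)
    return {group: 100 * n / total for group, n in counts.items()}

def percent_positive(assignments, valence):
    """Within each group, the percentage of its traits with positive valence (> 0)."""
    totals, positives = Counter(), Counter()
    for trait, group in assignments.items():
        totals[group] += 1
        if valence[trait] > 0:
            positives[group] += 1
    return {group: 100 * positives[group] / totals[group] for group in totals}

# Hypothetical toy data: placeholder traits, groups, and valence scores.
assignments = {"trait_1": "White Men", "trait_2": "White Men",
               "trait_3": "White Men", "trait_4": "Black Women"}
valence = {"trait_1": 0.7, "trait_2": 0.4, "trait_3": -0.5, "trait_4": -0.2}

print(group_shares(assignments))
print(percent_positive(assignments, valence))
```

The two measures are independent. A group can hold many traits while few of them are positive, which is why the article reports frequency and valence separately.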

According to the authors, patterns such as these have downstream consequences for AI, machine translation, and text generation. Beyond helping researchers understand how intersectional bias shapes such outcomes, FISE can be used to study a range of intersectional identities across languages and even across history, the authors note.

More information:

Tessa E S Charlesworth et al, Extracting intersectional stereotypes from embeddings: Developing and validating the Flexible Intersectional Stereotype Extraction procedure, PNAS Nexus (2024). DOI: 10.1093/pnasnexus/pgae089

Citation:

Extracting intersectional stereotypes from English text (2024, March 20) retrieved 20 March 2024 from https://phys.org/news/2024-03-intersectional-stereotypes-english-text.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
