Google’s Minerva, Solving Math Problems with AI | by Salvatore Raieli | Jul, 2022

Quantitative reasoning is hard for humans and it is hard for computers. Google’s new model just got astonishing results in solving math problems.

We are now used to language models such as GPT-3, but in general their output is textual. Quantitative reasoning is difficult (many of us still have nightmares about university calculus), and it is hard for language models too: their performance is still far from human level. As the authors state in their blog post, solving mathematical or scientific questions requires several different skills:

Correctly parsing a question with natural language and mathematical notation, recalling relevant formulas and constants, and generating step-by-step solutions involving numerical calculations and symbolic manipulation.

Overcoming these challenges is hard, and it had been forecast that a model would reach the accuracy Minerva now achieves on the MATH dataset (a dataset of 12,500 high-school competition math problems) only in 2025. We are three years ahead of schedule: Google AI Research has announced Minerva.

In their article, they present a model that achieved incredible results on problems from many different subjects (algebra, probability, physics, number theory, geometry, biology, astronomy, chemistry, machine learning, and so on). They write:

Minerva solves such problems by generating solutions that include numerical calculations and symbolic manipulation without relying on external tools such as a calculator.

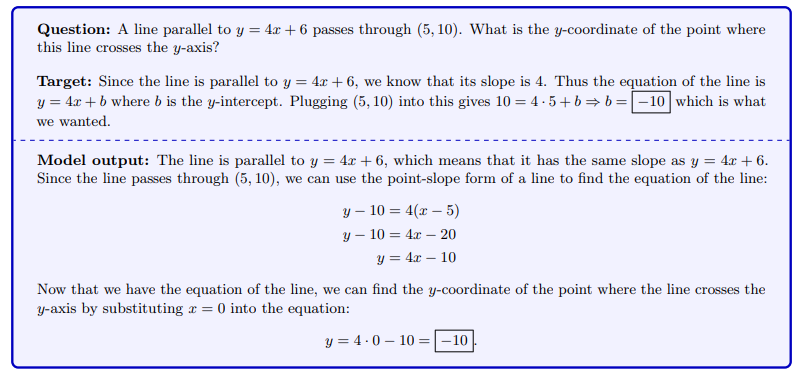

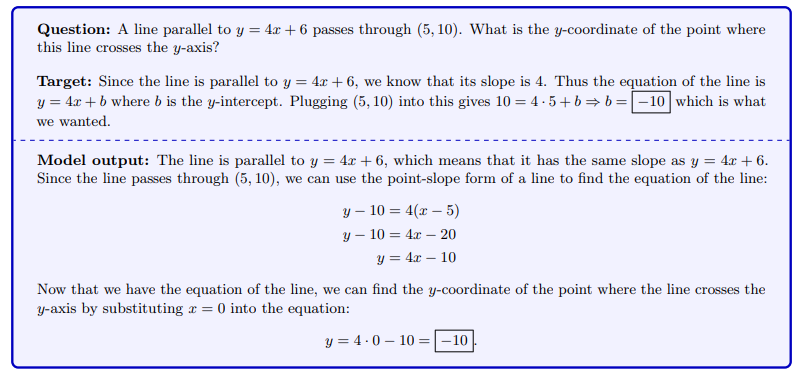

Here is an example in algebra: Minerva receives a problem as input and responds with a step-by-step solution. Notice that the model is able to solve the equation by simplifying and substituting variables.

You can also have a look at the interactive sample explorer to see examples of problems from other STEM disciplines, such as biology, chemistry, and machine learning, which show how advanced the model is.

Minerva is based on the Pathways Language Model (PaLM), which was further trained on 118 gigabytes of scientific papers from arXiv (which are quite dense in mathematical expressions) and web pages containing mathematical content. PaLM, published in April 2022, is a 540-billion-parameter language model able to generalize across different domains and tasks. The blog post explains an important aspect of the training:

Standard text cleaning procedures often remove symbols and formatting that are essential to the semantic meaning of mathematical expressions. By maintaining this information in the training data, the model learns to converse using standard mathematical notation.

In other words, the text had to be preprocessed differently from the usual pipelines so that mathematical notation survived into the training data.
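To make the point concrete, here is a minimal sketch contrasting an aggressive cleaner with a math-aware one. Both functions (`naive_clean`, `math_aware_clean`) are hypothetical illustrations, not the actual Minerva pipeline, and for simplicity they only recognize `$...$` and `$$...$$` LaTeX spans (no `\(...\)`, LaTeX environments, or escaped dollar signs).

```python
import re

# Inline ($...$) or display ($$...$$) LaTeX spans; the display form is tried first.
MATH_SPAN = re.compile(r"(\$\$.+?\$\$|\$.+?\$)", re.DOTALL)
HTML_TAG = re.compile(r"<[^>]+>")


def naive_clean(text: str) -> str:
    """Hypothetical aggressive cleaner: keeps only letters, digits, and whitespace."""
    text = HTML_TAG.sub(" ", text)
    text = re.sub(r"[^A-Za-z0-9\s]", " ", text)  # every symbol is dropped, math included
    return " ".join(text.split())


def math_aware_clean(text: str) -> str:
    """Hypothetical cleaner: strips HTML tags but leaves LaTeX math spans intact."""
    # With one capturing group, re.split puts the matched math spans at odd indices.
    parts = MATH_SPAN.split(text)
    cleaned = [part if i % 2 else HTML_TAG.sub(" ", part) for i, part in enumerate(parts)]
    return " ".join("".join(cleaned).split())


sample = "<p>The roots of $x^2 - 5x + 6 = 0$ are $x=2$ and $x=3$.</p>"
print(naive_clean(sample))       # The roots of x 2 5x 6 0 are x 2 and x 3
print(math_aware_clean(sample))  # The roots of $x^2 - 5x + 6 = 0$ are $x=2$ and $x=3$.
```

The naive version turns the equation into a meaningless sequence of tokens, while the math-aware version keeps exactly the notation the model needs to learn from.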

Interestingly, Minerva does not generate just one solution but several (the steps differ but, as the authors write, they generally arrive at the same final answer). The candidate solutions are sampled stochastically, and the most common final answer is then chosen as the solution (majority voting, a strategy that had already proved successful with PaLM).
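A minimal sketch of the majority-voting step, assuming the final answer has already been extracted from each sampled solution. The sampling itself is not shown, and the paper's answer normalization is not reproduced here: as a simplifying assumption, answers that differ only in whitespace are treated as equal.

```python
from collections import Counter


def majority_vote(final_answers: list[str]) -> str:
    """Return the most frequent final answer among several sampled solutions.

    Only the final answers are compared; the reasoning steps are ignored.
    On a tie, Counter.most_common returns the answer encountered first.
    """
    if not final_answers:
        raise ValueError("need at least one sampled answer")
    # Simplifying assumption: answers that differ only in whitespace are equal.
    normalized = ["".join(a.split()) for a in final_answers]
    answer, _count = Counter(normalized).most_common(1)[0]
    return answer


# Final answers extracted from five hypothetical sampled solutions.
samples = ["x = 3", "x = 3", "x = 2", " x=3 ", "x = 5"]
print(majority_vote(samples))  # x=3
```

Comparing only the final answers is what lets different reasoning paths "vote" for the same result; a real system would need a more robust equivalence check (for example, recognizing that `1/2` and `0.5` are the same answer).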

They then evaluated their approach on several benchmark datasets: MATH (high-school math competition problems), but also more complex datasets such as OCWCourses (a collection of college and graduate problems from MIT OpenCourseWare). In all these cases, they reached state-of-the-art results.

These results are impressive; however, as the authors state, the model is still far from perfect:

Minerva still makes its fair share of mistakes.

The article also explains what these mistakes are due to:

About half are calculation mistakes, and the other half are reasoning errors, where the solution steps do not follow a logical chain of thought.

As with human students, the model can also arrive at the right final answer through faulty reasoning (the paper calls these cases “false positives”). However, the authors observed that such cases are relatively rare (ironically, the same is true for human students):

In our analysis, we find that the rate of false positives is relatively low (Minerva 62B produces less than 8% false positives on MATH).

They also noticed that false positives were more common on harder problems: on MATH's difficulty scale from 1 to 5, the false-positive rate was higher for problems of difficulty 5.
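To make the definition explicit, here is a small sketch computing a false-positive rate per difficulty level. It assumes, as a simplification, that the rate is the share of samples with a correct final answer whose reasoning a human grader marked as invalid. `GradedSample` and the toy records are hypothetical and invented only to exercise the function; they are not data from the paper.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class GradedSample:
    level: int             # MATH difficulty level, 1 (easiest) to 5 (hardest)
    answer_correct: bool   # final answer matches the reference answer
    reasoning_valid: bool  # a human grader judged the solution steps sound


def false_positive_rate_by_level(samples: list[GradedSample]) -> dict[int, float]:
    """Per level: share of correct-answer samples whose reasoning is invalid."""
    correct = defaultdict(int)
    false_pos = defaultdict(int)
    for s in samples:
        if not s.answer_correct:
            continue  # a wrong final answer cannot be a false positive
        correct[s.level] += 1
        if not s.reasoning_valid:
            false_pos[s.level] += 1
    return {level: false_pos[level] / correct[level] for level in sorted(correct)}


# Invented toy records, only to show how the function behaves.
toy = [
    GradedSample(level=1, answer_correct=True, reasoning_valid=True),
    GradedSample(level=1, answer_correct=True, reasoning_valid=True),
    GradedSample(level=5, answer_correct=True, reasoning_valid=False),
    GradedSample(level=5, answer_correct=True, reasoning_valid=True),
    GradedSample(level=5, answer_correct=False, reasoning_valid=False),
]
print(false_positive_rate_by_level(toy))  # {1: 0.0, 5: 0.5}
```

Note that the `reasoning_valid` field has to come from a human grader, which is exactly the limitation the authors point out: the correctness of the reasoning cannot be checked automatically.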

The article describes this as a limitation, because it is not possible to automatically identify the cases where the model predicts the correct answer using faulty reasoning.

Here is an example of an error:

Sometimes the model misunderstood the question or used an incorrect fact. Another type of error was answers that were too short (an incorrect answer given directly, without reasoning, comparable to a student guessing when they do not know the solution). In some cases, the model produced what the authors call “hallucinated math objects”, meaning facts or equations that do not exist. However, these cases are rarer; the prevalent errors were incorrect reasoning and incorrect calculations.

In the paper, the researchers also discussed the potential social impact of their model, which, as they recognize, could be huge:

Artificial neural networks capable of solving quantitative reasoning problems in a general setting have the potential of substantial societal impact

However, for the moment, two main limitations reduce this potential impact:

Minerva, while a step in this direction, is still far from achieving this goal, and its potential societal impact is therefore limited. The model’s performance is still well below human performance, and furthermore, we do not have an automatic way of verifying the correctness of its outputs.

According to the researchers, in the future these models could help tutors and reduce educational inequalities. However, calculators and computers have not reduced educational inequalities; they have actually widened the gap between countries where these resources are easily available and countries where education is underfunded. In any case, Minerva represents a first step towards a powerful tool that could be useful in many domains. For example, researchers could find it highly beneficial as an aid in their work.

You can look for my other articles, subscribe to get notified when I publish new ones, and connect with me or reach me on LinkedIn. Thanks for your support!

Here is the link to my GitHub repository, where I am planning to collect code and many resources related to machine learning, artificial intelligence, and more.
