How I Learned To Love Autonomous Generative AI Versus Auto-Complete

New generative AI for code tools help developers do their jobs more quickly with less effort by synthesizing code. ChatGPT and other LLM approaches have made this kind of generative AI accessible to a broad general audience. Developers are flocking to it.

But too often overlooked are the fundamental differences between generative AI for code tools, and those differences inform which approach is better for your developer tasks. Under the covers, generative AI for code tools fall into two basic categories: transformer-based large language models (e.g., Copilot, ChatGPT), and reinforcement learning-based systems (RL).

The biggest difference between RL and LLMs like Copilot is that Copilot is an interactive code suggestion tool for general code writing (Microsoft calls it an “AI pair programmer”), whereas reinforcement-based learning systems can create code without human help. One RL example is Diffblue Cover which can autonomously write entire suites of fully-functioning unit tests.

Copilot is designed to work with a human developer to deal with tedious and repetitive coding tasks like writing boilerplate and calling into “foreign lands” (3rd party APIs). While you can get Copilot to suggest a unit test for your code, you can’t point it at a million lines of code and come back in a few hours to tens of thousands of unit tests that all compile and run correctly.

That’s because they are inherently different things, or tools, where the developer needs to first consider what she wants to accomplish before choosing either approach.

Reinforcement learning can be a powerful tool for solving complex problems. RL systems run quickly on a laptop because they use pre-collected data to learn, making it particularly effective on large data sets.

Historically the cost of doing online training (making an AI model interact with the real world to learn) was prohibitive. The new LLMs from OpenAI, Google, and Meta sucked much of that expense out of the equation by absorbing that cost. But developers still need to review the generated code!

Take creating unit tests, for example. Copilot will write one test and then require some “prompt engineering” to coax it into writing a different test, plus the time to edit the resulting suggestions and get them to compile and run properly. RL, on the other hand, can understand the impact of each action, potentially receiving a reward and reshaping its parameters in order to maximize those rewards. This way, the model learns what actions yield rewards and defines the policy — the strategy — it will follow to maximize them. And hey, presto! It yields unit tests that compile and run autonomously.

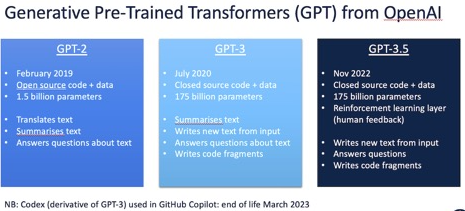

Code completion tools based on transformer models predict the next word or token in a sequence by analyzing the given text. These transformer models have evolved dramatically and put generative AI in news headlines around the world. Their output looks like magic. GPT-2, one of the first open-source models released in February 2019. Since then, the number of parameters in these models has scaled dramatically, from 1.5 billion in GPT-2 to 175 billion in GPT-3.5, released in November 2022.

OpenAI Codex, a model with approximately 5 billion parameters used in GitHub CoPilot, was specifically trained on open-source code, allowing it to excel in tasks such as generating boilerplate code from simple comments and calling APIs based on examples it has seen in the past. The one-shot prediction accuracy of these models has reached levels comparable to explicitly-trained language models. Unfortunately, GPT-4 is a black box – we don’t know how much training data or the number of parameters were used to develop the model.

As wonderful as LLM AI-powered code generation tools can appear, they’re sometimes unpredictable and rely heavily on the quality of prompts (cue posting of new prompt “engineering” gigs on LinkedIn). As they are essentially statistical models of textual patterns, they may generate code that appears reasonable but is fundamentally flawed – or famously hallucinatory.

GPT-3.5, for instance, incorporates human reinforcement learning in the loop, where answers are ranked by humans to yield improved results, as well as training on human-written responses. However, the challenge remains in identifying the subtle mistakes produced by these models, which can lead to unintended consequences, such as the ChatGPT incident involving the German coding company OpenCage. These problems do not go away, no matter how much training data and parameters are used during the training of these models.

Additionally, Large Language Models (LLMs) learn by processing text. But so much learning has absolutely nothing to do with text or language. It’s like learning basketball by reading textbooks vs playing pickup ball games.

Reinforcement learning differs from LLMs by focusing on learning by doing, rather than relying on text. And in the context of unit testing, this process allows the system to iteratively improve and generate tests with higher coverage and better readability, ultimately resulting in a more effective and efficient testing process for developers.

New generative AI for code tools help developers do their jobs more quickly with less effort by synthesizing code. ChatGPT and other LLM approaches have made this kind of generative AI accessible to a broad general audience. Developers are flocking to it.

But too often overlooked are the fundamental differences between generative AI for code tools, and those differences inform which approach is better for your developer tasks. Under the covers, generative AI for code tools fall into two basic categories: transformer-based large language models (e.g., Copilot, ChatGPT), and reinforcement learning-based systems (RL).

The biggest difference between RL and LLMs like Copilot is that Copilot is an interactive code suggestion tool for general code writing (Microsoft calls it an “AI pair programmer”), whereas reinforcement-based learning systems can create code without human help. One RL example is Diffblue Cover which can autonomously write entire suites of fully-functioning unit tests.

Copilot is designed to work with a human developer to deal with tedious and repetitive coding tasks like writing boilerplate and calling into “foreign lands” (3rd party APIs). While you can get Copilot to suggest a unit test for your code, you can’t point it at a million lines of code and come back in a few hours to tens of thousands of unit tests that all compile and run correctly.

That’s because they are inherently different things, or tools, where the developer needs to first consider what she wants to accomplish before choosing either approach.

Reinforcement learning can be a powerful tool for solving complex problems. RL systems run quickly on a laptop because they use pre-collected data to learn, making it particularly effective on large data sets.

Historically the cost of doing online training (making an AI model interact with the real world to learn) was prohibitive. The new LLMs from OpenAI, Google, and Meta sucked much of that expense out of the equation by absorbing that cost. But developers still need to review the generated code!

Take creating unit tests, for example. Copilot will write one test and then require some “prompt engineering” to coax it into writing a different test, plus the time to edit the resulting suggestions and get them to compile and run properly. RL, on the other hand, can understand the impact of each action, potentially receiving a reward and reshaping its parameters in order to maximize those rewards. This way, the model learns what actions yield rewards and defines the policy — the strategy — it will follow to maximize them. And hey, presto! It yields unit tests that compile and run autonomously.

Code completion tools based on transformer models predict the next word or token in a sequence by analyzing the given text. These transformer models have evolved dramatically and put generative AI in news headlines around the world. Their output looks like magic. GPT-2, one of the first open-source models released in February 2019. Since then, the number of parameters in these models has scaled dramatically, from 1.5 billion in GPT-2 to 175 billion in GPT-3.5, released in November 2022.

OpenAI Codex, a model with approximately 5 billion parameters used in GitHub CoPilot, was specifically trained on open-source code, allowing it to excel in tasks such as generating boilerplate code from simple comments and calling APIs based on examples it has seen in the past. The one-shot prediction accuracy of these models has reached levels comparable to explicitly-trained language models. Unfortunately, GPT-4 is a black box – we don’t know how much training data or the number of parameters were used to develop the model.

As wonderful as LLM AI-powered code generation tools can appear, they’re sometimes unpredictable and rely heavily on the quality of prompts (cue posting of new prompt “engineering” gigs on LinkedIn). As they are essentially statistical models of textual patterns, they may generate code that appears reasonable but is fundamentally flawed – or famously hallucinatory.

GPT-3.5, for instance, incorporates human reinforcement learning in the loop, where answers are ranked by humans to yield improved results, as well as training on human-written responses. However, the challenge remains in identifying the subtle mistakes produced by these models, which can lead to unintended consequences, such as the ChatGPT incident involving the German coding company OpenCage. These problems do not go away, no matter how much training data and parameters are used during the training of these models.

Additionally, Large Language Models (LLMs) learn by processing text. But so much learning has absolutely nothing to do with text or language. It’s like learning basketball by reading textbooks vs playing pickup ball games.

Reinforcement learning differs from LLMs by focusing on learning by doing, rather than relying on text. And in the context of unit testing, this process allows the system to iteratively improve and generate tests with higher coverage and better readability, ultimately resulting in a more effective and efficient testing process for developers.