Implement RAG Using Weaviate, LangChain4j, and LocalAI

In this blog, you will learn how to implement Retrieval Augmented Generation (RAG) using Weaviate, LangChain4j, and LocalAI. This implementation allows you to ask questions about your documents using natural language. Enjoy!

1. Introduction

In the previous post, Weaviate was used as a vector database to perform a semantic search. The source documents are two Wikipedia documents: the discography of Bruce Springsteen and the list of songs recorded by him. What makes these documents interesting is that they contain facts, mainly in table format. Parts of these documents were converted to Markdown to obtain a better representation, and the Markdown files were embedded in Weaviate collections. The result was amazing: every question asked returned the correct answer, that is, the correct segment. You still needed to extract the answer from the segment yourself, but this was quite easy.

Can this last step be solved by providing the Weaviate search results to an LLM (Large Language Model) with the right prompt? Will the LLM be able to extract the correct answers to the questions?

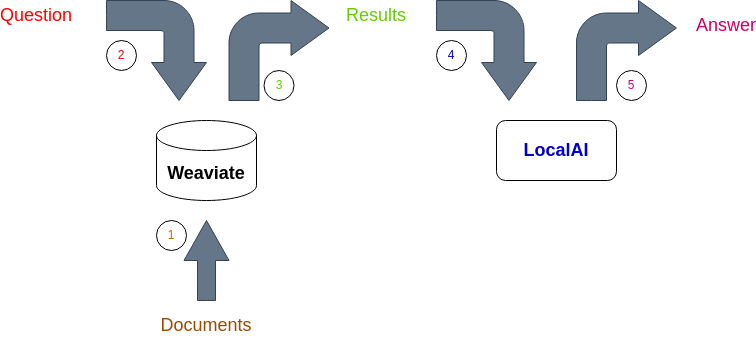

The setup, visualized in the figure above, works as follows:

- The documents are embedded and stored in Weaviate;

- The question is embedded and a semantic search is performed using Weaviate;

- Weaviate returns the semantic search results;

- The result is added to a prompt and sent, via LangChain4j, to an LLM running in LocalAI;

- The LLM returns the answer to the question.
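The steps above can be sketched in plain Java by replacing the two external systems with functions. This is a hypothetical illustration, not the article's code: `RagFlowSketch` and the stub retriever and generator are assumptions, standing in for the Weaviate search and the LocalAI model that section 4 calls through the Weaviate client and LangChain4j.

```java
import java.util.function.Function;

// Hypothetical sketch of the RAG flow: the retriever stands in for the
// Weaviate nearText search, the generator for the LocalAI chat model.
class RagFlowSketch {

    private final Function<String, String> retriever; // question -> retrieved data as text
    private final Function<String, String> generator; // prompt -> answer

    RagFlowSketch(Function<String, String> retriever, Function<String, String> generator) {
        this.retriever = retriever;
        this.generator = generator;
    }

    // Combines the question and the retrieved data into a single prompt
    static String buildPrompt(String question, String data) {
        return "Answer the following question: " + question + "\n"
                + "Use the following data to answer the question: " + data;
    }

    String ask(String question) {
        String data = retriever.apply(question);     // semantic search returns the best segment
        String prompt = buildPrompt(question, data); // the result is added to a prompt
        return generator.apply(prompt);              // the LLM returns the answer
    }

    public static void main(String[] args) {
        // Stubs so that the flow runs without Weaviate or LocalAI
        RagFlowSketch rag = new RagFlowSketch(
                question -> "song=Adam Raised a Cain, originalRelease=Darkness on the Edge of Town",
                prompt -> prompt.contains("Darkness on the Edge of Town")
                        ? "Darkness on the Edge of Town"
                        : "I don't know");
        // prints: Darkness on the Edge of Town
        System.out.println(rag.ask("On which album was \"Adam Raised a Cain\" originally released?"));
    }
}
```

Keeping retrieval and generation behind small functions like this makes it easy to swap the stubs for the real Weaviate and LocalAI calls, or to test the prompt construction in isolation.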

Weaviate also supports RAG, so why bother using LocalAI and LangChain4j? Unfortunately, Weaviate does not support integration with LocalAI and only cloud LLMs can be used. If your documents contain sensitive information or information you do not want to send to a cloud-based LLM, you need to run a local LLM and this can be done using LocalAI and LangChain4j.

If you want to run the examples in this blog, you first need to follow the steps of the previous blog.

The sources used in this blog can be found on GitHub.

2. Prerequisites

The prerequisites for this blog are:

- Basic knowledge of embedding and vector stores;

- Basic Java knowledge, Java 21 is used;

- Basic knowledge of Docker;

- Basic knowledge of LangChain4j;

- You need Weaviate and the documents need to be embedded; see the previous blog on how to do so;

- You need LocalAI if you want to run the examples; see a previous blog on how to use LocalAI. Version 2.2.0 is used for this blog;

- If you want to learn more about RAG, read this blog.

3. Create the Setup

Before getting started, there is some setup to do.

3.1 Setup LocalAI

LocalAI must be running and configured. How to do so is explained in the blog Running LLM’s Locally: A Step-by-Step Guide.

3.2 Setup Weaviate

Weaviate must be started. The only difference from the Weaviate blog is that it runs on port 8081 instead of port 8080, because LocalAI already runs on port 8080.

Start the compose file from the root of the repository.

```shell
$ docker compose -f docker/compose-embed-8081.yaml up
```

Run class EmbedMarkdown to embed the documents (change the port to 8081!). Three collections are created:

- CompilationAlbum: a list of all compilation albums of Bruce Springsteen;

- Song: a list of all songs by Bruce Springsteen;

- StudioAlbum: a list of all studio albums of Bruce Springsteen.
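With LocalAI on port 8080 and Weaviate on port 8081, a quick sanity check is to call both services before running the examples. The sketch below uses only the JDK `HttpClient`; it assumes Weaviate's readiness endpoint `/v1/.well-known/ready` and LocalAI's OpenAI-compatible `/v1/models` endpoint, and `ServiceCheck` is a hypothetical helper name.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper that checks whether LocalAI and Weaviate are reachable
class ServiceCheck {

    private static final HttpClient CLIENT = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(2))
            .build();

    // True if a GET on the URL answers with HTTP 200; false on other statuses or connection errors
    static boolean isUp(String url) {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(2))
                .GET()
                .build();
        try {
            HttpResponse<Void> response = CLIENT.send(request, HttpResponse.BodyHandlers.discarding());
            return response.statusCode() == 200;
        } catch (IOException e) {
            return false; // connection refused, timeout, ...
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        // Weaviate readiness endpoint on the remapped port 8081 (assumed path)
        System.out.println("Weaviate (8081): " + isUp("http://localhost:8081/v1/.well-known/ready"));
        // LocalAI's OpenAI-compatible model list on port 8080 (assumed path)
        System.out.println("LocalAI  (8080): " + isUp("http://localhost:8080/v1/models"));
    }
}
```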

4. Implement RAG

4.1 Semantic Search

The first part of the implementation is based on the semantic search implementation of class SearchCollectionNearText. It assumes that you know which collection (argument className) to search in.

In the previous post, you saw that, strictly speaking, you do not need to know which collection to search. However, knowing it makes the implementation a bit easier for now, and the results are the same.

The code wraps the question in a NearTextArgument, so that Weaviate embeds the question and searches for the closest segment. The search is performed with the GraphQL API of Weaviate.
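The query below requests Weaviate's `_additional` fields `certainty` and `distance`, and `nearText` returns the objects with the smallest distance to the question. As the code comment notes, `certainty` only exists for the cosine metric. A minimal sketch of how the two relate, assuming Weaviate's definitions of cosine distance as 1 minus the cosine similarity (range 0 to 2) and of certainty as 1 minus half the distance; the vectors are made-up toy values, not real embeddings, and `CosineSketch` is a hypothetical name.

```java
import java.util.Locale;

// Sketch of the cosine metric as assumed in the lead-in
class CosineSketch {

    // Cosine distance = 1 - cosine similarity; 0 means same direction, 2 means opposite
    static double cosineDistance(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("vectors must have the same dimension");
        }
        double dot = 0.0, normA = 0.0, normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return 1.0 - dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Certainty rescales the cosine distance to [0, 1]; 1 means identical direction
    static double certainty(double cosineDistance) {
        return 1.0 - cosineDistance / 2.0;
    }

    public static void main(String[] args) {
        // Toy 3-dimensional "embeddings"; real embeddings typically have hundreds of dimensions
        double[] question = {0.1, 0.9, 0.2};
        double[] segment = {0.2, 0.8, 0.1};
        double d = cosineDistance(question, segment);
        System.out.printf(Locale.ROOT, "distance=%.4f certainty=%.4f%n", d, certainty(d));
        // prints: certainty(0.49303377)=0.7534831
        System.out.printf(Locale.ROOT, "certainty(0.49303377)=%.7f%n", certainty(0.49303377));
    }
}
```

Applied to the `distance` of 0.49303377 in the response shown in section 6.1, the formula gives back the reported certainty of about 0.7535.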

```java
private static void askQuestion(String className, Field[] fields, String question, String extraInstruction) {
    Config config = new Config("http", "localhost:8081");
    WeaviateClient client = new WeaviateClient(config);

    // Request the _additional metadata next to the collection's own fields
    Field additional = Field.builder()
            .name("_additional")
            .fields(Field.builder().name("certainty").build(), // only supported if distance==cosine
                    Field.builder().name("distance").build()   // always supported
            ).build();
    Field[] allFields = Arrays.copyOf(fields, fields.length + 1);
    allFields[fields.length] = additional;

    // Weaviate embeds the question text and searches for the nearest objects
    NearTextArgument nearText = NearTextArgument.builder()
            .concepts(new String[]{question})
            .build();

    Result<GraphQLResponse> result = client.graphQL().get()
            .withClassName(className)
            .withFields(allFields)
            .withNearText(nearText)
            .withLimit(1) // only the best matching segment is used
            .run();

    if (result.hasErrors()) {
        System.out.println(result.getError());
        return;
    }
    ...
```

4.2 Create Prompt

The result of the semantic search needs to be fed to the LLM together with the question itself. A prompt is created that instructs the LLM to answer the question using the result of the semantic search. The prompt also has an option to add extra instructions; what to do with them is discussed later on.

```java
private static String createPrompt(String question, String inputData, String extraInstruction) {
    return "Answer the following question: " + question + "\n" +
            extraInstruction + "\n" +
            "Use the following data to answer the question: " + inputData;
}
```

4.3 Use LLM

The last thing to do is to feed the prompt to the LLM and print the question and answer to the console.

```java
private static void askQuestion(String className, Field[] fields, String question, String extraInstruction) {
    ...
    // LocalAI runs on port 8080; temperature 0.0 minimizes randomness in the answer
    ChatLanguageModel model = LocalAiChatModel.builder()
            .baseUrl("http://localhost:8080")
            .modelName("lunademo")
            .temperature(0.0)
            .build();

    String answer = model.generate(createPrompt(question, result.getResult().getData().toString(), extraInstruction));
    System.out.println(question);
    System.out.println(answer);
}
```

4.4 Questions

The questions are the same as in the previous posts. Each one invokes the code above via askQuestion.

```java
public static void main(String[] args) {
    askQuestion(Song.NAME, Song.getFields(), "on which album was \"adam raised a cain\" originally released?", "");
    askQuestion(StudioAlbum.NAME, StudioAlbum.getFields(), "what is the highest chart position of \"Greetings from Asbury Park, N.J.\" in the US?", "");
    askQuestion(CompilationAlbum.NAME, CompilationAlbum.getFields(), "what is the highest chart position of the album \"tracks\" in canada?", "");
    askQuestion(Song.NAME, Song.getFields(), "in which year was \"Highway Patrolman\" released?", "");
    askQuestion(Song.NAME, Song.getFields(), "who produced \"all or nothin' at all?\"", "");
}
```

The complete source code can be viewed here.

5. Results

Run the code and the result is the following:

- On which album was “Adam Raised a Cain” originally released?

The album “Darkness on the Edge of Town” was originally released in 1978, and the song “Adam Raised a Cain” was included on that album.

- What is the highest chart position of “Greetings from Asbury Park, N.J.” in the US?

The highest chart position of “Greetings from Asbury Park, N.J.” in the US is 60.

- What is the highest chart position of the album “Tracks” in Canada?

Based on the provided data, the highest chart position of the album “Tracks” in Canada is -. This is because the data does not include any Canadian chart positions for this album.

- In which year was “Highway Patrolman” released?

The song “Highway Patrolman” was released in 1982.

- Who produced “all or nothin’ at all?”

The song “All or Nothin’ at All” was produced by Bruce Springsteen, Roy Bittan, Jon Landau, and Chuck Plotkin.

All questions are answered correctly. The most important work was done in the previous post: embedding the documents in the right way made it possible to find the correct segments. When an LLM is fed the correct data, it is able to extract the answer to the question.

6. Caveats

During the implementation, I ran into some strange behavior that is important to be aware of when you start implementing your own use case.

6.1 Format of Weaviate Results

The Weaviate response contains a GraphQLResponse object, something like the following:

```text
GraphQLResponse(
  data={
    Get={
      Songs=[
        {_additional={certainty=0.7534831166267395, distance=0.49303377},
         originalRelease=Darkness on the Edge of Town,
         producers=Jon Landau Bruce Springsteen Steven Van Zandt (assistant),
         song="Adam Raised a Cain", writers=Bruce Springsteen, year=1978}
      ]
    }
  },
  errors=null)
```

In the code, only the data part is added to the prompt.

```java
String answer = model.generate(createPrompt(question, result.getResult().getData().toString(), extraInstruction));
```

What happens when you add the response as-is to the prompt?

```java
String answer = model.generate(createPrompt(question, result.getResult().toString(), extraInstruction));
```

Running the code returns a wrong answer for question 3 and unnecessary additional information for question 4. The other questions are answered correctly.

- What is the highest chart position of the album “Tracks” in Canada?

Based on the provided data, the highest chart position of the album “Tracks” in Canada is 50.

- In which year was “Highway Patrolman” released?

Based on the provided GraphQLResponse, “Highway Patrolman” was released in 1982.

who produced “all or nothin’ at all?”
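The experiment above shows that the way the search result is serialized into the prompt influences the answer. One option would be to pass only the object properties and leave out the `_additional` metadata; whether this improves the answers would need to be tested with the same questions. The hypothetical helper below sketches this, assuming `result.getResult().getData()` yields nested maps and lists shaped like the output printed above. `PromptDataFormatter` and `toPromptText` are illustrative names, not part of the Weaviate client.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper: flattens {Get={Class=[{field=value, ...}]}} into "field: value" lines
class PromptDataFormatter {

    static String toPromptText(Object data) {
        if (!(data instanceof Map<?, ?> root)) {
            return "";
        }
        if (!(root.get("Get") instanceof Map<?, ?> classes)) {
            return "";
        }
        StringJoiner objectsOut = new StringJoiner("\n\n"); // blank line between objects
        for (Object objects : classes.values()) {
            if (!(objects instanceof List<?> list)) {
                continue;
            }
            for (Object obj : list) {
                if (!(obj instanceof Map<?, ?> fields)) {
                    continue;
                }
                StringJoiner fieldsOut = new StringJoiner("\n");
                for (Map.Entry<?, ?> field : fields.entrySet()) {
                    if ("_additional".equals(field.getKey())) {
                        continue; // drop the certainty/distance metadata
                    }
                    fieldsOut.add(field.getKey() + ": " + field.getValue());
                }
                objectsOut.add(fieldsOut.toString());
            }
        }
        return objectsOut.toString();
    }

    public static void main(String[] args) {
        // Mirrors the response printed above
        Map<String, Object> song = new LinkedHashMap<>();
        song.put("_additional", Map.of("certainty", 0.7534831166267395, "distance", 0.49303377));
        song.put("originalRelease", "Darkness on the Edge of Town");
        song.put("producers", "Jon Landau Bruce Springsteen Steven Van Zandt (assistant)");
        song.put("song", "Adam Raised a Cain");
        song.put("writers", "Bruce Springsteen");
        song.put("year", 1978);
        Object data = Map.of("Get", Map.of("Songs", List.of(song)));
        System.out.println(toPromptText(data));
    }
}
```

In `askQuestion`, the call would then become `createPrompt(question, PromptDataFormatter.toPromptText(result.getResult().getData()), extraInstruction)`.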

6.2 Format of Prompt

The code contains functionality to add extra instructions to the prompt. As you have probably noticed, this functionality is not used: every call passes an empty string. Let’s see what happens when you remove the extra-instruction line from the prompt. The createPrompt method becomes the following (the unused parameter is kept so that only a minor code change is needed).

```java
private static String createPrompt(String question, String inputData, String extraInstruction) {
    return "Answer the following question: " + question + "\n" +
            "Use the following data to answer the question: " + inputData;
}
```

Running the code adds some extra information to the answer to question 3, which is not entirely correct. It is correct that the album has chart positions for the United States, United Kingdom, Germany, and Sweden, but it is not correct that the album reached the top 10 in the UK and US charts. All other questions are answered correctly.

- What is the highest chart position of the album “Tracks” in Canada?

Based on the provided data, the highest chart position of the album “Tracks” in Canada is not specified. The data only includes chart positions for other countries such as the United States, United Kingdom, Germany, and Sweden. However, the album did reach the top 10 in the UK and US charts.
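Because every call in `main` passes an empty string as `extraInstruction`, the two prompt versions differ by a single empty line. The sketch below makes this visible by building both variants side by side; `PromptVariants` and its method names are hypothetical, and the data string is a placeholder for the search result.

```java
// Compares the prompt of section 4.2 with the variant of section 6.2
class PromptVariants {

    // Section 4.2: the extra instruction always occupies its own line
    static String withInstructionLine(String question, String inputData, String extraInstruction) {
        return "Answer the following question: " + question + "\n" +
                extraInstruction + "\n" +
                "Use the following data to answer the question: " + inputData;
    }

    // Section 6.2: the extra-instruction line is removed
    static String withoutInstructionLine(String question, String inputData) {
        return "Answer the following question: " + question + "\n" +
                "Use the following data to answer the question: " + inputData;
    }

    public static void main(String[] args) {
        String question = "what is the highest chart position of the album \"tracks\" in canada?";
        String data = "<search result>"; // placeholder
        String a = withInstructionLine(question, data, "");
        String b = withoutInstructionLine(question, data);
        // prints: length difference: 1
        System.out.println("length difference: " + (a.length() - b.length()));
        // prints: equal after collapsing the blank line: true
        System.out.println("equal after collapsing the blank line: " + a.replace("\n\n", "\n").equals(b));
    }
}
```

In this experiment, a single blank line was therefore the only change in the prompt that coincided with the different answer to question 3.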

Using an LLM remains somewhat brittle: you cannot always trust the answer it gives. Adjusting the prompt appears to help minimize hallucinations, but it is no guarantee. It is therefore important to collect feedback from your users to identify when an LLM seems to hallucinate, so that you can improve the responses. Fiddler has written an interesting blog that addresses this kind of issue.
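As a complement to user feedback, a crude automatic check can flag answers for review. The hypothetical sketch below, which is not taken from Fiddler's blog or any library, lists numbers that appear in the answer but nowhere in the retrieved data. It would flag an invented "top 10", but it misses a wrong number that happens to occur elsewhere in the data, so it is a heuristic rather than a hallucination detector. The data in `main` is made up for illustration.

```java
import java.util.LinkedHashSet;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical heuristic: numbers in the answer that never occur in the retrieved data
class NumberGroundingCheck {

    private static final Pattern NUMBER = Pattern.compile("\\d+");

    static Set<String> ungroundedNumbers(String answer, String retrievedData) {
        Set<String> inData = new LinkedHashSet<>();
        Matcher dataMatcher = NUMBER.matcher(retrievedData);
        while (dataMatcher.find()) {
            inData.add(dataMatcher.group());
        }
        Set<String> suspicious = new LinkedHashSet<>();
        Matcher answerMatcher = NUMBER.matcher(answer);
        while (answerMatcher.find()) {
            if (!inData.contains(answerMatcher.group())) {
                suspicious.add(answerMatcher.group()); // candidate for review
            }
        }
        return suspicious;
    }

    public static void main(String[] args) {
        // Toy data, not real chart positions
        String data = "album=Example Album, US=27, UK=50";
        String answer = "Example Album reached the top 10 in the UK and US charts.";
        // prints: [10]
        System.out.println(ungroundedNumbers(answer, data));
    }
}
```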

7. Conclusion

In this blog, you learned how to implement RAG using Weaviate, LangChain4j, and LocalAI. The results are quite amazing. Embedding documents the right way, filtering the results, and feeding them to an LLM is a very powerful combination that can be used in many use cases.
