Implementing AI-Driven Edge Insights for Fleet Technology

In today’s tech-driven world, fleet management has become a critical part of various industries. Whether it’s tracking vehicles, optimizing routes, or monitoring vehicle health, developers are playing a pivotal role in building solutions for fleet technology. In this article, we’ll walk you through the essential steps to create effective fleet technology solutions that can help streamline operations, increase efficiency, and enhance safety.

Before we dive in, let’s look at the background of connected fleet vehicles, since it explains why we’re developing innovative AI fleet solutions.

The global fleet industry is huge: it has an installed base of at least 120M vehicles, and 22M new vehicles are expected to ship worldwide by the end of 2023. As global fleets expand, the number of vehicles that use connected solutions is also growing rapidly.

Commercial fleets are currently estimated to grow by 230% globally by 2032.

Connected technologies have long existed in the form of GPS and cloud connectivity. Today, fleet telematics built on cameras, AI, and central in-vehicle computers are in high demand.

Looking ahead, we expect a digital vehicle that hosts a variety of integrated, customizable, and increasingly complex solutions.

What’s driving this change? There are several factors:

- The growing use of artificial intelligence technology.

- The desire for better analytic insights when making decisions.

- Technology advancements that make solutions more accessible.

- The explosive growth of e-commerce.

Many features that previously required multiple, separate control systems can now all be managed from a central in-vehicle computer. Possibilities include:

- 360 View, which lets the driver easily see what is going on in and around the vehicle and gives the fleet operator post-incident evidence records

- Navigation, route planning, and route optimization, so the driver receives better, optimized routes; combined with cargo management and with fleet-wide dispatching and planning optimization for fleet operators, this saves fuel and operational costs.

- Cargo management, giving the driver a view of what is in the cargo hold, how much of it there is, what condition it’s in, and where it is when it’s time to make deliveries

- Driver management, whereby fleet managers can help coach drivers

- Idle monitoring, so fleet managers can analyze data to check whether the most efficient schedules are being planned and whether a vehicle has too much downtime that could be used for other tasks

- Predictive maintenance, which gives fleet managers and drivers good visibility into a vehicle’s maintenance schedule, along with notifications. It also minimizes unexpected breakdowns and costly repairs from missed appointments

- Device manageability, where telemetry enables the monitoring of device health, over-the-air updates, and recovery from device failure.
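To make the idle-monitoring idea concrete, here is a minimal Python sketch that computes idle time from periodic telemetry samples. The `Sample` record, its fields, and the 1 km/h "stationary" threshold are hypothetical assumptions for illustration, not part of EIF; a real deployment would use whatever telemetry the vehicle actually reports.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical telemetry reading from a vehicle."""
    ts: float            # seconds since the epoch
    ignition_on: bool
    speed_kmh: float


def _intervals(samples: list[Sample]):
    """Yield (sample, seconds until the next sample) in time order."""
    ordered = sorted(samples, key=lambda s: s.ts)
    for current, nxt in zip(ordered, ordered[1:]):
        yield current, nxt.ts - current.ts


def idle_seconds(samples: list[Sample], stationary_kmh: float = 1.0) -> float:
    """Time with the engine on while the vehicle is (nearly) stationary.

    Each interval is attributed to the state reported at its start.
    """
    return float(sum(dt for s, dt in _intervals(samples)
                     if s.ignition_on and s.speed_kmh < stationary_kmh))


def idle_ratio(samples: list[Sample], stationary_kmh: float = 1.0) -> float:
    """Share of engine-on time spent idling (0.0 if the engine never ran)."""
    engine_on = sum(dt for s, dt in _intervals(samples) if s.ignition_on)
    return idle_seconds(samples, stationary_kmh) / engine_on if engine_on else 0.0


if __name__ == "__main__":
    trip = [
        Sample(0, True, 0.0),      # engine on, waiting at the depot
        Sample(60, True, 40.0),    # driving
        Sample(180, True, 0.0),    # engine on, parked at a delivery stop
        Sample(300, False, 0.0),   # engine off
    ]
    print(idle_seconds(trip), idle_ratio(trip))  # → 180.0 0.6
```

A fleet manager would compare this ratio across vehicles or days; a persistently high value is the kind of downtime the feature is meant to surface.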

Let’s see how we can implement these features with Edge Insights for Fleet (EIF).

Edge Insights for Fleet (EIF) And How It Works

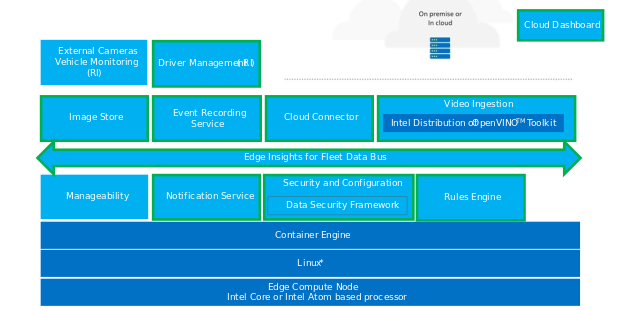

Edge Insights for Fleet (EIF) is an end-to-end, cloud-native, configurable reference middleware framework built from microservices and designed specifically for use in fleet vehicles.


Edge Insights for Fleet offers a curated collection of pre-integrated components designed to streamline the creation and deployment of solutions tailored specifically to the fleet and commercial vehicle sector.

The package is primarily intended for deployment on board the individual fleet vehicle itself, which we also call an “edge node.”

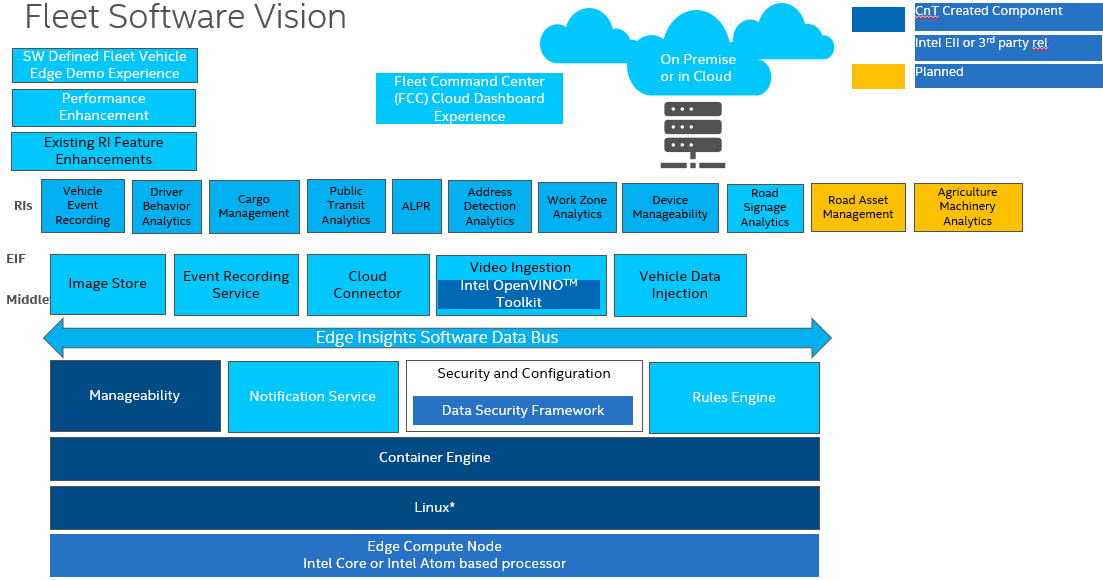

Figure 1: Architecture Diagram – Fleet Software Vision

Modules and Services

EIF aims to accelerate time to market (TTM) by using containers and an optimized analytics framework combined with the OpenVINO™ toolkit. Its well-defined microservices shorten the learning curve for developers, add flexibility for system integrators, and speed up the adoption of new use cases.

The framework also lets you build an end-to-end solution that includes a reference cloud dashboard and can manage the device itself or receive device-specific telemetry, not just vehicle sensor data. All of this runs on an agnostic platform that can be used across a variety of fleet vehicles, geographies, and end-customer configurations.

Fleet Management Use Case and Reference Implementation

To get a better understanding of how this framework can be used, let’s look at some existing fleet management use cases and reference implementations.

Several use cases and reference implementations are available that give developers a quick start on building innovative applications for the fleet industry, including:

- Driver Behavior Analytics

- Vehicle Event Recording

- Cargo Management

- Public Transit Analytics

- Automatic License Plate Recognition

- Address Detection

- Device Manageability

- Work Zone Analytics

- Road Sign Analytics

- Road Asset Management

- Agriculture Machine Analytics

Let’s explore Driver Behavior Analytics and see how it can be implemented.

Driver Behavior Analytics Reference Implementation

By using advanced driver behavior monitoring technology, fleets can gain real-time insights into how their drivers operate vehicles, leading to reduced operational costs and improved safety.

It uses video analytics and AI models whose inference results are sent to a rules engine that the system integrator can customize. This gives the system integrator the flexibility to define the actions their end customer requires.

You can download this reference implementation, including its source code, from the Intel Developer Catalog. It is open source; follow the “Get Started” guide in the developer documentation to download, install, build, and run it.

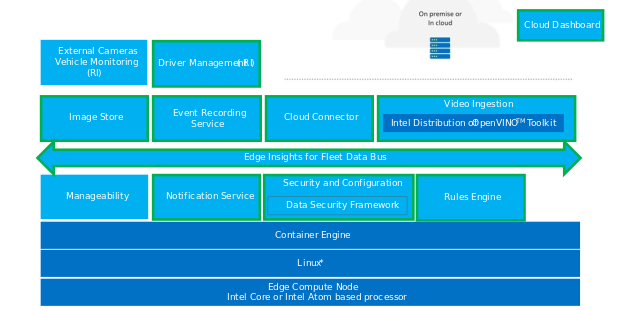

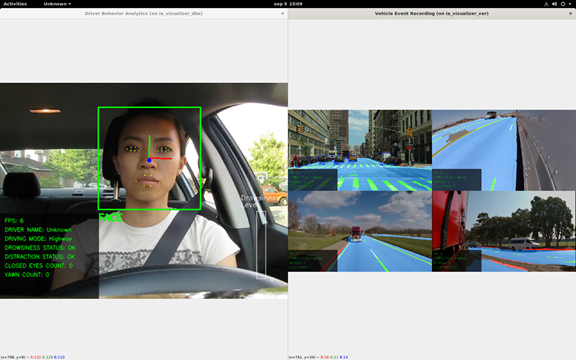

Figure 2: Architecture Diagram – Driver Behavior Monitoring

The following steps take place before any action is issued:

- Video analytics use cases (such as driver monitoring) are packaged in a ‘video ingestion microservice,’ which in turn runs in a container that performs both video ingestion and analytics. AI models (added by the system integrator or integrated sales vendor) are deployed through UDFs (user-defined functions), which let users plug in their own AI models to build custom use cases such as driver monitoring.

- Driver monitoring uses AI models that process biometric data, detecting faces and facial landmarks to determine whether the driver shows signs of sleepiness, for example through eye tracking or facial position. The use case also deploys an AI model that estimates head position to determine whether the driver is looking away from the road. This output is what gets analyzed.

- Next, the inference output from the ‘video ingestion’ service is sent to the rules engine.

- The rules engine is a microservice that lets system integrators define custom rules based on their end customer’s requirements, encoding their business logic and how they want to handle the inference results. The rules defined for driver monitoring issue different actions depending on the level of drowsiness and distraction, for example visual or cloud events as warnings, or record requests for critical detections.

- The output from the rules engine can then be sent to the ‘notification service,’ which issues the specific request to the appropriate service. For example, cloud alerts can go to the ‘cloud connector,’ where they can be viewed in the Kibana dashboard, and record requests can be sent to the ‘event recording’ microservice, which records a video of the detected event. The video is then uploaded to the cloud, where a fleet operator can view it.
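As a rough illustration of how such rules might map inference results to actions, the Python sketch below evaluates a set of rules against one inference message and routes the resulting actions in the way the notification service is described above. Everything here is hypothetical: the `Inference` fields, the 0.0 to 1.0 drowsiness and distraction scores, the thresholds, and the "in-vehicle display" route are assumptions, not the reference implementation's actual rules engine or message format.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Action(Enum):
    VISUAL_WARNING = "visual_warning"   # warn the driver inside the cab
    CLOUD_EVENT = "cloud_event"         # alert viewed on the cloud dashboard
    RECORD_REQUEST = "record_request"   # ask for a video clip of the event


@dataclass(frozen=True)
class Inference:
    """Hypothetical message from the video ingestion service."""
    drowsiness: float   # 0.0 = fully alert, 1.0 = very drowsy
    distraction: float  # 0.0 = eyes on the road, 1.0 = looking away


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[Inference], bool]
    actions: tuple[Action, ...]


# Example rules a system integrator might define for one end customer.
RULES = (
    Rule("severe_drowsiness", lambda r: r.drowsiness >= 0.8,
         (Action.VISUAL_WARNING, Action.CLOUD_EVENT, Action.RECORD_REQUEST)),
    Rule("mild_drowsiness", lambda r: 0.5 <= r.drowsiness < 0.8,
         (Action.VISUAL_WARNING,)),
    Rule("distraction", lambda r: r.distraction >= 0.6,
         (Action.VISUAL_WARNING, Action.CLOUD_EVENT)),
)

# Notification service routing: which service handles each action.
ROUTES = {
    Action.VISUAL_WARNING: "in-vehicle display",  # hypothetical target
    Action.CLOUD_EVENT: "cloud connector",
    Action.RECORD_REQUEST: "event recording",
}


def evaluate(result: Inference, rules: tuple[Rule, ...] = RULES) -> list[Action]:
    """Collect the actions of every rule that fires, without duplicates."""
    actions: list[Action] = []
    for rule in rules:
        if rule.condition(result):
            for action in rule.actions:
                if action not in actions:
                    actions.append(action)
    return actions


def dispatch(actions: list[Action]) -> list[tuple[str, str]]:
    """Pair each action with the service the notification service would call."""
    return [(ROUTES[action], action.value) for action in actions]


if __name__ == "__main__":
    for target, action in dispatch(evaluate(Inference(drowsiness=0.9, distraction=0.7))):
        print(f"{target}: {action}")
```

Keeping rules as data (a condition plus a list of actions) is what lets each system integrator swap in their own business logic without touching the ingestion or notification code.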

Model Description

- Head Pose: Estimates the position of each head in the video frame.

- Facial Landmarks: Determines the facial landmarks of each detected face.

- Face Detection: Detects the faces in the video frame.

- Face Re-identification: Recognizes people from the detected faces.
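The per-frame analytics that a driver-monitoring UDF performs on these model outputs could look roughly like the Python sketch below. It swaps in the eye aspect ratio (EAR), a common blink heuristic, for whatever eye-state logic the reference implementation actually uses. It assumes six landmarks per eye in the usual EAR order and head-pose angles in degrees; the class name `DriverMonitorUDF`, its `process` method, and every threshold are hypothetical, not EIF's UDF API, and the real landmark model's points would need to be mapped accordingly.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio from six landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2/p3 lie on the upper lid and p6/p5 on the
    lower lid (p2 above p6, p3 above p5). The ratio drops toward 0 as the eye
    closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def looking_away(yaw_deg: float, pitch_deg: float,
                 max_yaw_deg: float = 30.0, max_pitch_deg: float = 20.0) -> bool:
    """True if the head is turned or tilted beyond the assumed limits."""
    return abs(yaw_deg) > max_yaw_deg or abs(pitch_deg) > max_pitch_deg


class DriverMonitorUDF:
    """Hypothetical UDF-style stage: model outputs in, per-frame metadata out."""

    def __init__(self, ear_closed_threshold: float = 0.2) -> None:
        self.ear_closed_threshold = ear_closed_threshold

    def process(self, left_eye: Sequence[Point], right_eye: Sequence[Point],
                yaw_deg: float, pitch_deg: float) -> Dict[str, object]:
        # Average both eyes so a single noisy landmark matters less.
        ear = (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0
        return {
            "ear": ear,
            "eyes_closed": ear < self.ear_closed_threshold,
            "looking_away": looking_away(yaw_deg, pitch_deg),
        }


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    udf = DriverMonitorUDF()
    print(udf.process(open_eye, open_eye, yaw_deg=40.0, pitch_deg=0.0))
```

The returned dictionary is the kind of per-frame inference output that would then be forwarded to the rules engine.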

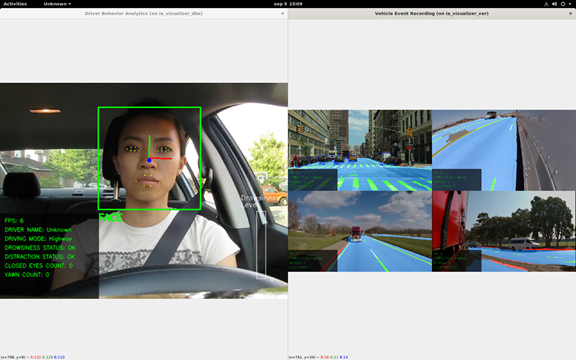

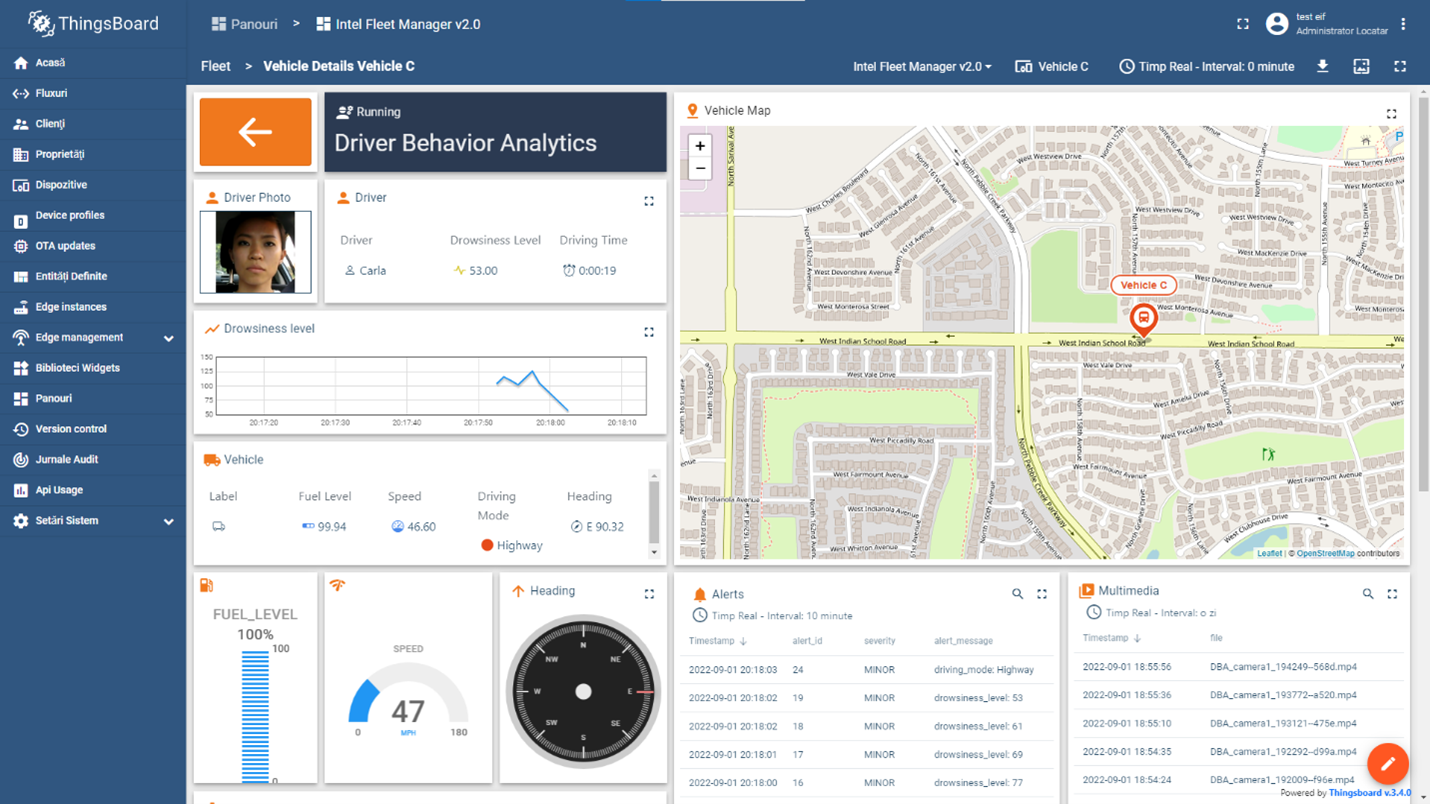

Once the application is set up and the visualizer starts, you can see several data points, including alerts, driver photos, and a map showing the vehicle location. The Visualizer App detects yawns, blinks, drowsiness, and distraction status, and displays the driver’s name.
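Telling a blink apart from drowsiness requires looking across frames rather than at a single frame. One simple approach, sketched below in Python under our own assumptions (a fixed frame rate, a 0.4 s maximum blink, and 1 s of continuous eye closure counting as drowsiness), is to count consecutive frames with closed eyes. The `EyeClosureTracker` class and its thresholds are hypothetical and not taken from the Visualizer App.

```python
from typing import Optional


class EyeClosureTracker:
    """Turns per-frame eyes-closed flags into blink and drowsiness events.

    A closure that ends within blink_max_s counts as a blink; a closure that
    lasts drowsy_after_s or longer raises one "drowsy" event.
    """

    def __init__(self, fps: float = 30.0, blink_max_s: float = 0.4,
                 drowsy_after_s: float = 1.0) -> None:
        self.blink_max_frames = max(1, round(fps * blink_max_s))
        self.drowsy_frames = max(1, round(fps * drowsy_after_s))
        self.closed_run = 0            # consecutive frames with eyes closed
        self.blinks = 0                # total blinks seen so far
        self.drowsy_reported = False   # avoid repeating the alert in one closure

    def update(self, eyes_closed: bool) -> Optional[str]:
        """Feed one frame; return "blink", "drowsy", or None."""
        if eyes_closed:
            self.closed_run += 1
            if self.closed_run >= self.drowsy_frames and not self.drowsy_reported:
                self.drowsy_reported = True
                return "drowsy"
            return None
        # Eyes are open again: a short closure that just ended was a blink.
        event = "blink" if 0 < self.closed_run <= self.blink_max_frames else None
        if event:
            self.blinks += 1
        self.closed_run = 0
        self.drowsy_reported = False
        return event


if __name__ == "__main__":
    tracker = EyeClosureTracker(fps=10)
    flags = [False, True, True, False] + [True] * 12 + [False]
    events = [tracker.update(f) for f in flags]
    print([e for e in events if e])  # → ['blink', 'drowsy']
```

Each call consumes one frame's eyes-closed flag, so at most one "drowsy" event is raised per continuous closure. Yawns could plausibly be tracked the same way with a mouth-opening measure, though that too is our assumption.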

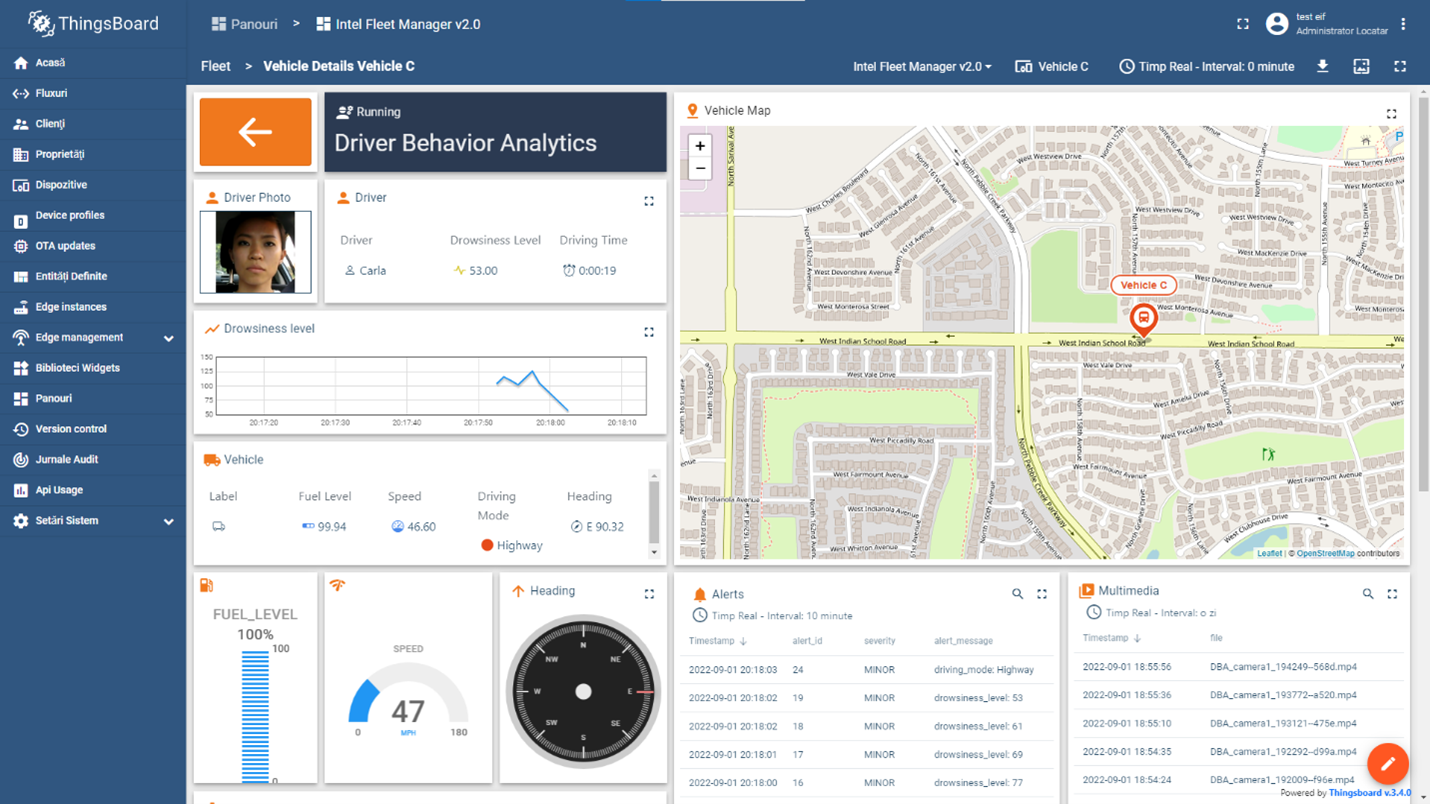

Figure 4: Intel Fleet Manager Dashboard shown in ThingsBoard
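Since the dashboard in Figure 4 runs on ThingsBoard, here is a minimal Python sketch of serializing driver-monitoring results as ThingsBoard telemetry. It assumes ThingsBoard's MQTT device API, in which a device publishes JSON to the `v1/devices/me/telemetry` topic, optionally in a `{"ts": ..., "values": {...}}` form with a millisecond timestamp. We have not confirmed that the reference implementation publishes this way, and the telemetry keys shown are hypothetical.

```python
import json
import time
from typing import Any, Mapping, Optional

# Topic used by ThingsBoard's MQTT device API for time-series telemetry.
TELEMETRY_TOPIC = "v1/devices/me/telemetry"


def build_telemetry(values: Mapping[str, Any], ts_ms: Optional[int] = None) -> str:
    """Serialize values as {"ts": <epoch ms>, "values": {...}} JSON.

    If ts_ms is omitted, the current time is used, so the values are stamped on
    the device at detection time instead of when the server receives them.
    """
    if ts_ms is None:
        ts_ms = int(time.time() * 1000)
    return json.dumps({"ts": ts_ms, "values": dict(values)}, sort_keys=True)


if __name__ == "__main__":
    # Hypothetical driver-monitoring event with the vehicle's position.
    payload = build_telemetry(
        {"driver": "driver-042", "drowsy": True, "lat": 45.54, "lon": -122.96},
        ts_ms=1700000000000,
    )
    print(TELEMETRY_TOPIC, payload)
```

With an MQTT client such as paho-mqtt, the device would typically authenticate with its ThingsBoard access token as the MQTT username and publish the returned string to `TELEMETRY_TOPIC`; check the ThingsBoard documentation for your version before relying on this.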

Summary

Edge Insights for Fleet (EIF) addresses the complexity of running multiple concurrent use cases and lowers the barriers to entry. It is a consolidated fleet solution that lets multiple use cases be managed from a single in-vehicle PC. The Driver Behavior Analytics reference implementation uses OpenVINO™ toolkit plugins to detect and track driver behavior. It can be extended to accept a feed from a network stream (such as an RTSP camera), and its algorithm can be further optimized for better performance.
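As a sketch of the RTSP extension, assume frames are read through OpenCV's `cv2.VideoCapture`, which accepts either a local camera index or a stream URL such as an RTSP address. Because network feeds drop more often than local cameras, a frame generator can reopen the stream after a failed read. The `frames` function, its retry parameters, and the injected `open_capture` factory are our own hypothetical design, not code from the reference implementation.

```python
from __future__ import annotations

import time
from typing import Any, Callable, Iterator, Protocol, Union

Source = Union[int, str]  # camera index or stream URL


class Capture(Protocol):
    """The two cv2.VideoCapture methods this sketch relies on."""
    def read(self) -> tuple[bool, Any]: ...
    def release(self) -> None: ...


def frames(source: Source,
           open_capture: Callable[[Source], Capture],
           max_retries: int = 5,
           retry_delay_s: float = 2.0,
           sleep: Callable[[float], None] = time.sleep) -> Iterator[Any]:
    """Yield frames from a live source, reopening it after failed reads.

    Gives up after max_retries consecutive failures; a successful read resets
    the count.
    """
    failures = 0
    cap = open_capture(source)
    try:
        while True:
            ok, frame = cap.read()
            if ok:
                failures = 0
                yield frame
                continue
            failures += 1
            if failures > max_retries:
                return
            # Drop the broken connection, back off, then reconnect.
            cap.release()
            sleep(retry_delay_s)
            cap = open_capture(source)
    finally:
        cap.release()
```

In practice you would pass `cv2.VideoCapture` as `open_capture` and an `rtsp://` URL as `source`; injecting the factory keeps the retry logic testable without a camera. The generator is meant for live sources: for a video file, reaching the end also reads as a failure and would restart playback.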
