Making Machine Learning More Accessible for Application Developers

Introduction

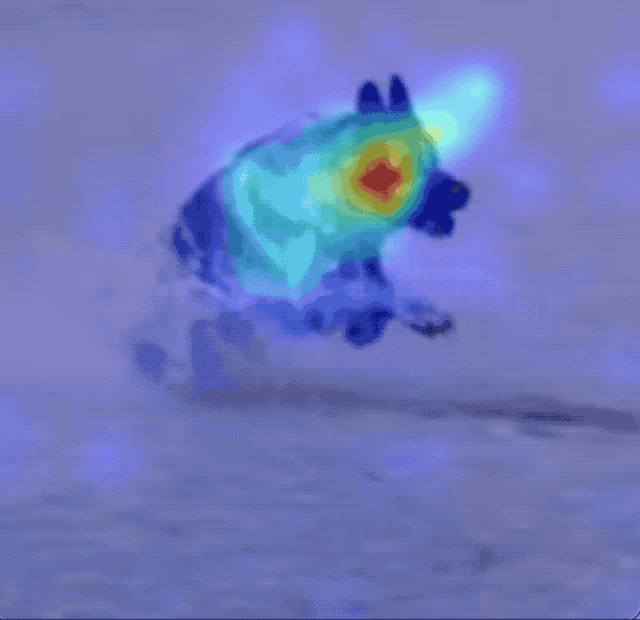

Attempts at hand-crafting algorithms for understanding human-generated content have generally been unsuccessful. For example, it is difficult for a computer to “grasp” the semantic content of an image (e.g., a car, a cat, or a coat) purely by analyzing its low-level pixels. Color histograms and feature detectors worked to a certain extent, but they were rarely accurate enough for most applications.

In the past decade, the combination of big data and deep learning has fundamentally changed the way we approach computer vision, natural language processing, and other machine learning (ML) applications; tasks ranging from spam email detection to realistic text-to-video synthesis have seen incredible strides, with accuracy metrics on specific tasks reaching superhuman levels. A significant positive side effect of these improvements is an increase in the use of embedding vectors, i.e., model artifacts generated by taking an intermediate result within a deep neural network. OpenAI’s docs page gives an excellent overview:

An embedding is a special format of data representation that can be easily utilized by machine learning models and algorithms. The embedding is an information dense representation of the semantic meaning of a piece of text. Each embedding is a vector of floating point numbers, such that the distance between two embeddings in the vector space is correlated with semantic similarity between two inputs in the original format. For example, if two texts are similar, then their vector representations should also be similar.
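To make the quoted definition concrete, here is a minimal sketch of comparing embeddings with cosine similarity, one common similarity measure (others, such as L2 distance, are also widely used). The three-dimensional vectors are invented for illustration; real embedding models output hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings; the values are made up for illustration only.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
car = [0.0, 0.2, 0.95]

print(round(cosine_similarity(cat, kitten), 3))  # high: semantically similar
print(round(cosine_similarity(cat, car), 3))     # low: semantically different
```

Similar inputs should land close together in the vector space, so their similarity score is higher.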

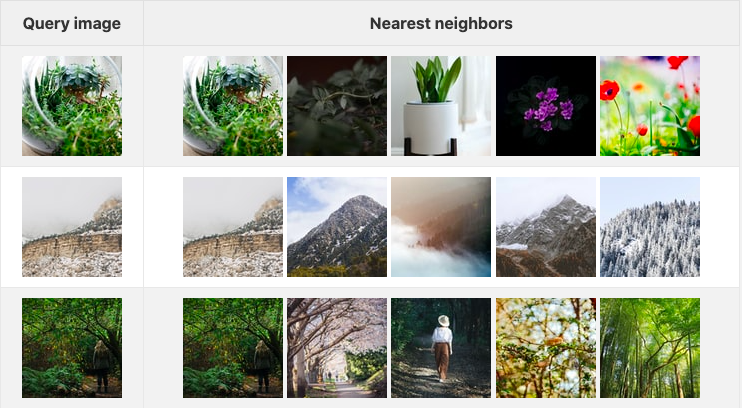

The table below shows three query images along with their corresponding top five images in embedding space (I used the first 1000 images of Unsplash Lite as the dataset):

Training a New Model for Embedding Tasks

On paper, training a new ML model and generating embeddings sounds easy: take the latest and greatest pre-built model, backed by the newest architecture, and train it with some data. Easy, right?

Not so fast. On the surface, using the latest model architecture may seem like an easy way to achieve state-of-the-art results. This, however, could not be further from the truth. Let’s go over some common pitfalls related to training embedding models (these also apply to machine learning models in general):

- Not enough data: Training a new embedding model from scratch without enough data makes it prone to overfitting, i.e., memorizing the training set instead of learning features that generalize. Only the largest global organizations have enough data to make training a new model from scratch worthwhile; others must rely on fine-tuning, a process in which a model already trained on a large amount of data is further trained on a smaller, task-specific dataset.

- Poor hyperparameter selection: Hyperparameters are constants used to control the training process, such as how quickly the model learns (the learning rate) or how much data is used in a single training step (the batch size). Selecting an appropriate set of hyperparameters is extremely important when fine-tuning a model, as small changes to specific values can lead to vastly different results. Recent research has also shown that training the same model from scratch with an improved training procedure can raise ImageNet-1k accuracy by over 5% (that’s a lot).

-

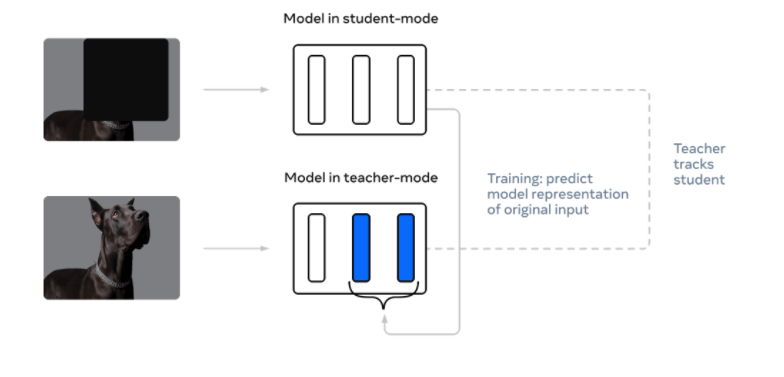

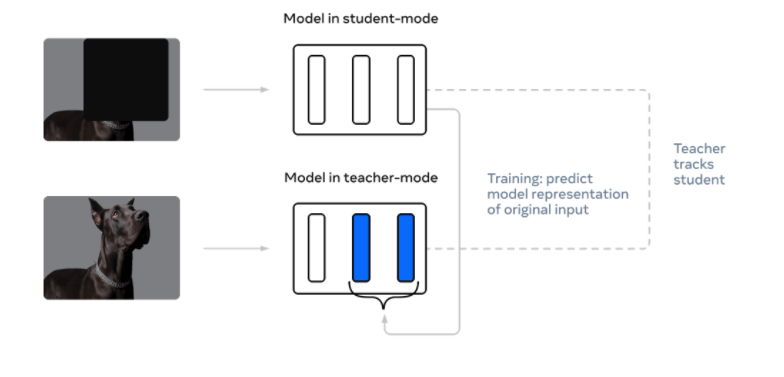

Overestimating self-supervised models: The term self-supervision refers to a training procedure where “fundamentals” of the input data are learned by leveraging data itself without labels. In general, self-supervised methods are great for pre-training (i.e., training a model in a self-supervised fashion with lots of unlabelled data before fine-tuning it with a smaller labeled dataset), but directly using self-supervised embeddings can result in suboptimal performance.

A common way of tackling all three of the above problems is to pre-train a model with self-supervision on a massive quantity of data and then fine-tune it on labeled data. This approach has been shown to work well for NLP, but not so much for computer vision (CV) just yet.
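To illustrate how sensitive training can be to a single hyperparameter, the sketch below runs plain gradient descent on the toy loss f(w) = w² with a few learning rates. It is a deliberately simplified stand-in for real training (the function name `train` is hypothetical): for this loss, each step multiplies w by (1 - 2·lr), so any learning rate above 1.0 makes training diverge.

```python
def train(learning_rate, steps=20, w0=5.0):
    """Minimize the toy loss f(w) = w**2 with gradient descent; return the final loss."""
    w = w0
    for _ in range(steps):
        grad = 2 * w               # derivative of w**2
        w -= learning_rate * grad  # gradient-descent update
    return w * w


# A tiny sweep over one hyperparameter (a minimal grid search).
for lr in (0.01, 0.1, 0.5, 0.9, 1.1):
    print(f"lr={lr}: final loss = {train(lr):.3g}")
```

With lr=0.01 the loss barely moves in 20 steps, lr=0.5 jumps straight to the minimum, and lr=1.1 ends with a far larger loss than it started with (the starting loss is 25).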

Using Embedding Models Has Its Pitfalls

These are just some common mistakes associated with training embedding models. As a direct result, many developers looking to use embeddings go straight to models pre-trained on academic datasets such as ImageNet (for image classification) and SQuAD (for question answering). However, despite the abundance of pre-trained models available today, there are several pitfalls to avoid in order to extract maximum embedding performance:

- Training and inference data mismatch: Using an off-the-shelf model trained by another organization has become a popular way to develop ML applications without spending thousands of GPU/TPU-hours. Understanding the limitations of a particular embedding model, and how they can impact application performance, is extremely important; without understanding the model’s training data and methodology, it’s easy to misinterpret results. For example, a model trained to embed music will likely work poorly when applied to speech, and vice versa.

- Improper layer selection: When using a fully supervised neural network as an embedding model, features are generally taken from the activations of the second-to-last layer (the penultimate layer). However, depending on the application, this can result in suboptimal performance. For example, when using a model trained for image classification to embed images of logos and/or brands, using earlier activations may improve performance. This is because earlier layers better retain low-level features (edges and corners), which are critical for classifying non-complex images.

- Nonidentical inference conditions: Training and inference conditions must match to extract maximum performance from an embedding model. In practice, this is often not the case. For example, a standard `resnet50` model from `torchvision` generates two completely different results when the input image is downsampled using bicubic interpolation versus nearest-neighbor interpolation (see below).

| | Bicubic interpolation | Nearest interpolation |
|---|---|---|
| Predicted class | coucal | robin, American robin, Turdus migratorius |
| Probability | 27.28% | 47.65% |
| Embedding vector | [0.1392, 0.3572, 0.1988, ..., 0.2888, 0.6611, 0.2909] | [0.3463, 0.2558, 0.5562, ..., 0.6487, 0.8155, 0.3422] |
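The effect in the table can be reproduced in miniature. The sketch below downsamples one hypothetical row of pixels with thin alternating stripes using nearest-neighbor and linear interpolation. Note that linear (bilinear in 2-D) interpolation is used here as a simpler stand-in for bicubic, and the function names are hypothetical; this is not torchvision's resizing code.

```python
def resize_nearest(signal, new_len):
    """Downsample a 1-D signal by picking the nearest source sample."""
    scale = len(signal) / new_len
    return [signal[min(int((i + 0.5) * scale), len(signal) - 1)] for i in range(new_len)]


def resize_linear(signal, new_len):
    """Downsample a 1-D signal by linearly interpolating between neighbors."""
    n = len(signal)
    scale = n / new_len
    out = []
    for i in range(new_len):
        # Map the output sample's center back to a (fractional) source position.
        x = min(max((i + 0.5) * scale - 0.5, 0.0), n - 1)
        lo = int(x)
        hi = min(lo + 1, n - 1)
        t = x - lo
        out.append(signal[lo] * (1 - t) + signal[hi] * t)
    return out


# One row of a hypothetical image: thin alternating dark/bright stripes.
row = [0, 10, 0, 10, 0, 10, 0, 10]
print(resize_nearest(row, 4))  # -> [10, 10, 10, 10]
print(resize_linear(row, 4))   # -> [5.0, 5.0, 5.0, 5.0]
```

Neither output is wrong on its own; the problem is feeding the model something different from what it saw during training. A common safeguard is to route both training and inference through one shared preprocessing function.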

Deploying an Embedding Model

Once you’ve jumped through all of the hurdles associated with training and validating a model, scaling and deploying becomes the next critical step. But, again, embedding model deployment is easier said than done. MLOps, a field adjacent to DevOps, exists specifically for this purpose.

- Selecting the proper hardware: Like most other ML models, embedding models can run on various types of hardware, ranging from standard everyday CPUs to programmable logic (FPGAs). Entire research papers have been written analyzing the tradeoffs between cost and efficiency, which highlights how difficult this choice is for most organizations.

- Model deployment platforms: There are numerous MLOps and distributed computing platforms available (including many open-source ones). Figuring out how these fit into your application can be a challenge in and of itself.

- Storage for embedding vectors: As your application scales, you’ll need to find a scalable and more permanent storage solution for your embedding vectors. This is where vector databases come in.
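As a rough illustration of moving embeddings out of process memory and into persistent storage, here is a sketch using Python's built-in `sqlite3` module, storing each vector as JSON text. It is only a stand-in to show the idea (the table and function names are hypothetical): a real vector database also builds indexes for fast similarity search, which this does not.

```python
import json
import os
import sqlite3
import tempfile
from contextlib import closing


def save_embeddings(path, embeddings):
    """Write {item_id: vector} to a SQLite file, one JSON-encoded vector per row."""
    with closing(sqlite3.connect(path)) as conn:
        with conn:  # commits the transaction on success
            conn.execute("CREATE TABLE IF NOT EXISTS embeddings (id TEXT PRIMARY KEY, vec TEXT)")
            conn.executemany(
                "INSERT OR REPLACE INTO embeddings VALUES (?, ?)",
                [(item_id, json.dumps(vec)) for item_id, vec in embeddings.items()],
            )


def load_embeddings(path):
    """Read every stored vector back into a {item_id: vector} dict."""
    with closing(sqlite3.connect(path)) as conn:
        rows = conn.execute("SELECT id, vec FROM embeddings").fetchall()
    return {item_id: json.loads(vec) for item_id, vec in rows}


path = os.path.join(tempfile.mkdtemp(), "embeddings.db")
save_embeddings(path, {"cat.jpg": [0.9, 0.1, 0.0], "car.jpg": [0.0, 0.2, 0.95]})
restored = load_embeddings(path)  # survives process restarts, unlike an in-memory list
print(restored["cat.jpg"])        # -> [0.9, 0.1, 0.0]
```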

I’ll Learn How to Do It Myself!

I applaud your enthusiasm! A couple of crucial things to remember:

ML is very different from software engineering: Traditional machine learning has its roots in statistics, a branch of mathematics that is very different from software engineering. Important machine learning concepts such as regularization and feature selection are firmly grounded in mathematics. While modern libraries for training and inference (PyTorch and TensorFlow being two well-known ones) have made it significantly easier to train and produce embedding models, understanding how different hyperparameters and training methodologies affect embedding model performance is still critical.

Learning to use PyTorch or TensorFlow can be unintuitive: These libraries have significantly sped up the training, validation, and deployment of modern ML models. Building a new model or implementing an existing one may be intuitive to seasoned ML developers or programmers familiar with HDL, but for most software developers, the underlying concepts can be challenging to grasp. There’s also the question of which framework to choose, as the execution engines used by these two frameworks have quite a few differences (I recommend PyTorch).

Finding an MLOps platform that fits your codebase will take time: Here’s a curated list of MLOps platforms and tools. There are hundreds of different options to choose from, and evaluating the pros and cons of each is a years-long research project in and of itself.

With all this being said, I’d like to amend my statement above to say: I applaud your enthusiasm, but I don’t recommend learning ML and MLOps. It’s a relatively long and tedious process that can take time away from what’s most important: developing a solid application that your users will love.

Supercharging Data Science With Towhee

Towhee is an open-source project that helps software engineers develop and deploy applications that utilize embeddings in just a few lines of code. Towhee affords software developers the freedom and flexibility to build their ML applications without diving deep into embedding models and machine learning.

A Quick Example

A Pipeline is a single embedding generation task that is composed of several sub-tasks (known as Operators in Towhee). By abstracting an entire task within a Pipeline, Towhee helps users avoid many of the embedding-generation pitfalls mentioned above.

```python
>>> from towhee import pipeline
>>> embedding_pipeline = pipeline('image-embedding-resnet50')
>>> embedding = embedding_pipeline('https://docs.towhee.io/img/logo.png')
```

In the example above, image decoding, image transformation, feature extraction, and embedding normalization are four sub-steps compiled into a single pipeline, so there’s no need to worry about the model and inference details yourself. In addition, Towhee provides pre-built embedding pipelines for various tasks, including audio/music embeddings, image embeddings, and face embeddings.
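Conceptually, a pipeline is function composition: each operator's output becomes the next operator's input. The sketch below shows that idea in plain Python with four toy stand-ins named after the sub-steps listed above. It is not how Towhee implements pipelines internally, and every operator body here is a hypothetical placeholder.

```python
import math
from functools import reduce


def make_pipeline(*operators):
    """Chain operators so that each one's output feeds the next one's input."""
    return lambda x: reduce(lambda acc, op: op(acc), operators, x)


# Hypothetical stand-ins: a real pipeline would decode an image file,
# resize/normalize its pixels, and run a neural network.
def decode(raw):        # bytes -> list of "pixel" values
    return list(raw)


def transform(pixels):  # scale pixel values into [0, 1]
    return [p / 255 for p in pixels]


def extract(pixels):    # toy "feature extractor": mean and max intensity
    return [sum(pixels) / len(pixels), max(pixels)]


def normalize(vec):     # L2-normalize so the embedding has unit length
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


embed = make_pipeline(decode, transform, extract, normalize)
vec = embed(bytes([0, 128, 255]))
print(vec)
```

Because the caller only sees `embed`, swapping in a different feature extractor does not change any calling code, which is the main benefit of packaging the steps as one unit.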

Method-Chaining API

Towhee also provides a Pythonic unstructured data processing framework called DataCollection. In short, DataCollection is a method-chaining API that allows developers to rapidly prototype embedding and other ML models on real-world data. Later in this post, we use DataCollection to compute embeddings with the resnet50 embedding model.

For this example, we’ll build an “application” that lets us filter prime numbers with a one’s digit of 3:

```python
>>> from towhee.functional import DataCollection
>>> def is_prime(x):
...     if x <= 1:
...         return False
...     for i in range(2, int(x/2)+1):
...         if not x % i:
...             return False
...     return True
...
>>> dc = (
...     DataCollection.range(100)
...     .filter(is_prime)                # stage 1, find primes
...     .filter(lambda x: x%10 == 3)     # stage 2, find primes that end with '3'
...     .map(str)                        # stage 3, convert to strings
... )
>>> dc.to_list()
```

DataCollection can be used to develop entire applications in just a single line of code. For example, the reverse image search application later in this post is built entirely with DataCollection; keep reading to learn more.
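The method-chaining style itself takes only a few lines of plain Python. The sketch below uses a hypothetical `Chain` class (not Towhee's DataCollection) to reproduce the prime-number example: every stage returns a new wrapper, which is what lets the calls be strung together.

```python
class Chain:
    """A toy method-chaining wrapper (hypothetical; not Towhee's DataCollection)."""

    def __init__(self, items):
        self._items = items  # any iterable; stages are applied lazily

    @classmethod
    def range(cls, n):
        return cls(range(n))  # the built-in range, since methods don't see class scope

    def filter(self, pred):
        # Each stage returns a new Chain, so calls can be strung together.
        return Chain(x for x in self._items if pred(x))

    def map(self, fn):
        return Chain(fn(x) for x in self._items)

    def to_list(self):
        return list(self._items)


def is_prime(x):
    if x <= 1:
        return False
    return all(x % i for i in range(2, int(x ** 0.5) + 1))


result = (
    Chain.range(100)
    .filter(is_prime)               # stage 1: keep primes
    .filter(lambda x: x % 10 == 3)  # stage 2: keep primes ending in 3
    .map(str)                       # stage 3: convert to strings
    .to_list()
)
print(result)  # -> ['3', '13', '23', '43', '53', '73', '83']
```

One design consequence of wrapping generators: each stage is evaluated lazily and can be consumed only once, which keeps memory use low on large inputs.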

Towhee Trainer

As mentioned above, fully supervised or self-supervised pre-trained models are often good at generic tasks. However, you’ll sometimes want to create an embedding model that’s good at something particular, e.g., differentiating between cats and dogs. Towhee provides a training/fine-tuning framework specifically for this purpose:

```python
>>> from towhee.trainer.training_config import TrainingConfig
>>> training_config = TrainingConfig(
...     batch_size=2,
...     epoch_num=2,
...     output_dir="quick_start_output"
... )
```

You’ll also need to specify a dataset to train on:

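```python
>>> # `dataset` and `my_data_transformer` are placeholders (not defined in this post)
>>> # for your own dataset loader and preprocessing transform.
>>> train_data = dataset('train', size=20, transform=my_data_transformer)
>>> eval_data = dataset('eval', size=10, transform=my_data_transformer)
```

With everything in place, training a new embedding model from an existing operator is a piece of cake: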

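```python
>>> # `op` is an existing Towhee operator instance; its creation is not shown here.
>>> op.train(
...     training_config,
...     train_dataset=train_data,
...     eval_dataset=eval_data
... )
```

Once training is complete, you can use the same operator in your application with no changes to the rest of the code.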
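To make fine-tuning for a specific task concrete, here is a self-contained sketch of one common variant: keep the embedding model frozen and train only a small classification head on its outputs, here for the cats-versus-dogs example. Everything in it is hypothetical (`ToyConfig`, `train_head`, and the 2-D "embeddings"); it only mirrors the `batch_size` and `epoch_num` settings from the `TrainingConfig` above and is not meant to reflect how `op.train` works internally.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class ToyConfig:
    """Hypothetical config loosely mirroring TrainingConfig's fields."""
    batch_size: int = 2
    epoch_num: int = 50
    learning_rate: float = 0.5


def train_head(features, labels, cfg, seed=0):
    """Fit a logistic-regression head on frozen embeddings with minibatch SGD."""
    rng = random.Random(seed)
    w, b = [0.0] * len(features[0]), 0.0
    idx = list(range(len(features)))
    for _ in range(cfg.epoch_num):
        rng.shuffle(idx)
        for start in range(0, len(idx), cfg.batch_size):
            batch = idx[start:start + cfg.batch_size]
            gw, gb = [0.0] * len(w), 0.0
            for i in batch:
                z = sum(wj * xj for wj, xj in zip(w, features[i])) + b
                err = 1 / (1 + math.exp(-z)) - labels[i]  # d(log loss)/dz
                gw = [g + err * xj for g, xj in zip(gw, features[i])]
                gb += err
            # Average the gradient over the batch and take one SGD step.
            w = [wj - cfg.learning_rate * g / len(batch) for wj, g in zip(w, gw)]
            b -= cfg.learning_rate * gb / len(batch)
    return w, b


def predict(w, b, x):
    return int(sum(wj * xj for wj, xj in zip(w, x)) + b > 0)


# Hypothetical 2-D "embeddings" from a frozen model: label 1 = cat, 0 = dog.
feats = [[1.0, 0.0], [0.9, 0.1], [0.8, 0.0], [0.0, 1.0], [0.1, 0.9], [0.0, 0.8]]
labels = [1, 1, 1, 0, 0, 0]
w, b = train_head(feats, labels, ToyConfig())
print([predict(w, b, x) for x in feats])
```

Training only the head is cheap and needs little data, which is why it is a popular first step before fine-tuning the whole network.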

An Example Application: Reverse Image Search

To demonstrate how Towhee can be used, let’s quickly build a small reverse image search application. Reverse image search is a well-known task, so let’s dive right in:

```python
>>> import towhee
>>> from towhee.functional import DataCollection
```

We’ll be using a small dataset along with 10 query images. Using DataCollection, we can then load both the dataset and the query images:

```python
>>> dataset = DataCollection.from_glob('./image_dataset/dataset/*.JPEG').unstream()
>>> query = DataCollection.from_glob('./image_dataset/query/*.JPEG').unstream()
```

The next step is to compute embeddings over the entire dataset collection:

```python
>>> dc_data = (
...     dataset.image_decode.cv2()
...     .image_embedding.timm(model_name="resnet50")
... )
```

This step creates a local collection of embedding vectors, one for each image in the dataset. With this, we can now query for nearest neighbors:

```python
>>> result = (
...     query.image_decode.cv2()                                # decode all images in the query set
...     .image_embedding.timm(model_name="resnet50")            # compute embeddings using the `resnet50` embedding model
...     .towhee.search_vectors(data=dc_data, cal="L2", topk=5)  # search the dataset
...     .map(lambda x: x.ids)                                   # acquire IDs (file paths) of similar results
...     .select_from(dataset)                                   # get the result images
... )
```

We also provide a way to deploy your application using Ray: specify `query.set_engine('ray')`, and you’re good to go!
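For intuition about what the search step does, here is a brute-force sketch of an L2 top-k search in plain Python: compare each query embedding with every dataset embedding and keep the ids of the k nearest, which the chain above then maps back to images. The function name `l2_topk` and the toy vectors are hypothetical, and a linear scan like this is not necessarily how Towhee's `search_vectors` operator or a vector database works.

```python
import heapq
import math


def l2_topk(query_vecs, data_vecs, topk=5):
    """For each query vector, return the ids of the `topk` nearest data vectors (L2), nearest first."""
    results = []
    for q in query_vecs:
        closest = heapq.nsmallest(topk, data_vecs.items(), key=lambda kv: math.dist(q, kv[1]))
        results.append([item_id for item_id, _ in closest])
    return results


# Hypothetical 2-D embeddings keyed by file path.
data = {
    "a.JPEG": [0.0, 0.0],
    "b.JPEG": [1.0, 0.0],
    "c.JPEG": [0.0, 2.0],
    "d.JPEG": [3.0, 3.0],
}
print(l2_topk([[0.9, 0.1]], data, topk=2))  # -> [['b.JPEG', 'a.JPEG']]
```

Each query costs one distance computation per stored vector, which is fine for a small local dataset but is exactly the cost that the indexes in vector databases are designed to avoid at scale.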

Closing words

We do not consider Towhee a full-fledged, end-to-end model serving or MLOps platform, nor is that what we set out to achieve. Instead, we aim to supercharge the development of applications that require embeddings and other ML tasks. With Towhee, we hope to enable rapid prototyping of embedding models and pipelines on your local machine (Pipeline + Trainer), allow for the development of an ML-centric application in just a couple of lines of code (DataCollection), and allow for easy and rapid deployment to your cluster (via Ray).

That’s all, folks – I hope this post was informative. If you have any questions, comments, or concerns, feel free to comment below. Stay tuned for more!
