Prompt Engineering Tutorial for AI/ML Engineers

The generative AI revolution has made significant progress in the past year, most visibly through the release of Large Language Models (LLMs). Generative AI is here to stay and has a promising future in software engineering. Models already produce impressive outputs, but we can also steer them toward the outputs we want. Getting language models to produce the results you expect is an art, and this is where prompt engineering comes into play. Prompts are the main way we communicate with language models. In this article, we take a deep dive into everything you need to know about prompt engineering.

What Is Prompt Engineering?

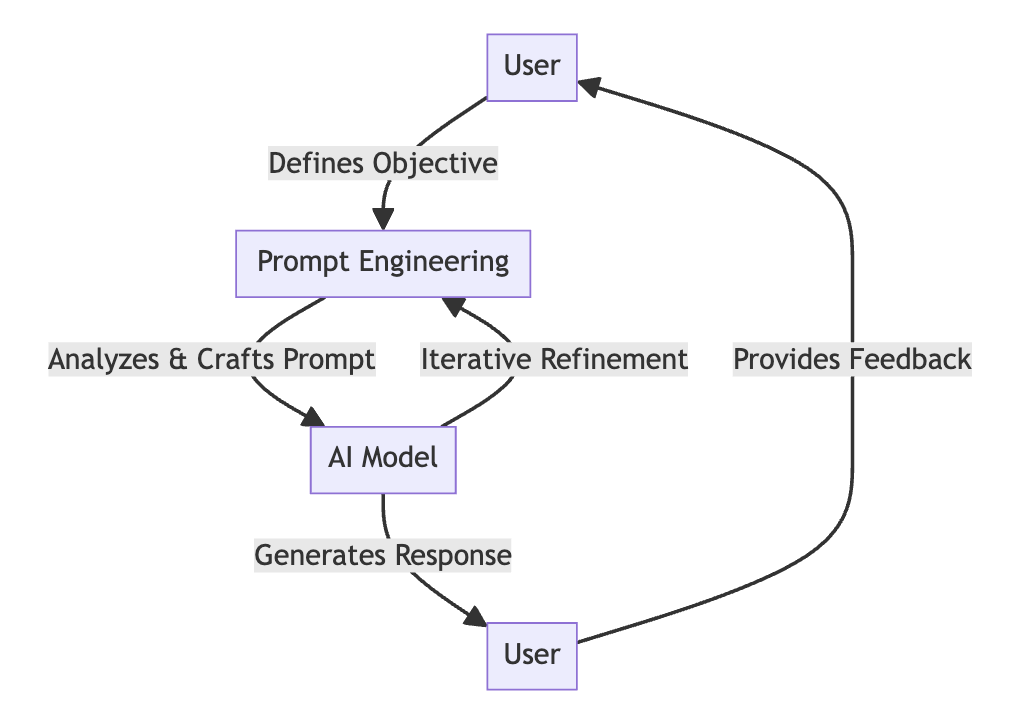

Prompt engineering is the first step toward talking with LLMs. Essentially, it’s the process of crafting meaningful instructions for generative AI models so they produce better results and responses. This is done by choosing words carefully and adding relevant context. A useful analogy is training a puppy with positive reinforcement, using rewards and treats to encourage the behavior you want. Large language models can produce output that is biased, hallucinated, or simply false; good prompt engineering can reduce, though not eliminate, these problems.

Prompt Engineering Techniques

Prompt engineering involves understanding the capabilities of LLMs and crafting prompts that communicate your goals effectively. By combining prompt techniques, we can tap into a wide range of possibilities, from generating news articles that read as if written by hand to writing poems in your desired tone and style. Let’s look at how the main prompt techniques work.

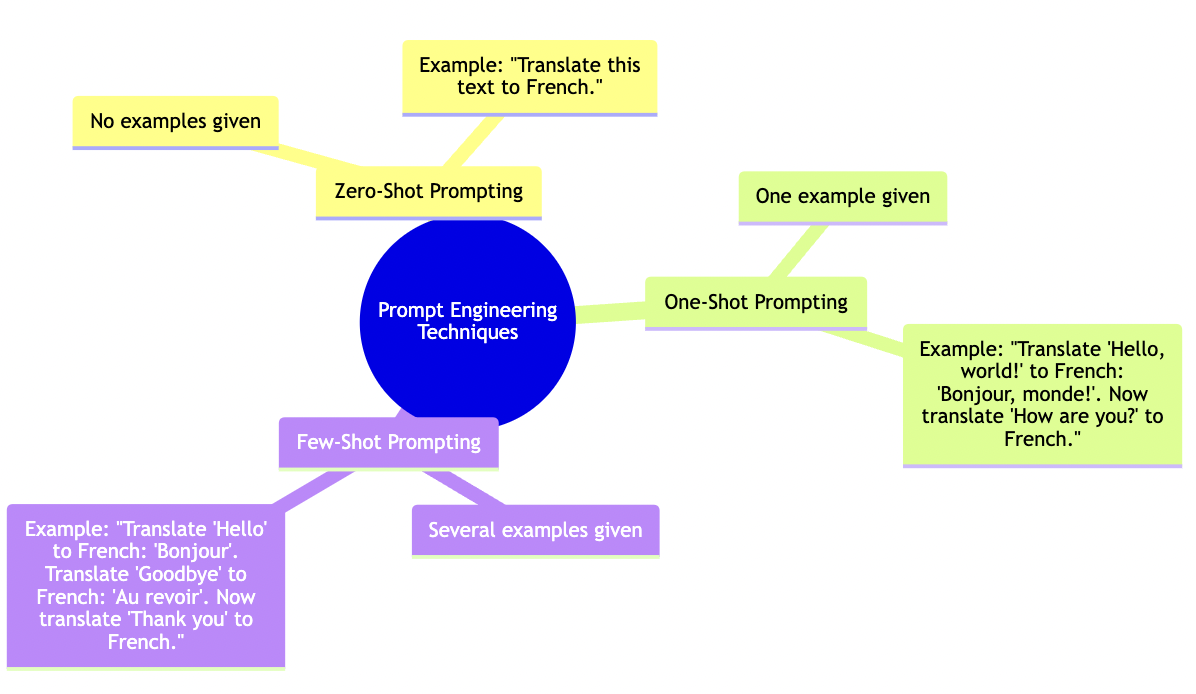

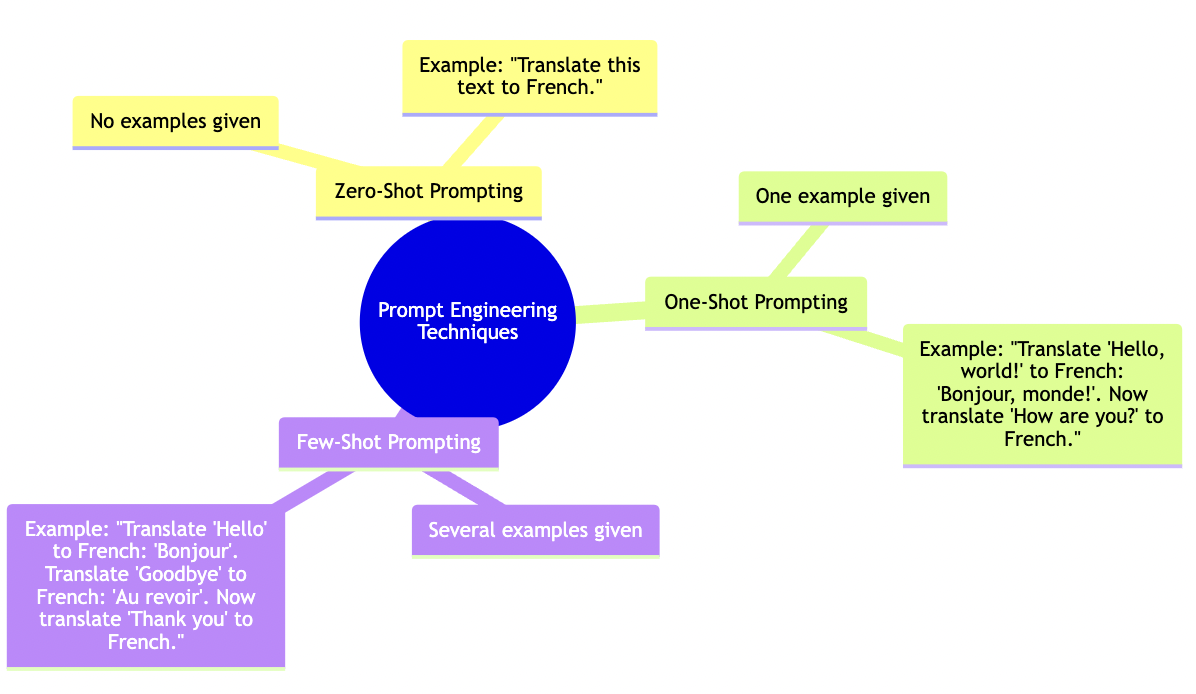

Zero-Shot Prompting

You provide a prompt directly to the LLM without any additional examples or information. This is best suited for general tasks where you trust the LLM’s ability to generate creative output based on its understanding of language and concepts.
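A zero-shot prompt contains only the instruction. The sketch below uses nothing beyond plain Python: `zero_shot_prompt` is a hypothetical helper, and the step of sending the string to a model is omitted because it depends on the provider you use.

```python
def zero_shot_prompt(task: str) -> str:
    """Build a zero-shot prompt: just the instruction, with no examples."""
    return f"{task.strip()}\n\nAnswer:"


prompt = zero_shot_prompt(
    "Classify the sentiment of this review as positive or negative: "
    "'The battery died after two hours.'"
)
print(prompt)
```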

One-Shot Prompting

You provide one example of the desired output along with the prompt. This is best suited for tasks where you want to guide the LLM toward a specific style, tone, or topic.
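A one-shot prompt adds a single worked example before the real input. This is a minimal sketch with a hypothetical `one_shot_prompt` helper and an invented tagline example; the `Input:`/`Output:` labels are one common convention, not a requirement.

```python
def one_shot_prompt(instruction: str, example_input: str, example_output: str, query: str) -> str:
    """Build a one-shot prompt: instruction, one worked example, then the new input."""
    return (
        f"{instruction}\n\n"
        f"Input: {example_input}\n"
        f"Output: {example_output}\n\n"
        f"Input: {query}\n"
        "Output:"  # left open so the model completes it in the example's style
    )


prompt = one_shot_prompt(
    instruction="Rewrite each product name as a short, upbeat tagline.",
    example_input="Trail running shoes",
    example_output="Run wild, land light.",
    query="Insulated water bottle",
)
print(prompt)
```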

Few-Shot Prompting

You provide a few (usually two to four) examples of the desired output along with the prompt. This is best suited for tasks where consistency and accuracy matter, like generating text in a specific format or domain.
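The few-shot version generalizes this to a list of examples. In the sketch below (a hypothetical helper with invented date examples), every example uses the same `Input:`/`Output:` layout, which is what nudges the model toward a consistent format.

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Build a few-shot prompt from (input, output) pairs, then append the new query."""
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")  # blank line between examples
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


examples = [
    ("2024-03-05", "March 5, 2024"),
    ("1999-12-31", "December 31, 1999"),
    ("2010-07-14", "July 14, 2010"),
]
prompt = few_shot_prompt("Convert ISO dates to long-form US dates.", examples, "2023-11-02")
print(prompt)
```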

Chain-of-Thought Prompting

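This technique asks the model to work through a complex task as a series of explicit intermediate steps before giving its final answer, which tends to improve results on reasoning and logic problems; think of solving a math problem step by step instead of jumping straight to the result.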
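One common way to elicit chain-of-thought reasoning is to include a worked example whose answer spells out its intermediate steps, then end the new question with a cue such as “Let’s think step by step.” The sketch below assumes that approach; the helper name and both arithmetic problems are illustrative.

```python
def chain_of_thought_prompt(question: str) -> str:
    """Few-shot chain-of-thought prompt: one worked example shows intermediate
    steps, and the new question ends with a cue to reason step by step."""
    worked_example = (
        "Q: A cafe sells muffins for $3 each. Sam buys 4 muffins and pays with a $20 bill. "
        "How much change does Sam get?\n"
        "A: Step 1: 4 muffins cost 4 x $3 = $12.\n"
        "Step 2: Change is $20 - $12 = $8.\n"
        "Final answer: $8"
    )
    return f"{worked_example}\n\nQ: {question}\nA: Let's think step by step."


prompt = chain_of_thought_prompt(
    "A train travels 60 km per hour for 2.5 hours. How far does it go?"
)
print(prompt)
```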

Contextual Augmentation

This involves providing relevant background information to enhance accuracy and coherence; imagine enriching a historical fiction prompt with detailed research on the era.
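Contextual augmentation can be as simple as pasting relevant background into the prompt and telling the model to rely on it. In the sketch below, `augment_with_context` is a hypothetical helper and the store-policy snippets are invented; in a real system they might come from a document search or database lookup.

```python
from typing import List


def augment_with_context(question: str, context_snippets: List[str]) -> str:
    """Prepend background material so the model answers from supplied facts,
    and say what to do when the background is insufficient."""
    context_block = "\n".join(f"- {snippet}" for snippet in context_snippets)
    return (
        "Use only the background information below to answer the question. "
        "If the background does not contain the answer, say so.\n\n"
        f"Background:\n{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )


snippets = [  # invented policy text, for illustration only
    "Orders over $50 ship free.",
    "Standard shipping takes 3 to 5 business days.",
]
prompt = augment_with_context("Do I pay for shipping on a $70 order?", snippets)
print(prompt)
```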

Meta-Prompts and Prompt Combinations

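Meta-prompts shape the model’s overall behavior, for example through a system message that sets its role, rules, and tone, without retraining the model. Prompt combinations blend several techniques in a single prompt, such as few-shot examples plus a chain-of-thought cue. Think of meta-prompts as overarching principles, while combinations apply different techniques simultaneously.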
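Many chat-style APIs accept a list of messages with `role` and `content` fields, where a `system` message acts as a meta-prompt. Assuming that message format, the sketch below combines a meta-prompt with a few-shot example and a chain-of-thought cue; the helper, the system text, and the HTTP examples are illustrative only.

```python
from typing import Dict, List, Tuple

# Illustrative meta-prompt: overarching rules that apply to every turn.
META_PROMPT = (
    "You are a concise technical assistant. "
    "Answer in at most three sentences, and say 'I don't know' rather than guessing."
)


def combined_messages(examples: List[Tuple[str, str]], question: str) -> List[Dict[str, str]]:
    """Combine a system meta-prompt, few-shot user/assistant pairs,
    and a chain-of-thought cue on the final user message."""
    messages = [{"role": "system", "content": META_PROMPT}]
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append(
        {"role": "user", "content": f"{question}\nThink step by step, then give the answer."}
    )
    return messages


msgs = combined_messages(
    [("What does HTTP status 404 mean?", "The server could not find the requested resource.")],
    "What does HTTP status 503 mean?",
)
for m in msgs:
    print(f"{m['role']}: {m['content']}")
```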

Human-in-the-Loop

Lastly, this process integrates human feedback and iteratively refines prompts for better results; picture a collaborative dance between humans and the LLM, with each iteration improving the outcome.
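A human-in-the-loop workflow can be modeled as a loop: generate, collect a reviewer’s comment, fold it into the prompt, and repeat until the reviewer approves. The sketch below is hypothetical throughout; `fake_model` and `fake_reviewer` stand in for a real LLM call and a real person so the loop can run offline.

```python
from typing import Callable, Optional, Tuple


def refine_with_feedback(
    prompt: str,
    generate: Callable[[str], str],
    review: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Tuple[str, str]:
    """Generate, ask the reviewer for feedback, append it to the prompt, and
    regenerate. Stops when the reviewer returns None (approval) or after
    max_rounds revisions."""
    output = generate(prompt)
    for _ in range(max_rounds):
        feedback = review(output)
        if feedback is None:  # reviewer is satisfied
            break
        prompt = f"{prompt}\nRevision request: {feedback}"
        output = generate(prompt)
    return prompt, output


def fake_model(p: str) -> str:
    # Stand-in for an LLM call: the draft number tracks how many revisions were requested.
    return f"draft v{p.count('Revision request') + 1}"


def fake_reviewer(output: str) -> Optional[str]:
    # Stand-in for a human reviewer: approves once the third draft arrives.
    return None if output == "draft v3" else "Make it shorter."


final_prompt, final_output = refine_with_feedback(
    "Summarize the release notes.", fake_model, fake_reviewer
)
print(final_output)  # draft v3
```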

These techniques are just a glimpse into the vast toolbox of prompt engineering. Remember, experimentation is key — tailor your prompts to your specific goals and embrace the iterative process.

Prompt Engineering Best Practices

Best practices in prompt engineering involve understanding the capabilities and limitations of the model, crafting clear and concise prompts, and iteratively testing and refining prompts based on the model’s responses. Whether you’re an AI developer, researcher, or enthusiast, these practices can make your interactions with language models more accurate and efficient.

Clarity and Specificity

Be clear about your desired outcome with specific instructions, desired format, and output length. Think of it as providing detailed directions to a friend, not just pointing in a general direction.
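The difference between a vague and a specific prompt is easiest to see side by side. The hypothetical `specific_prompt` helper below makes the audience, format, and length explicit; the topic and limits are arbitrary choices for illustration.

```python
def specific_prompt(topic: str, audience: str, fmt: str, max_words: int) -> str:
    """Spell out outcome, audience, format, and length instead of leaving them implicit."""
    return (
        f"Explain {topic} for {audience}.\n"
        f"Format: {fmt}.\n"
        f"Length: no more than {max_words} words.\n"
        "Avoid jargon; define any technical term you must use."
    )


vague = "Tell me about vector databases."
specific = specific_prompt(
    topic="what a vector database is and when to use one",
    audience="backend engineers new to machine learning",
    fmt="three bullet points followed by a one-sentence summary",
    max_words=120,
)
print(specific)
```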

Contextual Cues

Give the model relevant context to understand your request. Provide background information, relevant examples, or desired style and tone. Think of it as setting the scene for your desired output.

Example Power

Show the model what you want by providing examples of the desired output. Examples narrow down the possibilities and guide the model toward your vision. Think of it as showing your friend pictures of the destination instead of just giving them the address.

Word Choice Matters

Choose clear, direct, and unambiguous language. Avoid slang, metaphors, or overly complex vocabulary. The model may read your words more literally than a human colleague would, so speak plainly to make sure you are understood.

Iteration and Experimentation

Don’t expect perfect results in one try. Be prepared to revise your prompts, change context cues, and try different examples. Think of it as fine-tuning the recipe until you get the perfect dish.

Model Awareness

Understand the capabilities and limitations of the specific model you’re using. For example, some models are better at factual tasks, while others excel at creative writing — so choose the right tool for the job.

Safety and Bias

Be mindful of potential biases in both your prompts and the model’s output. Avoid discriminatory language or stereotypes, and use prompts that promote inclusivity and ethical considerations.

I hope these best practices help you craft effective prompts and unlock the full potential of large language models!

Prompt Engineering Tutorial

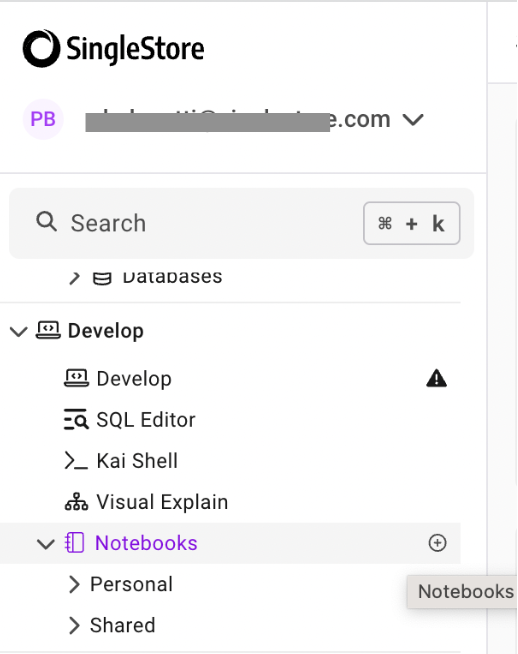

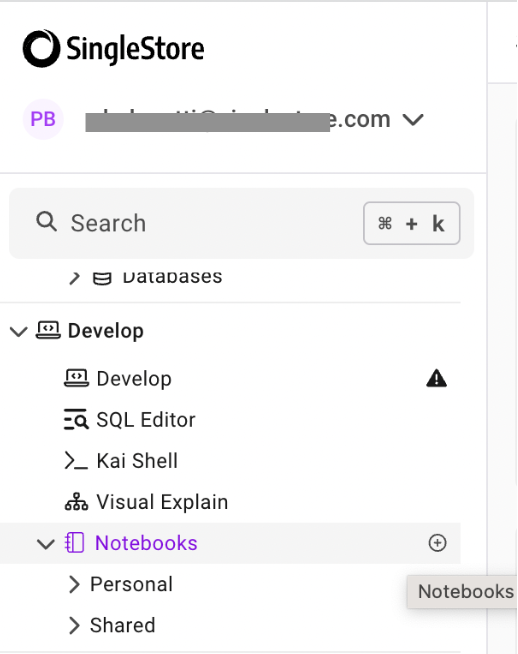

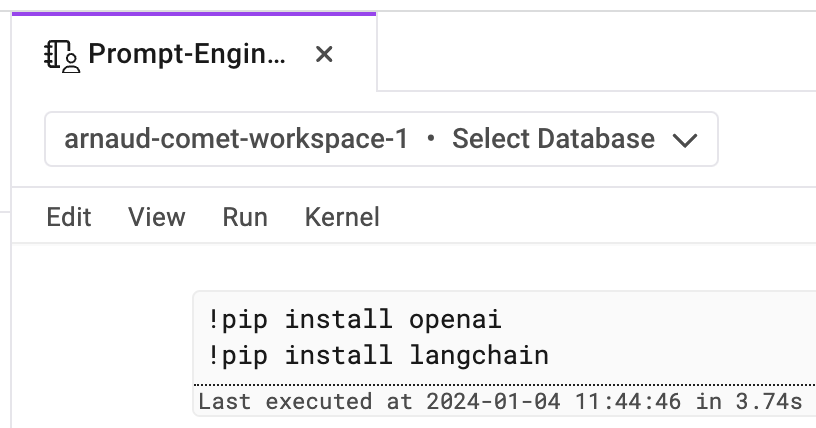

You need SingleStore Notebooks to carry out this tutorial.

The first time you sign up, you will receive $600 in free computing resources.

Now, click on the Notebook icon to start.

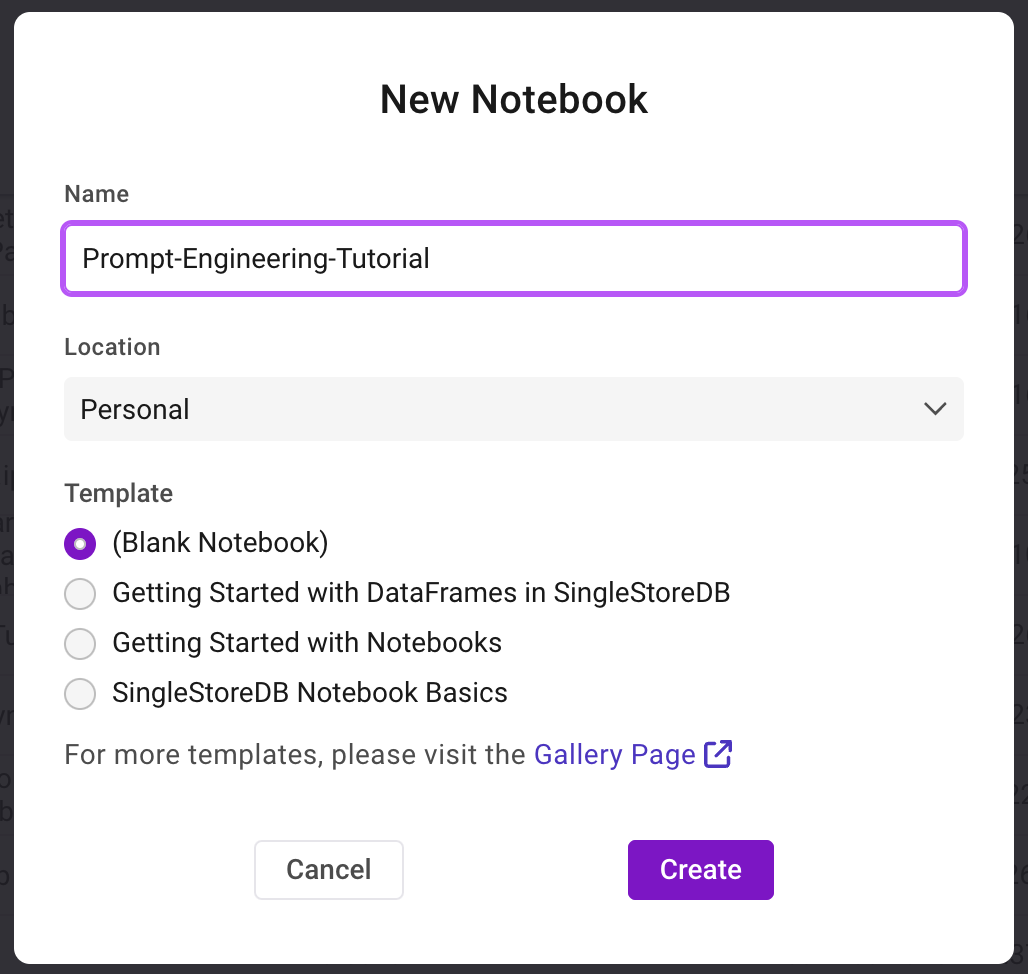

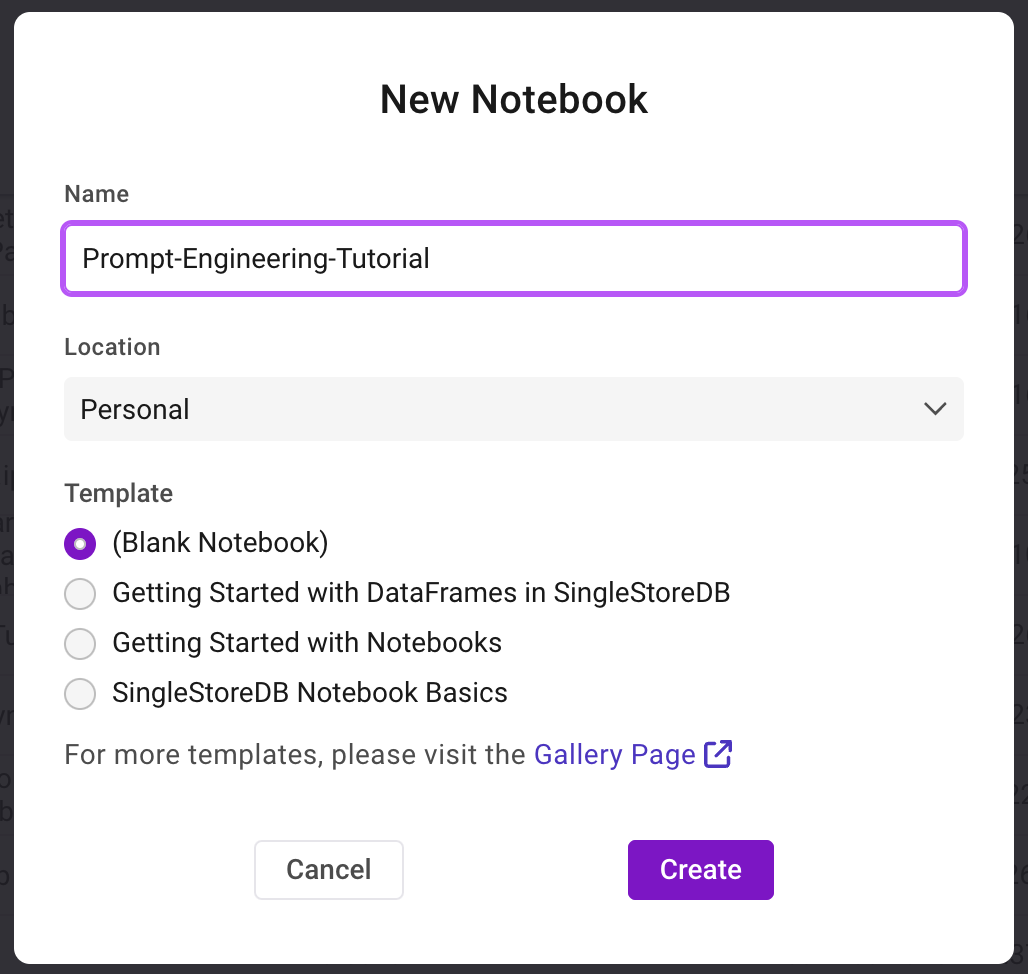

Create a new Notebook and name it ‘Prompt-Engineering-Tutorial’.

Let’s see how prompt engineering works in practice by running code snippets inside this Notebook.

We will use the LangChain framework to create prompt templates and use them in our example tutorial. New to LangChain? Check out this beginner’s guide for everything you need to know.

LangChain provides tooling to create and work with prompt templates.

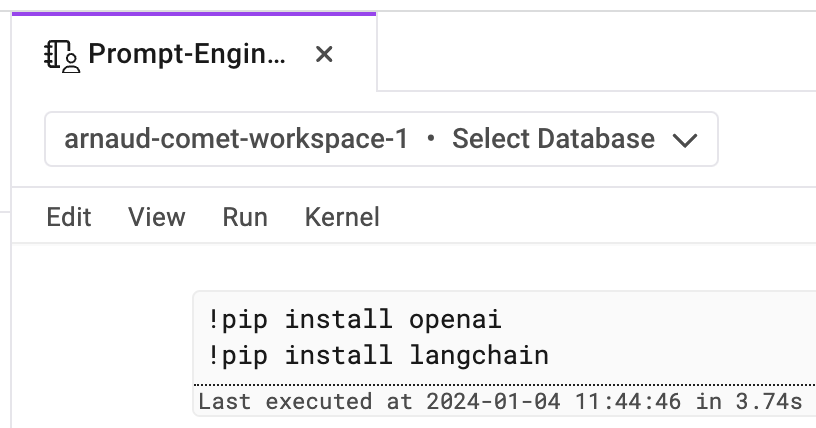

First, install the necessary dependencies: we need the OpenAI and LangChain libraries.

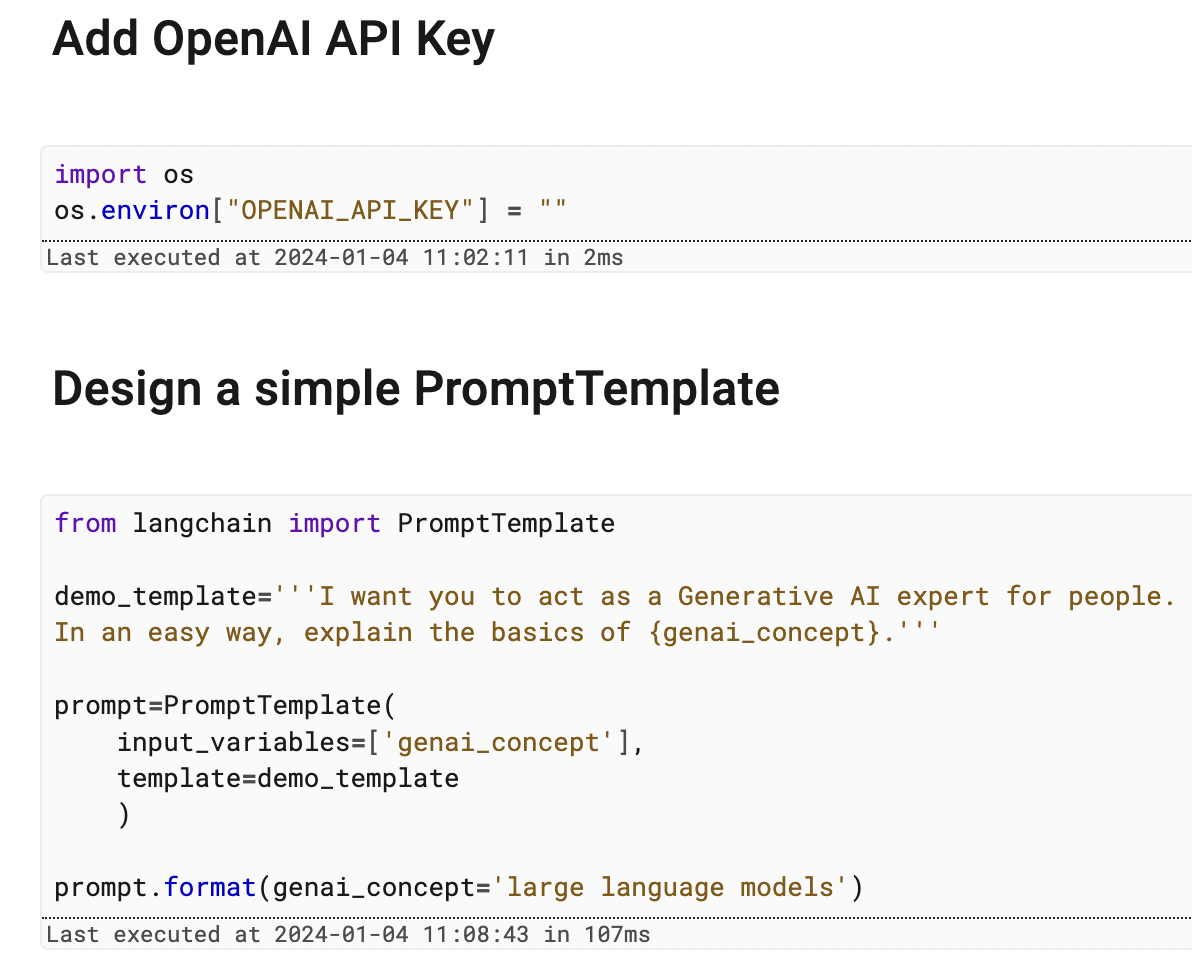

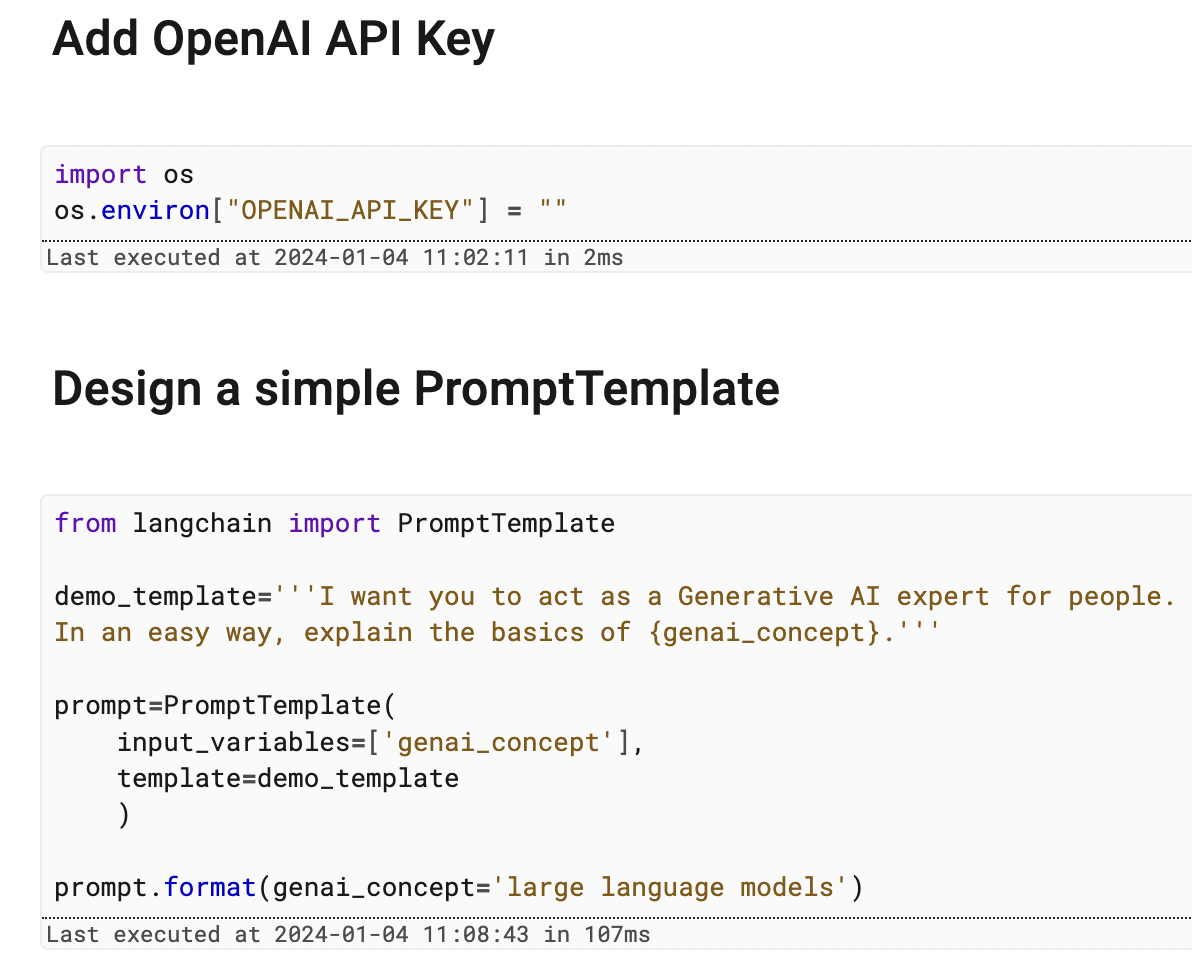

Next, provide the OpenAI API Key.
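One way to handle the key in a notebook, assuming you use environment variables: the OpenAI Python client and LangChain’s OpenAI integrations read `OPENAI_API_KEY` from the environment by default, so the hypothetical helper below only prompts for the key when that variable is not already set.

```python
import os
from getpass import getpass


def ensure_openai_key() -> str:
    """Return the OpenAI API key, asking for it only if OPENAI_API_KEY is unset."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        # getpass hides what you type, so the key is not echoed into the notebook.
        key = getpass("OpenAI API key: ")
        os.environ["OPENAI_API_KEY"] = key
    return key


# In the notebook, call this once before creating any model objects:
# ensure_openai_key()
```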

Create a prompt template as specified in the LangChain documentation.

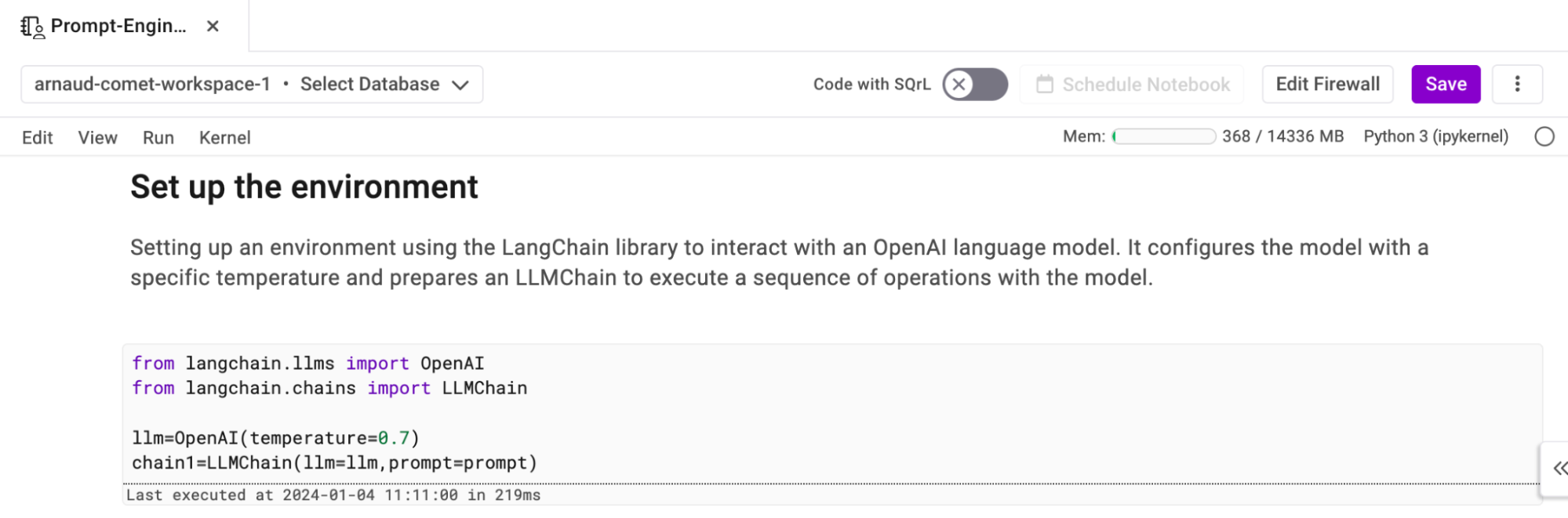

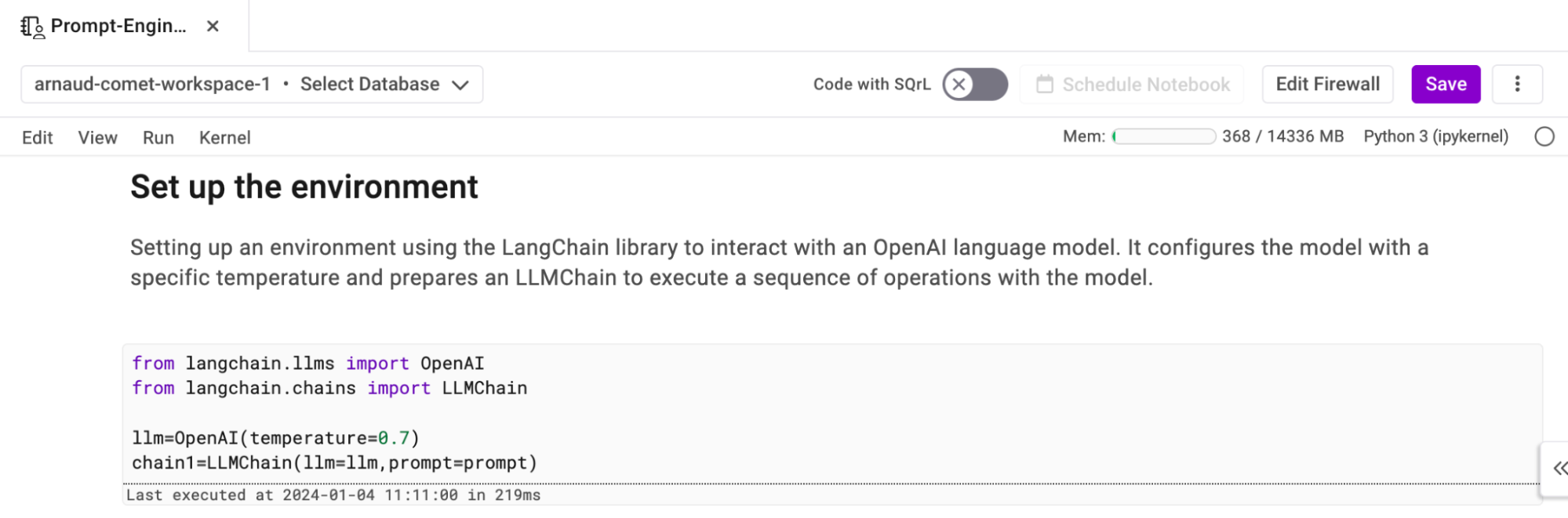
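In LangChain, a template like this is usually created with `PromptTemplate.from_template(...)` and filled with `.format(...)`. To show what that object does without installing anything, the sketch below reimplements the core idea with Python’s `string.Formatter`. `SimplePromptTemplate` is a hypothetical stand-in, not LangChain’s class, and the template text is invented.

```python
import string
from typing import List


class SimplePromptTemplate:
    """Dependency-free stand-in for a prompt template: a string with {named}
    placeholders plus the list of variables it expects."""

    def __init__(self, template: str):
        self.template = template
        # Formatter().parse yields (literal_text, field_name, format_spec, conversion);
        # keep only the named fields.
        self.input_variables: List[str] = [
            field for _, field, _, _ in string.Formatter().parse(template) if field
        ]

    def format(self, **kwargs: str) -> str:
        missing = [v for v in self.input_variables if v not in kwargs]
        if missing:
            raise KeyError(f"Missing template variables: {missing}")
        return self.template.format(**kwargs)


template = SimplePromptTemplate(
    "You are a helpful teacher. Explain {topic} to a beginner in {num_sentences} sentences."
)
print(template.input_variables)  # ['topic', 'num_sentences']
print(template.format(topic="large language models", num_sentences="three"))
```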

Set up the environment and create the LLMChain.

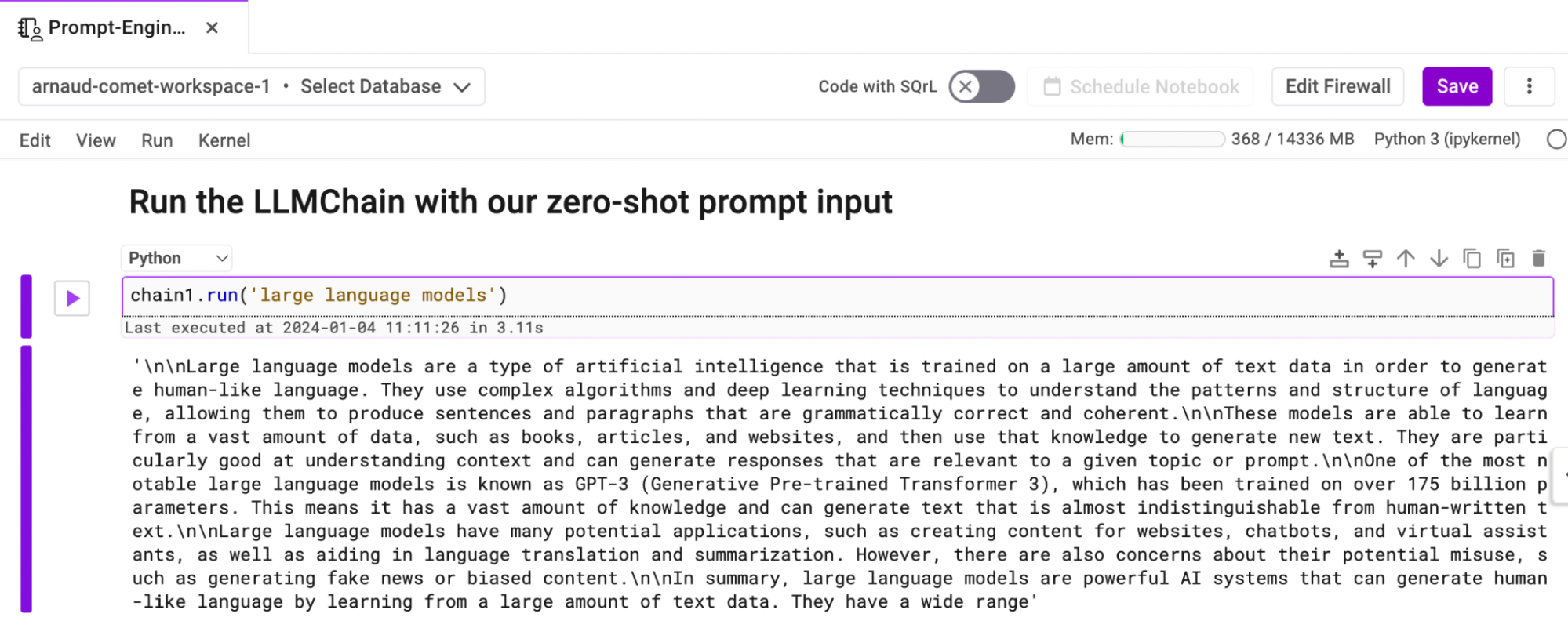

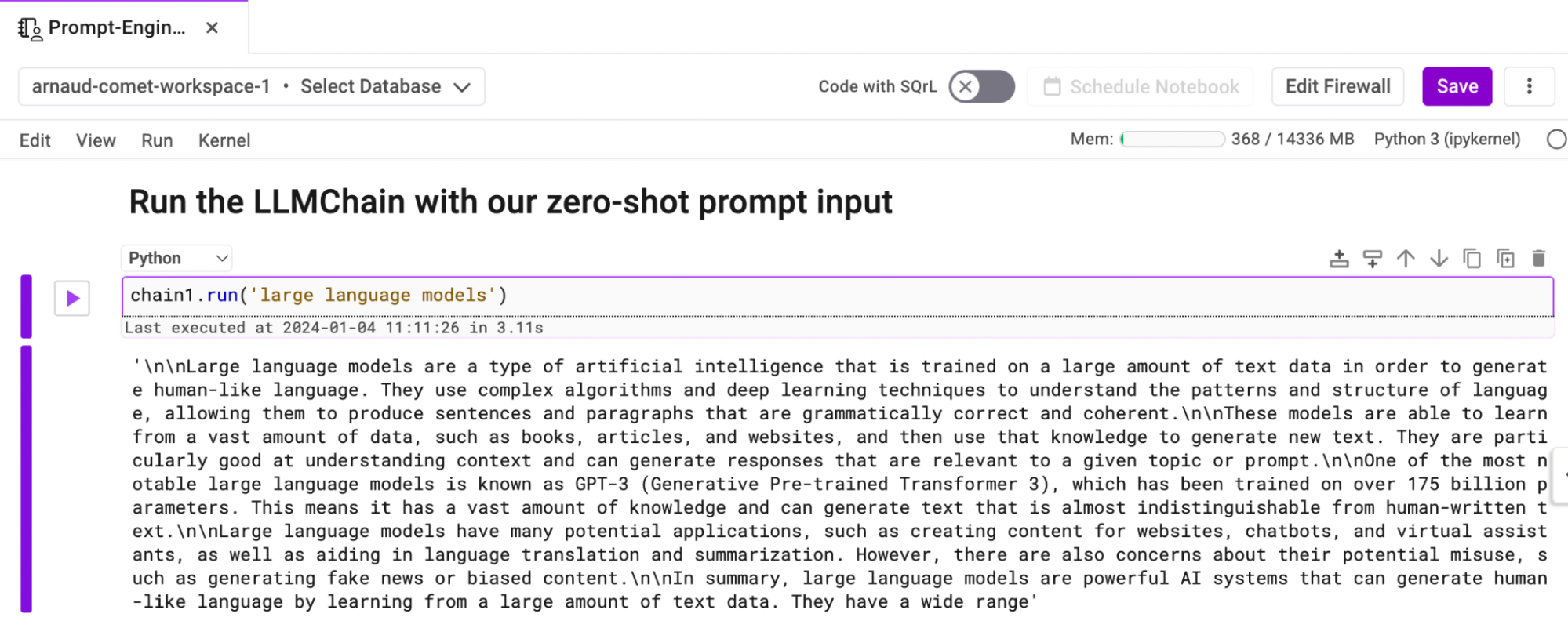

Run the LLMChain with zero-shot prompt input. Here, my input is ‘large language models.’

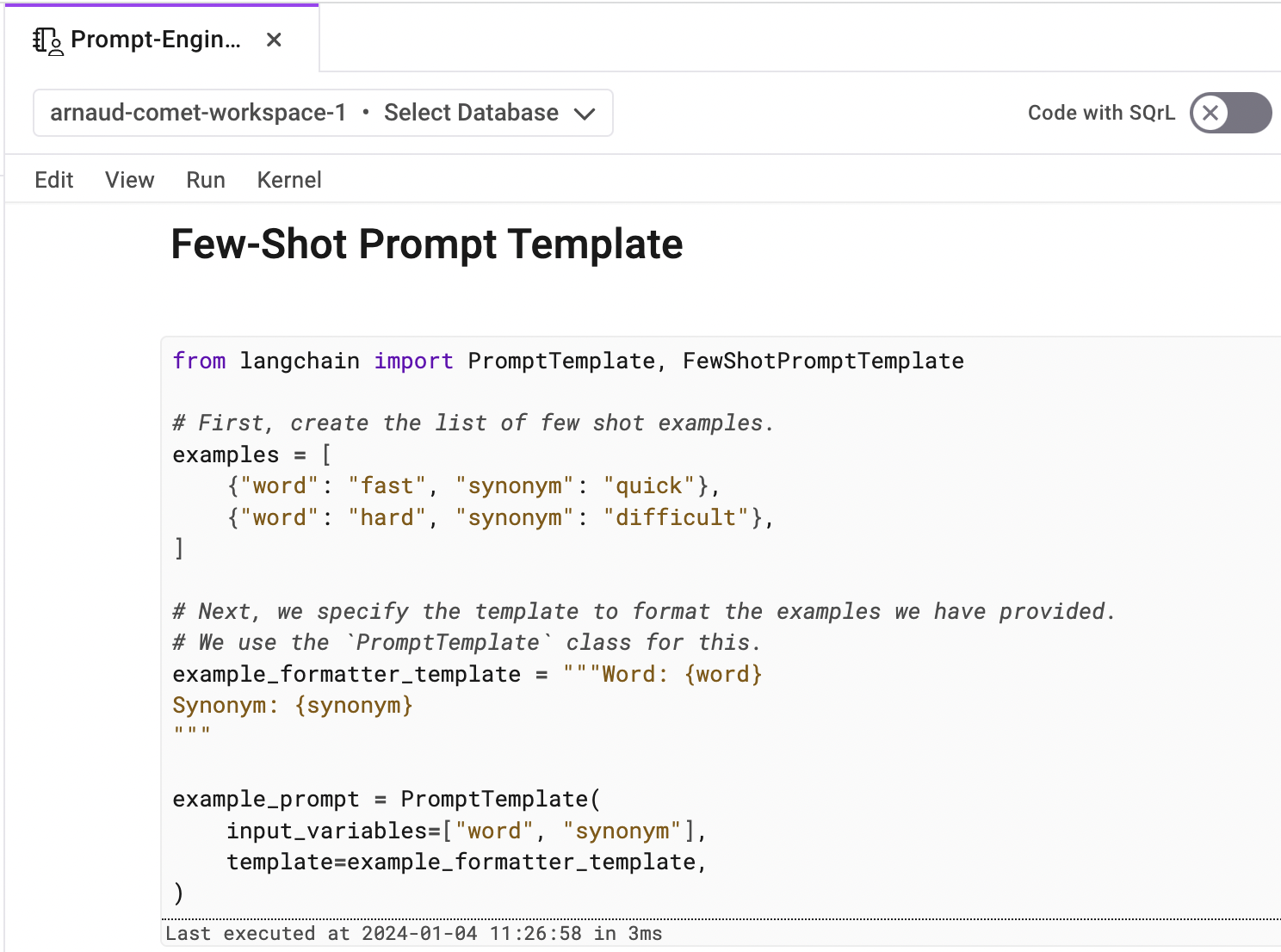

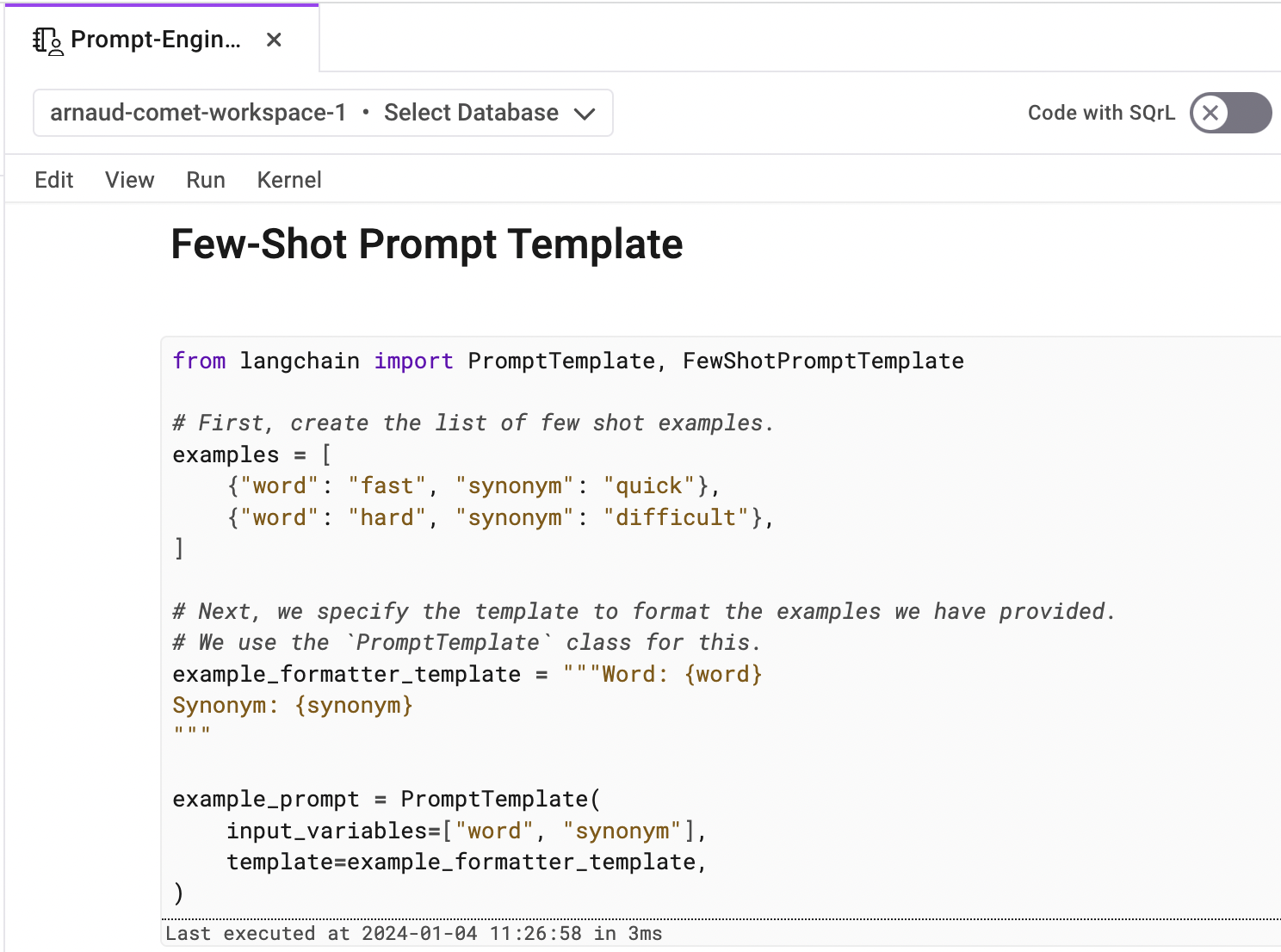
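Conceptually, the chain fills the template and passes the resulting prompt to the model. The offline sketch below assumes that behavior; `make_chain` and `echo_llm` are hypothetical, and `echo_llm` simply echoes its input where a real notebook would call the OpenAI model. Recent LangChain versions also recommend composing a prompt and model as `prompt | llm` in place of `LLMChain`.

```python
from typing import Callable


def make_chain(template: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Mimic an LLM chain: fill the {topic} slot, then send the prompt to the model."""
    def run(topic: str) -> str:
        prompt = template.format(topic=topic)
        return llm(prompt)
    return run


def echo_llm(prompt: str) -> str:
    # Placeholder for a real model call; echoes the prompt so the sketch runs offline.
    return f"[model response to] {prompt}"


zero_shot_template = "Write a short, engaging introduction to {topic} for a technical blog."
chain = make_chain(zero_shot_template, echo_llm)
print(chain("large language models"))
```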

This is how a few-shot prompt template looks.
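LangChain’s `FewShotPromptTemplate` builds a prompt from a prefix, a list of examples rendered through an example template, and a suffix that holds the user’s input. The plain-Python sketch below reproduces that layout, assuming a blank-line separator between parts; `few_shot_template` and the antonym examples are illustrative, not the notebook’s actual code.

```python
from typing import Dict, List


def few_shot_template(
    examples: List[Dict[str, str]],
    example_format: str,
    prefix: str,
    suffix: str,
    separator: str = "\n\n",
) -> str:
    """Join prefix, formatted examples, and suffix. Only the examples are
    formatted here, so the suffix keeps its {input} slot open for later."""
    formatted = [example_format.format(**ex) for ex in examples]
    return separator.join([prefix, *formatted, suffix])


examples = [
    {"word": "happy", "antonym": "sad"},
    {"word": "tall", "antonym": "short"},
]
template = few_shot_template(
    examples,
    example_format="Word: {word}\nAntonym: {antonym}",
    prefix="Give the antonym of every input.",
    suffix="Word: {input}\nAntonym:",
)
print(template.format(input="big"))
```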

The complete Notebook code is available in this GitHub repository.

Skills Required To Become a Prompt Engineer

Understanding the common skills a prompt engineer needs is important. As the bridge between human intentions and AI responses, the role demands a unique blend of technical and soft skills. Here are six skills to consider if you want to create meaningful and effective prompts.

Communication and Collaboration Skills

Essential for understanding user needs and working with internal teams, effective communication and collaborative skills enable prompt engineers to articulate complex ideas and integrate input from various stakeholders. They foster a productive environment, ensuring the development of prompts aligns with user intent and technical capabilities.

Creative and Critical Thinking

These skills are vital for generating innovative and effective prompts. Creative thinking allows for the exploration of novel ideas and approaches, while critical thinking ensures that prompts are logical, ethical, and meet the desired outcome. This blend of creativity and scrutiny is crucial for crafting quality prompts that engage users and perform as intended.

Basic AI and NLP Knowledge

Understanding the fundamentals of artificial intelligence and natural language processing is crucial for a prompt engineer. This knowledge helps in grasping how AI models interpret and generate language, enabling the creation of prompts that effectively communicate with the AI to produce accurate and relevant responses.

Writing Styles

Mastery of various writing styles is important for prompt engineering since different scenarios require different types of communication. Whether it’s concise instructional writing, creative storytelling, or technical documentation, the ability to adapt writing style to fit the context is key in crafting effective prompts.

Programming Knowledge

A strong foundation in programming — particularly in languages like Python — is beneficial for prompt engineers. Programming skills allow for the automation of tasks, manipulation of data, and even customizing AI models. This technical proficiency can significantly enhance the efficiency and capability of prompt development.

Knowledge of Data Analysis

Understanding data analysis is important for prompt engineers since it allows them to interpret user interactions and model performance. This knowledge helps in refining prompts based on empirical evidence, ensuring they are optimized for both user engagement and accuracy. Data analysis skills are crucial for continuous improvement of prompt effectiveness.
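As a small illustration of using data to compare prompts, the sketch below computes the share of approved responses for each prompt variant. The log is invented and `approval_rates` is hypothetical; with samples this small, any difference is noise, so a real comparison would need far more interactions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def approval_rates(logs: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of responses users approved, per prompt variant."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for variant, was_approved in logs:
        totals[variant] += 1
        approved[variant] += int(was_approved)
    return {variant: approved[variant] / totals[variant] for variant in totals}


# Invented feedback log: (prompt variant, did the user accept the answer?)
logs = [
    ("zero_shot", True), ("zero_shot", False), ("zero_shot", False), ("zero_shot", True),
    ("few_shot", True), ("few_shot", True), ("few_shot", False), ("few_shot", True),
]
print(approval_rates(logs))  # {'zero_shot': 0.5, 'few_shot': 0.75}
```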

Prompt engineering is a critical and evolving field that enables more effective interactions with AI models. By understanding its core principles, exploring various techniques, and following best practices, users can craft prompts that noticeably improve the quality and relevance of AI responses. The tutorial above shows how these ideas translate into code and offers a hands-on starting point. As generative AI continues to advance, skillful prompt engineering will only grow in importance, promising more intuitive, efficient, and powerful collaboration between humans and AI.
