The In-Credible Robot Priest and the Limits of Robot Workers

In the heart of Kyoto, Japan, sits the more than 400-year-old Kodai-ji Temple, graced with ornate cherry blossoms, traditional maki-e art—and now a robot priest made from aluminum and silicone.

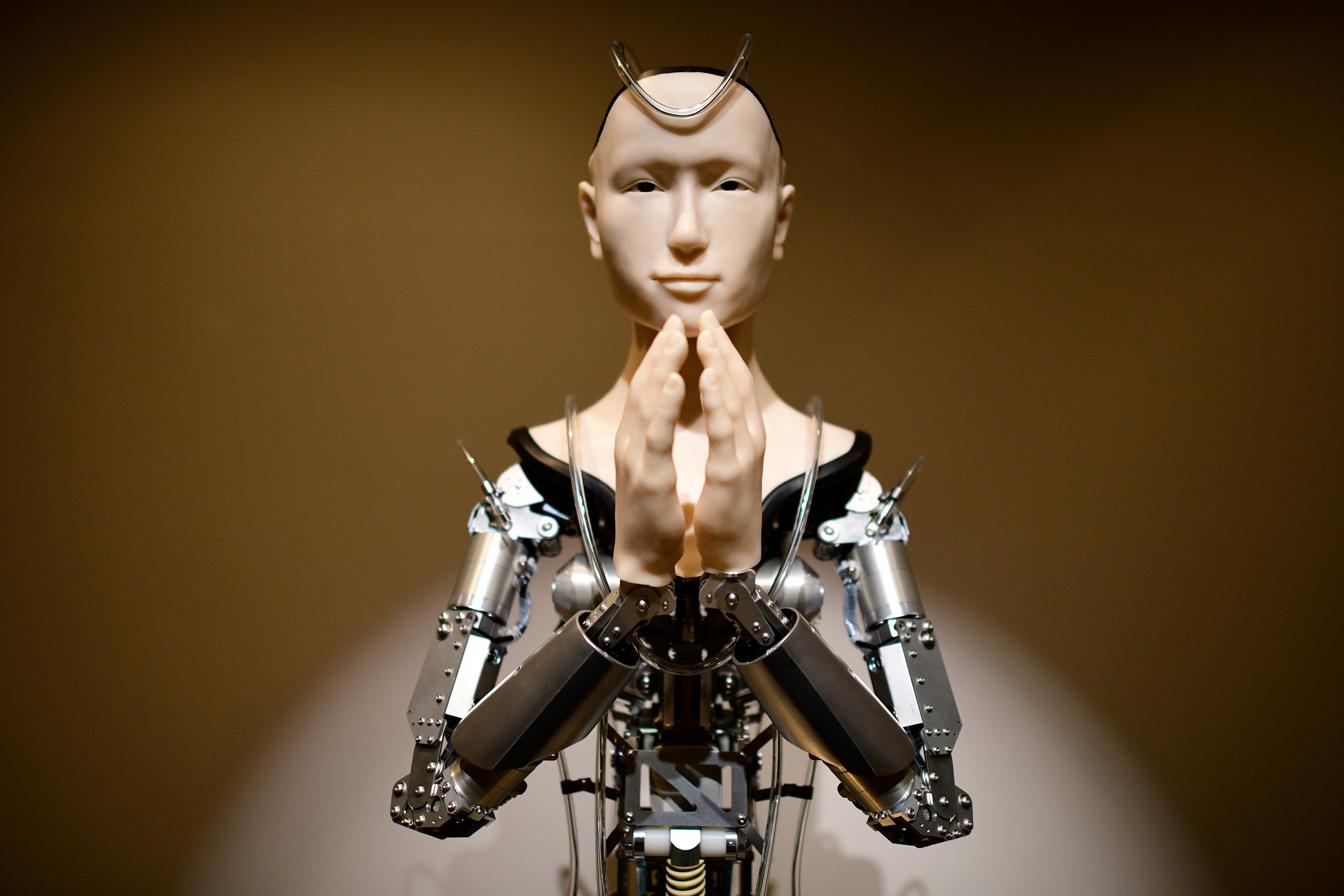

“Mindar,” a robot priest designed to resemble the Buddhist goddess of mercy, is part of a growing robotic workforce that is exacerbating job insecurity across industries. Robots have even infiltrated fields that once seemed immune to automation, such as journalism and psychotherapy. Now people are debating whether robots and artificial intelligence systems can replace priests and monks. Would it be naive to think these occupations are safe? Is there anything robots cannot do?

We think that some jobs will never succumb to robot overlords. Years of studying the psychology of automation have shown us that such machines still lack one quality: credibility. And without it, Mindar and other robot priests will never outperform humans. Engineers rarely think about credibility when they design robots, but it may define which jobs cannot be successfully automated. The implications extend far beyond religion.

What is credibility, and why is it so important? Credibility is a counterpart to capability. Capability describes whether you can do something, while credibility describes whether people trust you to do something. Credibility is one’s reputation as an authentic source of information.

Scientific studies show that earning credibility involves behaving in a way that would be tremendously costly or irrational if you did not truly hold your beliefs. When Greta Thunberg traveled to the 2019 United Nations Climate Action Summit by boat, she signaled an authentic belief that people need to act immediately to curb climate change. Religious leaders display credibility through pilgrimage and celibacy, practices that would not make sense if they did not authentically hold their beliefs. When religious leaders lose credibility, as in the wake of the Catholic Church’s sexual abuse scandals, religious institutions lose money and followers.

Robots are highly capable, but they may not be credible. Studies show that credibility requires authentic beliefs and sacrifice on behalf of these beliefs. Robots can preach sermons and write political speeches, but they do not authentically understand the beliefs they convey. Nor can robots truly engage in costly behavior such as celibacy because they do not feel the cost.

Over the past two years we have partnered with places of worship, including Kodai-ji Temple, to test whether this lack of credibility would hurt religious institutions that employ robot priests. Our hypothesis was not a foregone conclusion. Mindar and other robot priests are spectacles, with wall-to-wall video screens and immersive sound effects. Mindar swivels throughout its sermons to make eye contact with its audience while its hands are clasped together in prayer. Visitors have flocked to experience these sermons. Even with these special effects, however, we doubted whether robot priests could truly inspire people to feel committed to their faith and their religious institutions.

We conducted three related studies, which are described in a recently published paper. In our first study, we recruited people as they left Kodai-ji. Some had seen Mindar, and some had not. We asked people about the credibility of both Mindar and the human monks who work at the temple, and then gave them an opportunity to donate to the temple. We found that people rated Mindar as less credible than the human monks who work at Kodai-ji. We also found that people who saw the robot were 12 percentage points less likely to donate money to the temple (68 percent) than those who had visited the temple but did not watch Mindar (80 percent).

We then replicated this finding twice in our paper: In a follow-up study, we randomly assigned Taoists to watch either a human or a robot deliver a passage from the Tao Te Ching. In a third study, we measured Christians’ subjective religious commitment after they read a sermon that we told them was composed by either a human or a chatbot. In both studies, people rated robots as less credible than humans, and they expressed less commitment to their religious identity after a robot-delivered sermon, compared with a human-delivered one. Participants who saw the robot deliver the Tao Te Ching sermon were also 12 percentage points less likely to circulate a flyer advertising the temple (18 percent) than those who watched the human priest (30 percent).
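The two comparisons reported above (68 versus 80 percent for donations, 18 versus 30 percent for flyers) are each a gap of 12 percentage points, but they are quite different as relative drops. The short Python sketch below works through that arithmetic. The function name `gap` is ours, introduced only for illustration, and the inputs are just the four rates quoted in the text, not additional data from the paper.

```python
def gap(human_pct: float, robot_pct: float) -> tuple[float, float]:
    """Return (percentage-point gap, relative drop in percent).

    human_pct: rate in the group that did not see the robot
    robot_pct: rate in the group that saw the robot
    """
    points = human_pct - robot_pct            # absolute gap, in percentage points
    relative = 100.0 * points / human_pct     # drop relative to the human baseline
    return points, relative


# Rates quoted in the article text.
donation = gap(80.0, 68.0)   # Kodai-ji visitors who donated
flyer = gap(30.0, 18.0)      # Taoist participants who circulated a flyer

print("donation:", donation)
print("flyer:", flyer)
```

Read this way, the same 12-point gap corresponds to a relative drop of about 15 percent for donations and about 40 percent for flyers, because the baseline rates differ.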

We think that these studies have implications beyond religion and foreshadow the limits of automation. Current discussions about the future of automation have focused on capabilities. The website Will Robots Take My Job? uses data on robotic capabilities to estimate the likelihood that any job will be automated soon. In a recent editorial, administrators at Singapore Management University argued that the best path to employment in the age of AI was to cultivate “distinctively human capabilities.”

Focusing solely on capability, however, may be blinding us to spaces where robots are underperforming because they are not credible.

This robot credibility penalty is currently being felt in domains ranging from journalism to health care, law, the military and self-driving vehicles. Robots can capably execute activities in these domains. But they fail to inspire trust, which is crucial. People are less likely to believe news headlines produced by AI, and they do not want machines making moral decisions about who lives and who dies in drone strikes or auto collisions.

Education might also suffer from the credibility penalty. The Khan Academy, which publishes online tools for student education, recently released a generative AI, called Khanmigo, that provides developmental feedback rather than straight answers to students. But will these programs work?

Just as the pious need a leader who has sacrificed for their beliefs, students need role models who authentically care about what they teach. They need competent and credible teachers. Automating education could therefore further widen education inequality. Whereas students from wealthy backgrounds will have access to human teachers with AI assistance, those from poorer backgrounds may end up in classrooms instructed solely by AI.

Politics and social activism are other places where credibility matters. Imagine robots trying to deliver either Abraham Lincoln’s Gettysburg Address or Martin Luther King’s “I Have a Dream” speech. These speeches were so powerful because they were imbued with their authors’ authentic pain and love.

Jobs requiring credibility will operate far more effectively if they find a way to complement AI capabilities with human credibility rather than replace human workers. People may not trust robot journalists and teachers, but they will trust humans in these roles who use AI for assistance.

Properly forecasting the future of work involves recognizing the occupations that need a human touch and protecting human employees in those spaces. In the rush to advance AI technology, we must remember that there are some things robots cannot do.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.
