Guidelines for Writing Efficient NVRAM Algorithm

In an era when data management is critical to business success, exponential data growth presents a number of challenges for technology departments, including DRAM density limitations and strict budget constraints. These issues are driving the adoption of memory tiering, a game-changing approach that alters how data is handled and stored. Non-Volatile Random Access Memory (NVRAM), which is becoming more affordable and popular, is one of the key technologies designed to work within a tiered memory architecture.

This article will investigate the fundamentals of NVRAM, compare it to traditional solutions, and provide guidelines for writing efficient NVRAM algorithms.

What Is NVRAM?

Non-Volatile Random Access Memory, or NVRAM, is a type of memory that retains data even when the power is turned off. It combines the RAM (Random Access Memory) and ROM (Read Only Memory) properties, allowing data to be read and written quickly like RAM while also being retained when the power is turned off like ROM.

It is available on Intel-based computers and employs 3D Xpoint, a revolutionary memory technology that strikes a balance between the high speed of RAM and the persistence of traditional storage, providing a new solution for high-speed, long-term data storage and processing.

NVRAM is typically used for specific purposes such as system configuration data storage rather than as a general-purpose memory for running applications.

NVRAM is used in a variety of memory modules, including:

- Dual In-line Memory Modules (DIMMs), which use NVRAM to store firmware data or provide persistent memory

- Solid State Drives (SSDs) that use NVRAM to store firmware, wear-leveling data, and sometimes to cache writes

- Motherboard Chipsets that use NVRAM to store BIOS or UEFI settings

- PCIe Cards that use NVRAM for high-speed data storage or caching

- Hybrid memory modules use NVRAM in addition to traditional RAM.

What Are the Differences Between NVRAM and RAM?

To gain a better understanding of the differences between NVRAM and RAM, it is necessary to review the concepts of both types of memory.

RAM, or Random Access Memory, is a type of memory that can be read and written to in any order and is commonly used to store working data and machine code. RAM is “volatile” memory, which means it can store data until the computer is powered down.

Unlike RAM, NVRAM can retain stored information, making it ideal for storing critical data that must persist across reboots. It may contain information such as system configurations, user settings, or application state.

Apart from this critical distinction, these types of memory differ in other ways that define their advantages and disadvantages:

- Speed: While DRAM is fast, especially when it comes to accessing and writing data, its speed is typically lower than what NVRAM strives for. NVRAM, in addition to potentially competing with DRAM (Dynamic RAM) in terms of speed, offers the durability of traditional non-volatile memory.

- Energy consumption: NVRAM consumes less power than RAM/DRAM, owing to the fact that it does not require power to retain data, whereas the latter requires constant refreshing.

- Cost and availability: NVRAM may be more expensive and less widely available at first than established memory technologies such as RAM, which is widely available and available in a variety of price ranges.

What Distinguishes NVRAM Algorithms?

Because NVRAM allows for the direct storage of important bits of information (such as program settings) in memory, it becomes a game changer in the industry. The NVRAM algorithms are defined by several key characteristics:

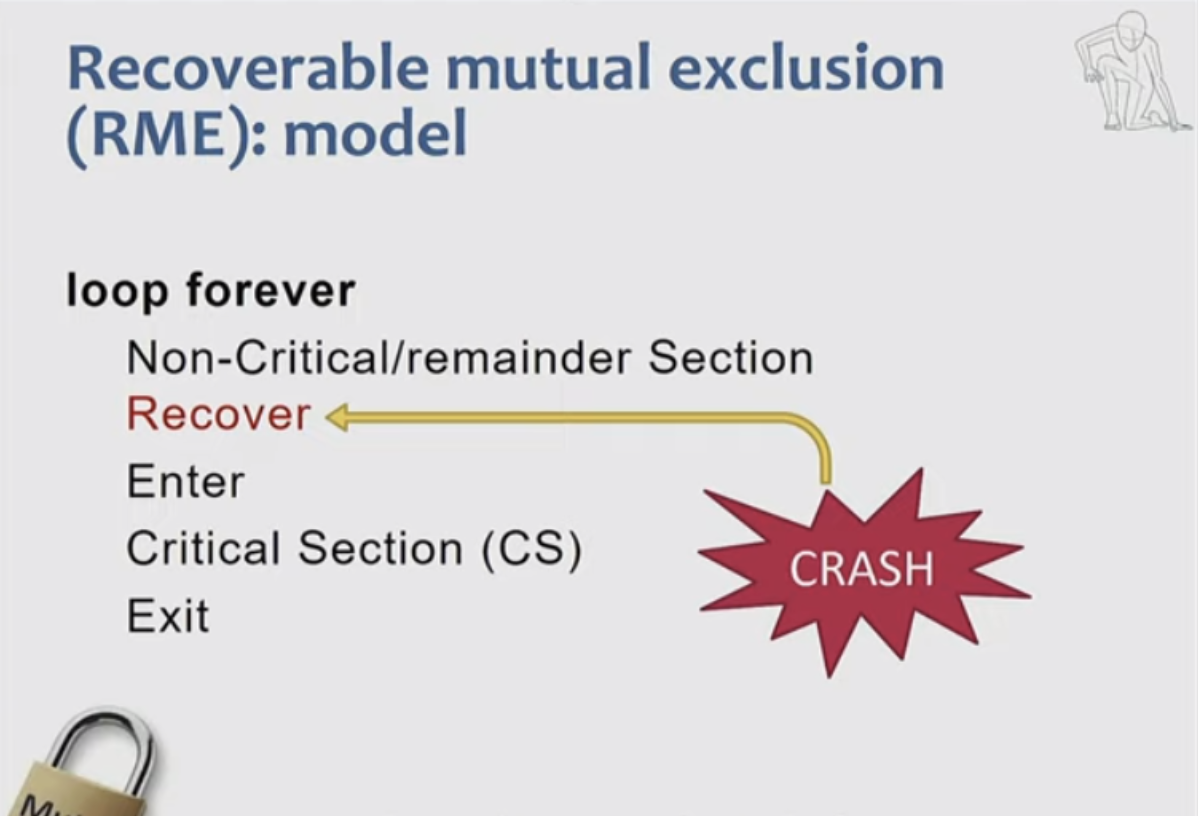

- NVRAM provides new opportunities for developing recoverable algorithms, allowing for efficient recovery of a program’s state following system or individual process failure.

- NVRAM frequently has faster read and write speeds than traditional magnetic disc drives or flash-based SSDs, making it suitable for high-performance computing and real-time tasks that require quick data access.

- Some types of NVRAM, such as flash memory, are prone to wear due to frequent rewrites. This necessitates the use of special wear-leveling algorithms, which distribute write operations evenly across the memory in order to extend its lifespan.

- Integrating NVRAM into systems necessitates taking into account its distinct characteristics, such as access speed and wear management. This could entail modifying existing algorithms and system architectures.

How To Write NVRAM Algorithms

Mutual Exclusion Algorithms

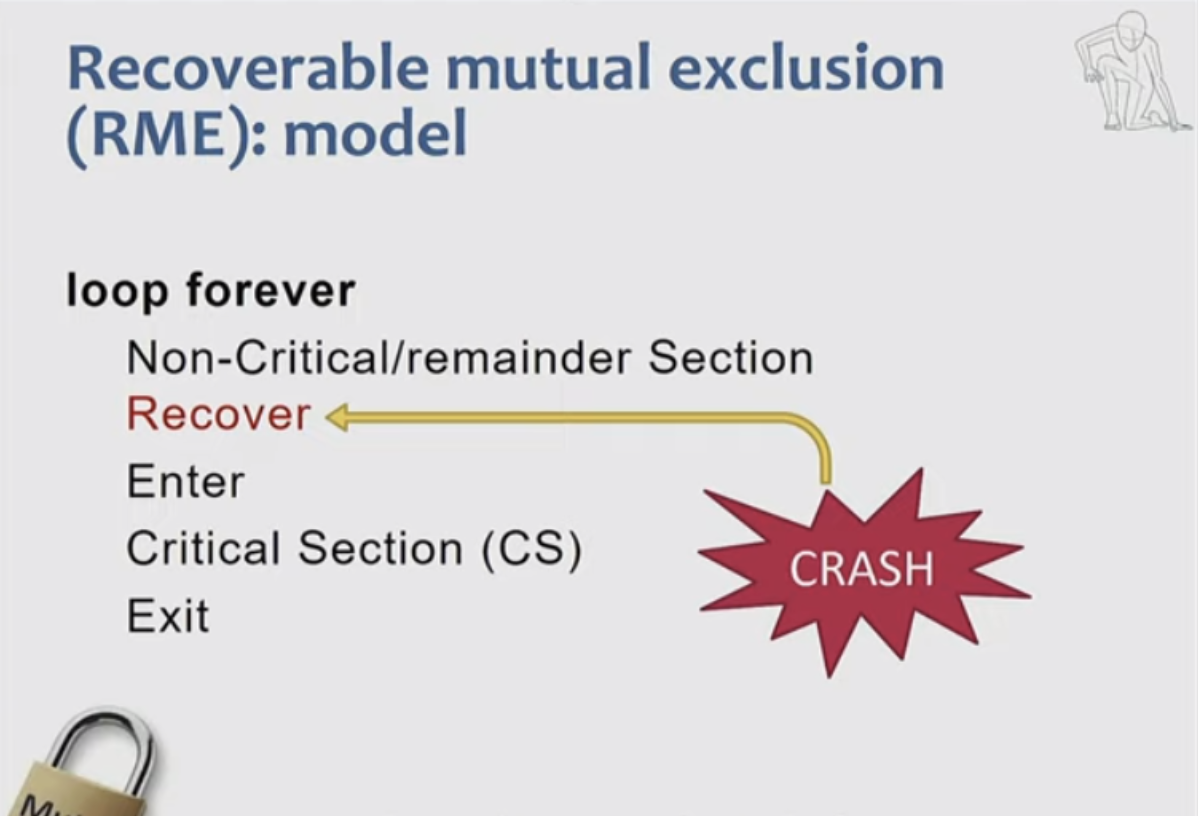

Mutex (Mutual Exclusion) algorithms are designed to ensure that multiple processes can manage access to shared resources without conflict, even in the event of system crashes or power outages.

The following are the key requirements for this type of algorithm:

- Mutual exclusion: It ensures that only one process or thread can access a critical section at the same time, preventing concurrent access to shared resources.

- Deadlock-free: This avoids situations in which processes are indefinitely waiting for each other to release resources, ensuring that programs run continuously.

- Starvation-free: This ensures that every process has access to the critical section, preventing indefinite delays for any process.

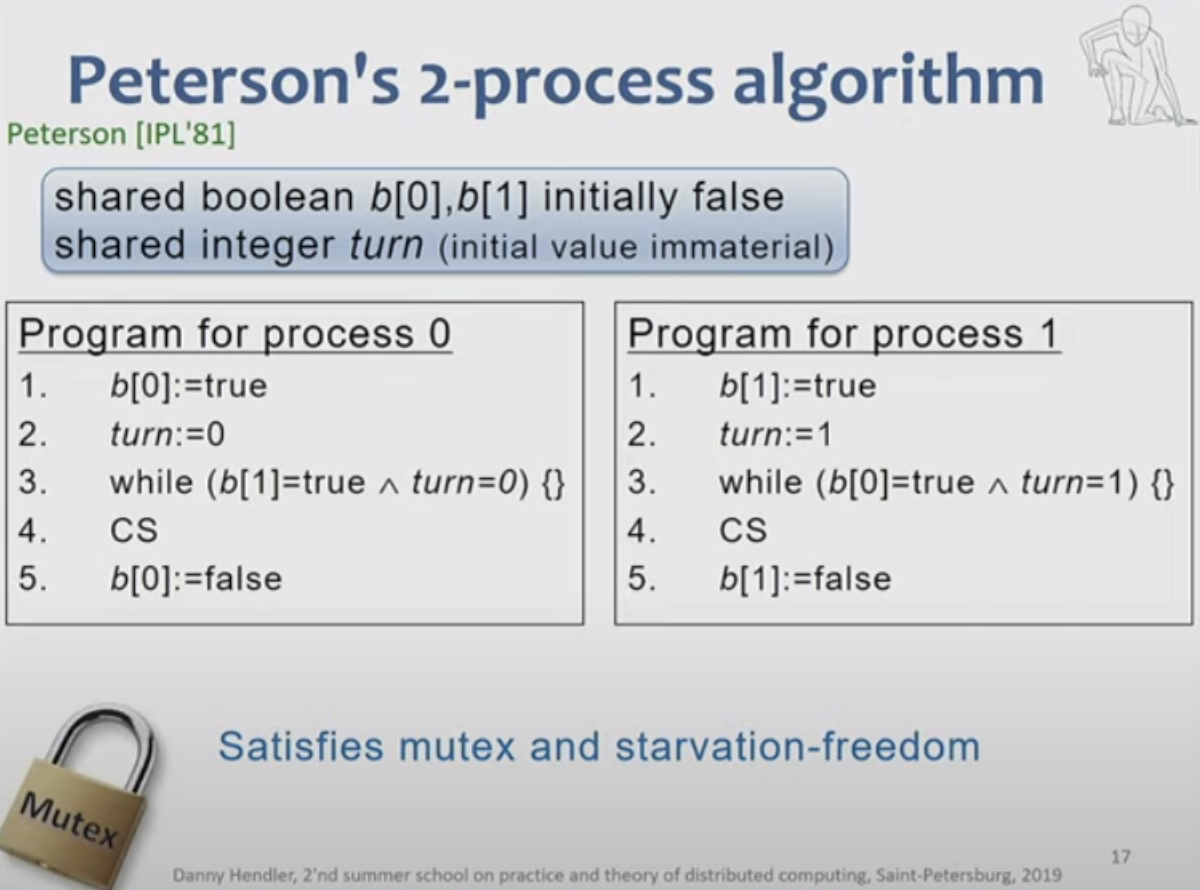

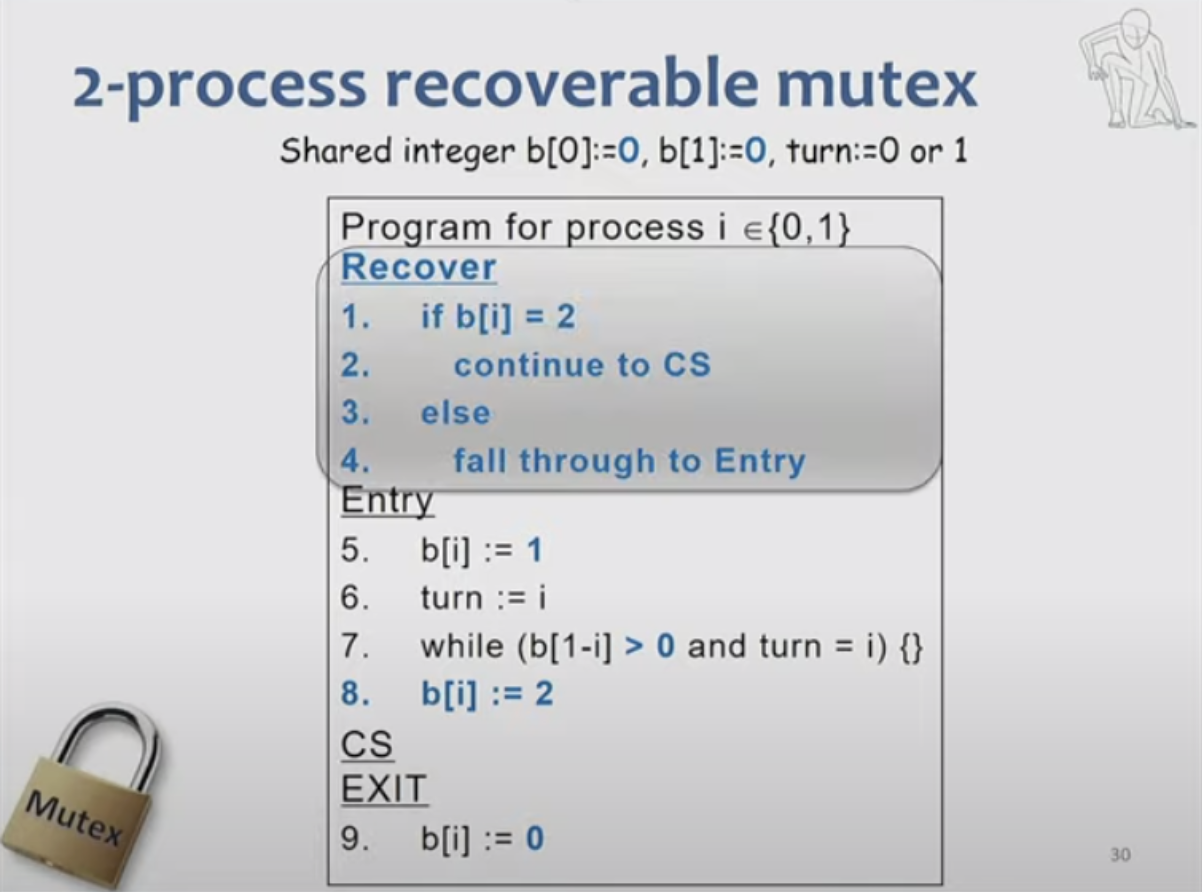

Peterson’s Algorithm for NVRAM

Peterson’s Algorithm is an example of an algorithm that can be adapted for NVRAM. In computer science, it is a concurrency control algorithm used to achieve mutual exclusion in multi-threading environments. It enables multiple processes to share a single-use resource without conflict while ensuring that only one process has access to the resource at any given time. In an NVRAM environment, Peterson’s algorithm, which was originally designed for two processes, can be extended to support multiple processes (from 0 to n-1).

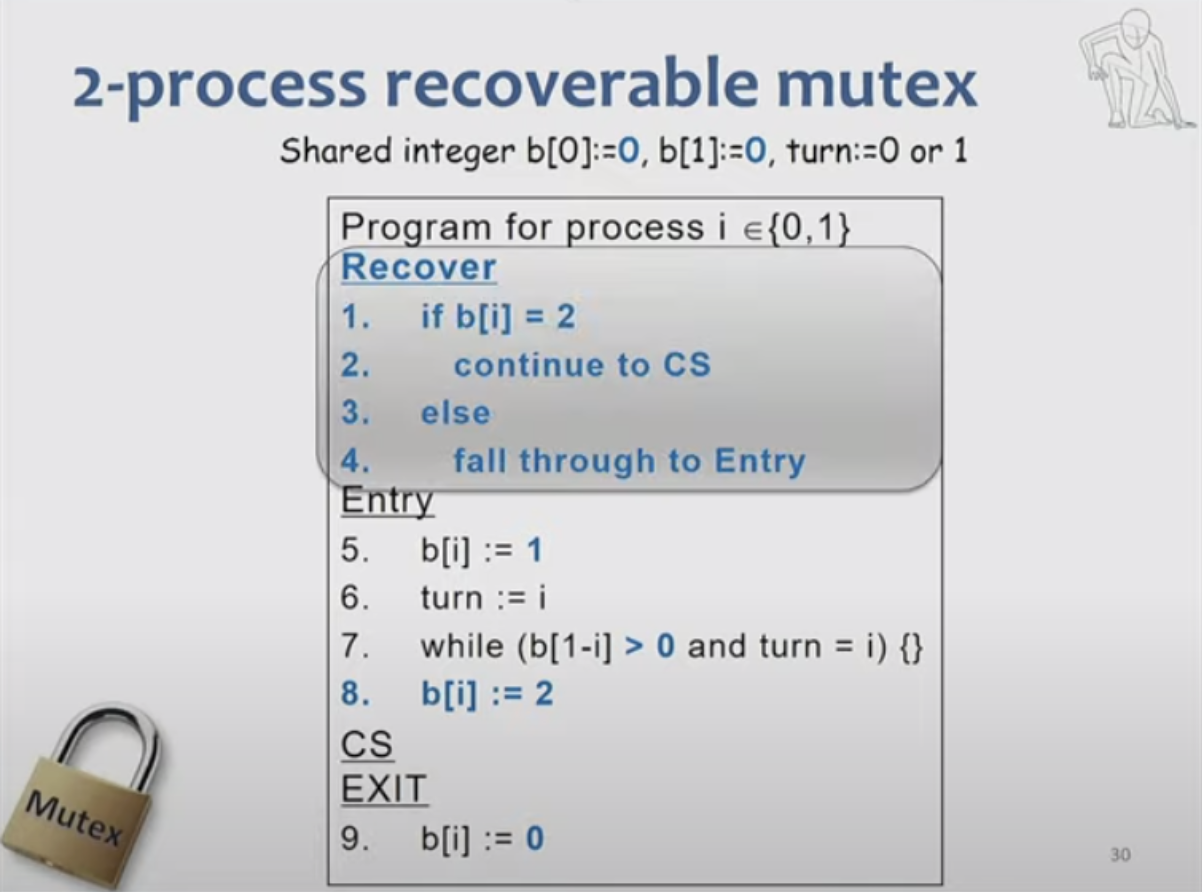

Adapting Peterson’s algorithm for NVRAM entails not only expanding it to support multiple processes but also incorporating mechanisms for post-failure recoverability.

To adapt Peterson’s algorithm for recoverability in NVRAM include specific recovery code that allows a process to re-enter the critical section after a crash. This might involve checking the state of shared variables or locks to determine the last known state before the crash.

To write the algorithm, you must first complete the following steps:

- Initialization: In NVRAM, define shared variables (flag array, turn variable). Set these variables to their default values, indicating that no processes are currently in the critical section.

- Entry section: Each process that attempts to enter the critical section sets a flag in the NVRAM. After that, the process sets the turn variable to indicate its desire to enter the critical section. It examines the status of other processes’ flags as well as the turn variable to see if it can enter the critical section.

- Critical section: Once inside, the process gets to work. NVRAM stores any state changes or operations that must be saved.

- Exit section: When the process completes its operations, it resets its flag in the NVRAM, indicating that it has exited the critical section.

- Recovery mechanism: Include code to handle crashes during entry or exit from the critical section.

- If a process fails in the entry section, it reads the state of competitors and determines whether to continue.

- If a process crashes in the exit section, it re-executes the entry section to ensure proper state updates.

- Handling process failures: Use logic to determine whether a failed process completed its operation in the critical section and take appropriate action.

- Tournament tree for process completion: Create a hierarchical tournament tree structure. Each process traverses this tree, running recovery and entry code at each level.

- If necessary, include an empty recovery code segment to indicate that the process is aware of its failure state.

Nonblocking Algorithms

Nonblocking algorithms are a type of concurrent programming algorithm that enables multiple threads to access and modify shared data without using locks or mutual exclusion mechanisms. These algorithms are intended to ensure that the failure or suspension of one thread does not prevent other threads from progressing.

The following are the primary requirements of nonblocking algorithms:

- Nonblocking algorithms are frequently lock-free, which means that at least one thread makes progress in a finite number of steps even if other threads are delayed indefinitely.

- Wait-free: A more powerful type of nonblocking algorithm is wait-free, in which each thread is guaranteed to complete its operation in a finite number of steps, regardless of the activity of other threads.

- Obstruction-free: The most basic type of nonblocking algorithm, in which a thread can finish its operation in a finite number of steps if it eventually operates without interference from other threads.

Linearizability is a key concept in concurrent programming that is associated with nonblocking algorithms. It ensures that all operations on shared resources (such as read, write, or update) appear to occur in a single, sequential order that corresponds to the actual order of operations in real time.

Nonblocking Algorithm Example

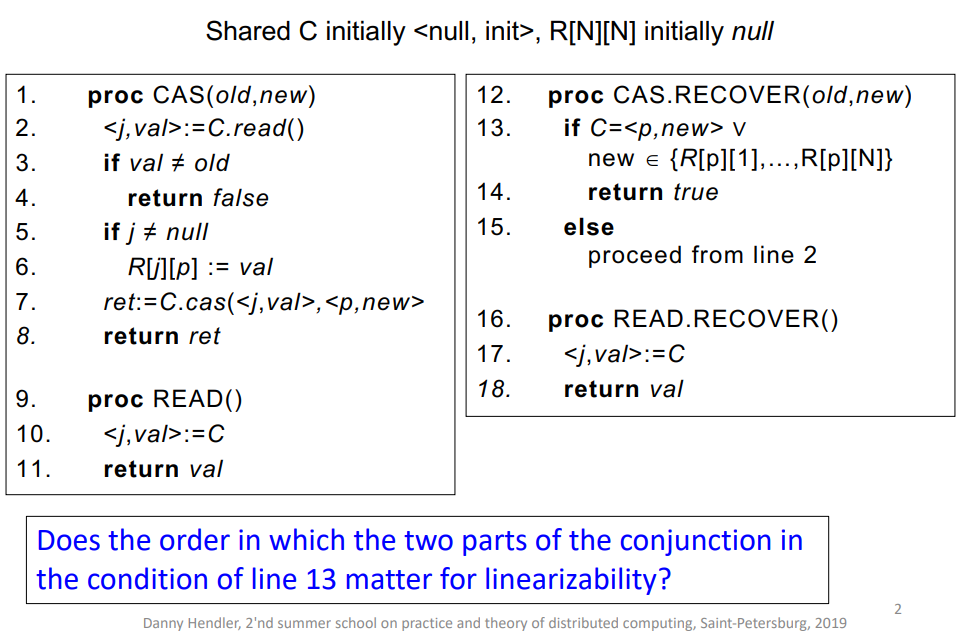

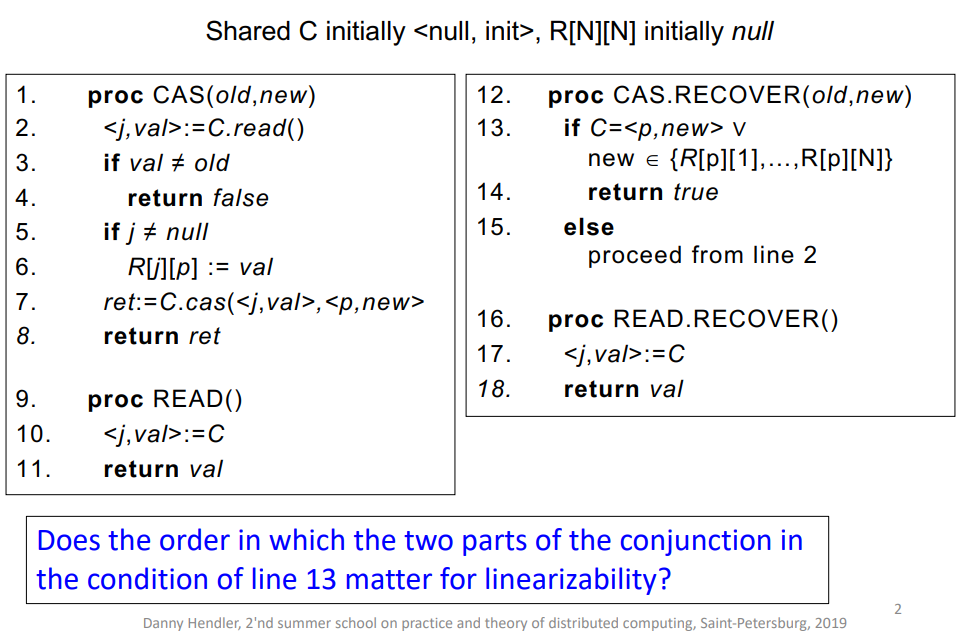

Let’s take a look at the recoverable version of the CAS program, which is intended to make operations more resilient to failures. The use of a two-dimensional array is a key feature of this implementation. This array acts as a log or record, storing information about which process (or “who”) wrote a value and when it happened. Such logging is essential in a recoverable system, particularly in NVRAM, where data persists despite system reboots or failures.

The linearizability of operations, which ensures that operations appear to occur in a sequential order consistent with their actual execution, is a key feature of this algorithm. The CAS RECOVER function’s evaluation order is critical for maintaining linearizability:

- If process

p1fails after a successfulCASoperation and then recovers, evaluating the second part of the expression inCAS.RECOVERfirst can lead to non-linearizable execution. - This is because another process,

p2, could complete aCASoperation in the meantime, changing the state in a way that’s not accounted for ifp1only checks the second part of the condition. - Therefore, the first part of the condition (checking

C=<p,new>) must be evaluated before the second part (checking if new is inR[p][1] to R[p][N]).

Conclusion

This article delves into the fundamental concepts of NVRAM, a new type of memory, compares it to RAM, presents key requirements for mutex and nonblocking algorithms, and offers guidelines for developing efficient NVRAM algorithms.

In an era when data management is critical to business success, exponential data growth presents a number of challenges for technology departments, including DRAM density limitations and strict budget constraints. These issues are driving the adoption of memory tiering, a game-changing approach that alters how data is handled and stored. Non-Volatile Random Access Memory (NVRAM), which is becoming more affordable and popular, is one of the key technologies designed to work within a tiered memory architecture.

This article will investigate the fundamentals of NVRAM, compare it to traditional solutions, and provide guidelines for writing efficient NVRAM algorithms.

What Is NVRAM?

Non-Volatile Random Access Memory, or NVRAM, is a type of memory that retains data even when the power is turned off. It combines the RAM (Random Access Memory) and ROM (Read Only Memory) properties, allowing data to be read and written quickly like RAM while also being retained when the power is turned off like ROM.

It is available on Intel-based computers and employs 3D Xpoint, a revolutionary memory technology that strikes a balance between the high speed of RAM and the persistence of traditional storage, providing a new solution for high-speed, long-term data storage and processing.

NVRAM is typically used for specific purposes such as system configuration data storage rather than as a general-purpose memory for running applications.

NVRAM is used in a variety of memory modules, including:

- Dual In-line Memory Modules (DIMMs), which use NVRAM to store firmware data or provide persistent memory

- Solid State Drives (SSDs) that use NVRAM to store firmware, wear-leveling data, and sometimes to cache writes

- Motherboard Chipsets that use NVRAM to store BIOS or UEFI settings

- PCIe Cards that use NVRAM for high-speed data storage or caching

- Hybrid memory modules use NVRAM in addition to traditional RAM.

What Are the Differences Between NVRAM and RAM?

To gain a better understanding of the differences between NVRAM and RAM, it is necessary to review the concepts of both types of memory.

RAM, or Random Access Memory, is a type of memory that can be read and written to in any order and is commonly used to store working data and machine code. RAM is “volatile” memory, which means it can store data until the computer is powered down.

Unlike RAM, NVRAM can retain stored information, making it ideal for storing critical data that must persist across reboots. It may contain information such as system configurations, user settings, or application state.

Apart from this critical distinction, these types of memory differ in other ways that define their advantages and disadvantages:

- Speed: While DRAM is fast, especially when it comes to accessing and writing data, its speed is typically lower than what NVRAM strives for. NVRAM, in addition to potentially competing with DRAM (Dynamic RAM) in terms of speed, offers the durability of traditional non-volatile memory.

- Energy consumption: NVRAM consumes less power than RAM/DRAM, owing to the fact that it does not require power to retain data, whereas the latter requires constant refreshing.

- Cost and availability: NVRAM may be more expensive and less widely available at first than established memory technologies such as RAM, which is widely available and available in a variety of price ranges.

What Distinguishes NVRAM Algorithms?

Because NVRAM allows for the direct storage of important bits of information (such as program settings) in memory, it becomes a game changer in the industry. The NVRAM algorithms are defined by several key characteristics:

- NVRAM provides new opportunities for developing recoverable algorithms, allowing for efficient recovery of a program’s state following system or individual process failure.

- NVRAM frequently has faster read and write speeds than traditional magnetic disc drives or flash-based SSDs, making it suitable for high-performance computing and real-time tasks that require quick data access.

- Some types of NVRAM, such as flash memory, are prone to wear due to frequent rewrites. This necessitates the use of special wear-leveling algorithms, which distribute write operations evenly across the memory in order to extend its lifespan.

- Integrating NVRAM into systems necessitates taking into account its distinct characteristics, such as access speed and wear management. This could entail modifying existing algorithms and system architectures.

How To Write NVRAM Algorithms

Mutual Exclusion Algorithms

Mutex (Mutual Exclusion) algorithms are designed to ensure that multiple processes can manage access to shared resources without conflict, even in the event of system crashes or power outages.

The following are the key requirements for this type of algorithm:

- Mutual exclusion: It ensures that only one process or thread can access a critical section at the same time, preventing concurrent access to shared resources.

- Deadlock-free: This avoids situations in which processes are indefinitely waiting for each other to release resources, ensuring that programs run continuously.

- Starvation-free: This ensures that every process has access to the critical section, preventing indefinite delays for any process.

Peterson’s Algorithm for NVRAM

Peterson’s Algorithm is an example of an algorithm that can be adapted for NVRAM. In computer science, it is a concurrency control algorithm used to achieve mutual exclusion in multi-threading environments. It enables multiple processes to share a single-use resource without conflict while ensuring that only one process has access to the resource at any given time. In an NVRAM environment, Peterson’s algorithm, which was originally designed for two processes, can be extended to support multiple processes (from 0 to n-1).

Adapting Peterson’s algorithm for NVRAM entails not only expanding it to support multiple processes but also incorporating mechanisms for post-failure recoverability.

To adapt Peterson’s algorithm for recoverability in NVRAM include specific recovery code that allows a process to re-enter the critical section after a crash. This might involve checking the state of shared variables or locks to determine the last known state before the crash.

To write the algorithm, you must first complete the following steps:

- Initialization: In NVRAM, define shared variables (flag array, turn variable). Set these variables to their default values, indicating that no processes are currently in the critical section.

- Entry section: Each process that attempts to enter the critical section sets a flag in the NVRAM. After that, the process sets the turn variable to indicate its desire to enter the critical section. It examines the status of other processes’ flags as well as the turn variable to see if it can enter the critical section.

- Critical section: Once inside, the process gets to work. NVRAM stores any state changes or operations that must be saved.

- Exit section: When the process completes its operations, it resets its flag in the NVRAM, indicating that it has exited the critical section.

- Recovery mechanism: Include code to handle crashes during entry or exit from the critical section.

- If a process fails in the entry section, it reads the state of competitors and determines whether to continue.

- If a process crashes in the exit section, it re-executes the entry section to ensure proper state updates.

- Handling process failures: Use logic to determine whether a failed process completed its operation in the critical section and take appropriate action.

- Tournament tree for process completion: Create a hierarchical tournament tree structure. Each process traverses this tree, running recovery and entry code at each level.

- If necessary, include an empty recovery code segment to indicate that the process is aware of its failure state.

Nonblocking Algorithms

Nonblocking algorithms are a type of concurrent programming algorithm that enables multiple threads to access and modify shared data without using locks or mutual exclusion mechanisms. These algorithms are intended to ensure that the failure or suspension of one thread does not prevent other threads from progressing.

The following are the primary requirements of nonblocking algorithms:

- Nonblocking algorithms are frequently lock-free, which means that at least one thread makes progress in a finite number of steps even if other threads are delayed indefinitely.

- Wait-free: A more powerful type of nonblocking algorithm is wait-free, in which each thread is guaranteed to complete its operation in a finite number of steps, regardless of the activity of other threads.

- Obstruction-free: The most basic type of nonblocking algorithm, in which a thread can finish its operation in a finite number of steps if it eventually operates without interference from other threads.

Linearizability is a key concept in concurrent programming that is associated with nonblocking algorithms. It ensures that all operations on shared resources (such as read, write, or update) appear to occur in a single, sequential order that corresponds to the actual order of operations in real time.

Nonblocking Algorithm Example

Let’s take a look at the recoverable version of the CAS program, which is intended to make operations more resilient to failures. The use of a two-dimensional array is a key feature of this implementation. This array acts as a log or record, storing information about which process (or “who”) wrote a value and when it happened. Such logging is essential in a recoverable system, particularly in NVRAM, where data persists despite system reboots or failures.

The linearizability of operations, which ensures that operations appear to occur in a sequential order consistent with their actual execution, is a key feature of this algorithm. The CAS RECOVER function’s evaluation order is critical for maintaining linearizability:

- If process

p1fails after a successfulCASoperation and then recovers, evaluating the second part of the expression inCAS.RECOVERfirst can lead to non-linearizable execution. - This is because another process,

p2, could complete aCASoperation in the meantime, changing the state in a way that’s not accounted for ifp1only checks the second part of the condition. - Therefore, the first part of the condition (checking

C=<p,new>) must be evaluated before the second part (checking if new is inR[p][1] to R[p][N]).

Conclusion

This article delves into the fundamental concepts of NVRAM, a new type of memory, compares it to RAM, presents key requirements for mutex and nonblocking algorithms, and offers guidelines for developing efficient NVRAM algorithms.