AMD Is Optimistic on AI PCs Bringing Greater Efficiency to Enterprise

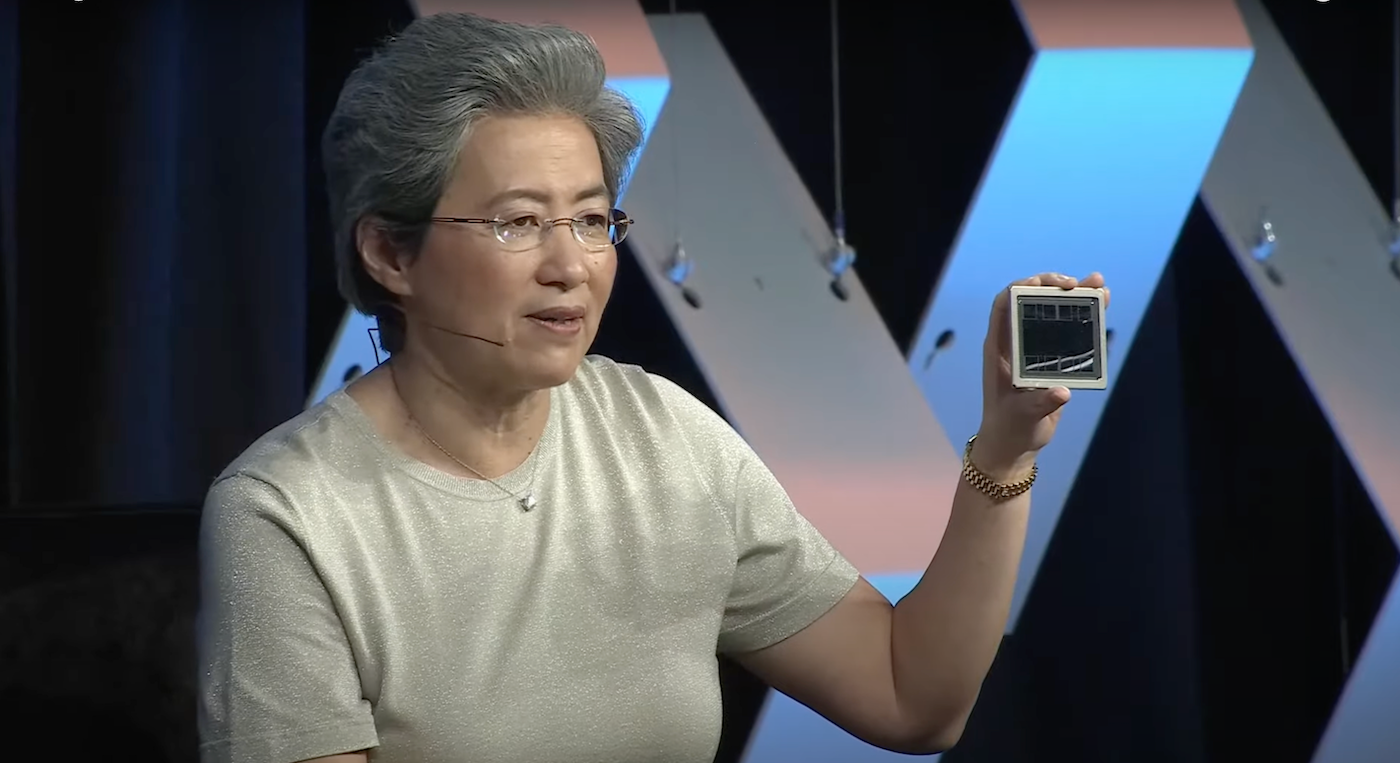

AMD CEO Lisa Su was optimistic about the future of AI on PCs during her March 11 keynote at SXSW in Austin, Texas, which was also livestreamed (Figure A). Su’s comments echo those of Jason Banta, vice president of product management at AMD, who spoke to TechRepublic in a call on March 8 about how professionals might use AI features on PCs going forward. AMD competes primarily with NVIDIA and Intel for the lucrative AI hardware market, and in her keynote Su addressed the competition with pack leader NVIDIA in particular.

The goal of AI PCs, according to AMD

“The goal of AI PCs is to make sure that every one of us has our own AI capability,” said Su. “You don’t have to go out into the cloud. You can actually operate on your own data. You can actually ask it questions. It’ll answer for you. It’ll answer for you faster. It’ll answer for you in a private manner, because maybe you don’t want your data going everywhere. And it’s just the beginning of what I think is the ability to make all of us much, much more productive.”

In service of that goal, AMD debuted the Ryzen 8040 Series processors in December 2023; they can run large AI models on Windows 11 laptops from Acer, Asus, Dell, HP, Lenovo and Razer. In January 2024, AMD announced a dedicated AI neural processing unit (NPU) for desktop PCs.

SEE: AMD, Intel and NVIDIA are part of a federal program meant to provide AI research resources. (TechRepublic)

How AMD benefited from purchasing Xilinx

Banta said one reason AMD has been able to bring out its own NPU so quickly is its 2022 purchase of semiconductor company Xilinx.

“There’s a great heritage [within Xilinx] of using AI for many of the embedded applications where Xilinx was already very competitive. We took that AI capability and that technology, and it [AI] was one of the first technology partnerships we saw once Xilinx and AMD merged. We used that technology and brought that into our Ryzen AI product line.

“And so by leveraging all that great experience of AI neural processing and technology, by bringing that into the Ryzen family, we were able to bring additional experiences and quality to our CPU customers.”

AI PC use cases for enterprise

For professionals, Banta said, the AI PC could take some of the drudgery out of workflows, freeing employees to be more creative. He added that the AI PC could reduce the cost of AI by removing the capital investment required to deploy AI solutions in the cloud.

“There’s cost advantages of running those models locally on the PC as opposed to running them in the cloud or running them on an on-premise server,” he said. “So there’s a natural affinity for bringing AI on-device for the commercial market.”

Because generative AI for the enterprise is still relatively new, Banta said, customers are still experimenting with how to use it.

“Microsoft has their Copilot solution. We see people, they’re not fully dependent on it yet, but they’re using it, they’re trying it out and they’re finding things that do enhance their workflows,” Banta said. “But it requires some of that experimentation. Within AMD, we’re using Copilot, and it’s something that as people experiment with it and try it out, they say, ‘Hey, I found this thing that enhances my workflow or speeds things up.’”

Competition in the AI PC market

AMD, NVIDIA and Intel are competing to bring generative AI client-side and to cut the time it takes for generative AI inference to run by moving some of that work to chips built into PCs. The idea is that AI processes will also be more power efficient on a PC.

Speaking at SXSW, Su responded to an audience member’s question about whether AMD can “catch up” with NVIDIA, saying, “We have a lot of respect for NVIDIA, a lot of respect. They’ve done tremendously from a roadmap standpoint, but we also have a lot of confidence in where we’re going.”

AMD’s plan to move forward with responsible AI

During Su’s SXSW presentation, audience members brought up some of the concerns around AI: energy use in data centers and responsible AI output in particular.

When asked about handling the proliferation of AI responsibly, Su pointed to AMD’s Responsible AI Council and said moving ahead with a sense of responsibility should not mean moving ahead slowly.

“We must go faster, we must experiment, and just do it with a watchful eye,” she said.

TechRepublic covered SXSW remotely.
